Why distributed AI governance suddenly matters for open‑source blockchains

When you plug machine learning models into smart contracts and DAOs, you create a new kind of power center: whoever controls the model and its data effectively controls protocol behavior. For open-source blockchain projects, that’s a direct clash with the ethos of transparency and decentralization. Distributed AI governance is an attempt to move decisions about models, training data, hyperparameters and deployment out of a single team’s hands and into verifiable, collectively managed processes on-chain. In practice, this means that rules for AI are encoded as smart contracts, enforcement is automated, and communities can audit and contest how models are trained, updated and used in production protocols.

Historical background: from opaque models to verifiable AI pipelines

Early blockchain + AI: cool demos, terrible governance

The first wave of “AI + blockchain” around 2016–2019 was mostly marketing: prediction markets with black-box oracles, trading bots wrapped in smart contracts, crypto-ML startups promising “decentralized intelligence.” Governance was almost entirely off-chain. A core team hosted the models, tweaked them at will, and the chain only saw final outputs. Technically this worked, but from a decentralization standpoint it looked like a centralized API that was merely exposed through a contract. Any notion of a decentralized AI governance platform for blockchains was more buzzword than reality.

As DeFi matured (AMMs, lending markets, derivatives), protocols started to lean on oracles and risk models more heavily. Once protocol TVL (total value locked) crossed into the billions of dollars, the governance implications became obvious: if one ML risk engine or anomaly detector can throttle liquidity or trigger circuit breakers, whoever controls that engine is a systemic actor. But the tooling remained basic: multisigs approving upgrades, off-chain committees reviewing models, and forum discussions with no binding logic on-chain.

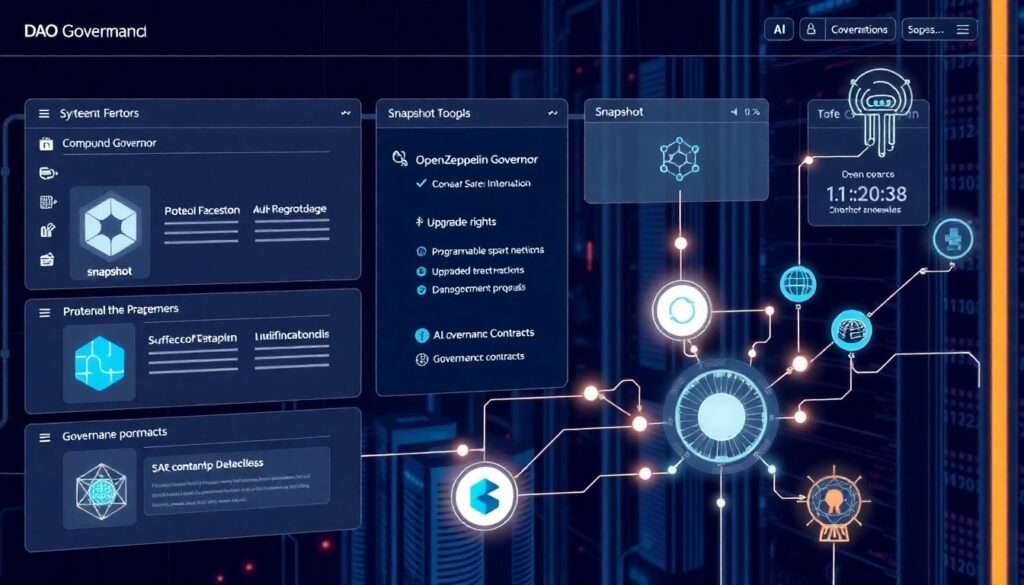

Rise of DAOs and on-chain policy for AI components

The DAO tooling boom (Compound Governor, OpenZeppelin Governor, Snapshot + Safe, etc.) gave open-source projects a template for structured decision-making. Teams began encoding protocol parameters and upgrade rights directly into governance contracts. At that point, integrating AI systems into governance workflows became the natural next step: proposals to deploy a new model, to switch off an old one, to modify data feeds, or to change inference frequency could be routed through the same mechanisms that already controlled interest rate curves or fee switches.

Parallel to that, enterprises experimenting with permissioned chains and “enterprise blockchain AI governance solutions” started demanding audit trails: who approved this model, what data was used, which compliance requirements were checked? That pushed the space toward explicit, machine-readable policies and verifiable logs of every AI-related governance event. Open-source ecosystems then picked up these patterns, adapting them to more adversarial, token-holder-driven environments, where sybil resistance and collusion became first-class concerns.

Basic principles of distributed AI governance

On-chain verifiability instead of blind trust

The core idea is simple: treat AI models as first-class protocol components with explicit, auditable lifecycle steps. Each step — proposal, review, testing, deployment, rollback — is mirrored on-chain. Instead of “the team updated the model and announced it on Discord,” you get a traceable chain of governance decisions: who proposed the new architecture, what evaluations were attached, which addresses voted, and under what quorum rules it passed. In a robust open source blockchain AI governance framework, the model’s metadata (version hash, training code commit, dataset signatures) is anchored to the chain, even if the heavy artifacts stay on IPFS, Arweave or other storage.
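As a minimal sketch of the anchoring step, the Python snippet below builds a metadata record and derives a single digest that a governance contract could store. The three fields mirror the metadata listed above; the `ModelMetadata` class, the canonical JSON encoding and the choice of SHA-256 are illustrative assumptions, not an established standard.

```python
import hashlib
import json
from dataclasses import dataclass, asdict


@dataclass(frozen=True)
class ModelMetadata:
    """Hypothetical record describing one model version."""
    version_hash: str          # digest of the model weights
    training_commit: str       # commit id of the training code
    dataset_signatures: tuple  # digests or signatures of the datasets used


def anchor_digest(meta: ModelMetadata) -> str:
    """Return a deterministic SHA-256 digest of the metadata record.

    Sorted keys and fixed separators give a canonical JSON encoding, so
    independent parties recompute the same digest from the same record.
    """
    payload = json.dumps(asdict(meta), sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


weights = b"example model weights"  # placeholder bytes, not a real model
meta = ModelMetadata(
    version_hash=hashlib.sha256(weights).hexdigest(),
    training_commit="abc1234",              # hypothetical commit id
    dataset_signatures=("sig-a", "sig-b"),  # hypothetical signatures
)
digest = anchor_digest(meta)  # the only value that needs to live on-chain
```

Only the digest goes on-chain. The weights, code and datasets stay in external storage, and anyone holding them can recompute the digest and compare it with the anchored value.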

This doesn’t magically solve model interpretability, but it creates a secure accountability layer: if a model starts behaving maliciously or showing bias, stakeholders can pinpoint the exact decision path that led to its deployment. That’s crucial for both permissionless DeFi protocols and regulated, consortium-style ledgers trying to blend AI automation with compliance obligations.

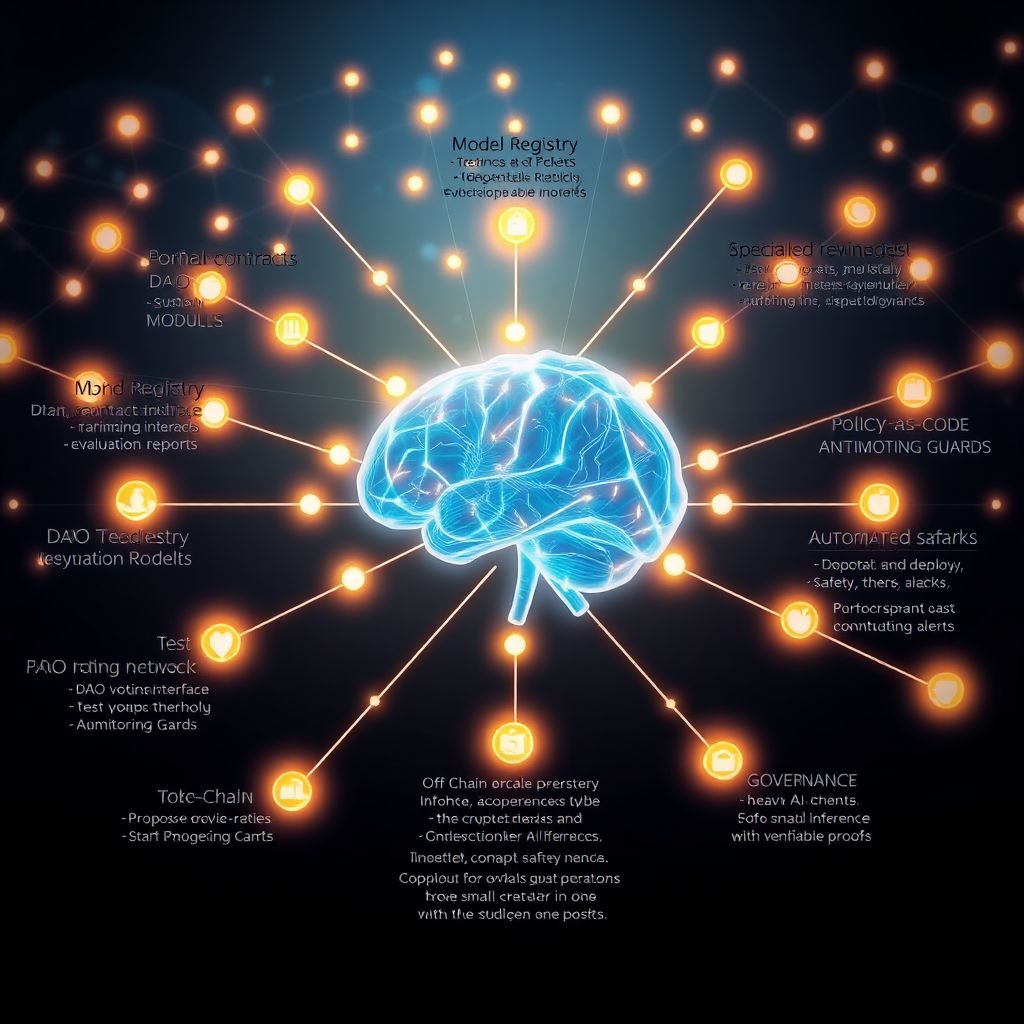

Multi-stakeholder control, not just token-voting

Pure token-voting is fragile when AI is involved. Large token holders might prefer short-term profit even if an unsafe model increases systemic risk. Distributed AI governance usually combines several control layers:

— Token-weighted votes for broad legitimacy and economic signaling.

— Role-based access control (RBAC) for specialized functions (e.g., risk stewards, model reviewers, compliance signers).

— Time locks and staged rollouts (canary deployments, shadow mode) to catch issues before full activation.

You can think of it as shared custody over AI components. A well-designed decentralized governance platform will typically implement separate “gates”: one for proposing and reviewing models, one for technical audits, and another for economic approval. This is closer to how critical infrastructure in traditional finance is controlled, just encoded as immutable governance logic.
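The separate gates can be sketched as independent checks that must all pass before deployment. This is a simplified Python model, not contract code; `ModelProposal`, the threshold values and the simple-majority rule are assumptions chosen for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class ModelProposal:
    """Hypothetical proposal moving through three independent gates."""
    reviewer_approvals: set = field(default_factory=set)  # model reviewers
    auditor_approvals: set = field(default_factory=set)   # technical auditors
    votes_for: int = 0       # token-weighted votes in favour
    votes_against: int = 0   # token-weighted votes against


def gates_passed(p, min_reviewers=2, min_auditors=1, quorum=1000):
    """Return the result of each gate separately."""
    turnout = p.votes_for + p.votes_against
    return {
        "review": len(p.reviewer_approvals) >= min_reviewers,
        "audit": len(p.auditor_approvals) >= min_auditors,
        "economic": turnout >= quorum and p.votes_for > p.votes_against,
    }


def can_deploy(p, **thresholds):
    """Deployment requires every gate to pass, not just the token vote."""
    return all(gates_passed(p, **thresholds).values())
```

Under these assumptions, a proposal with overwhelming token support but no reviewer or auditor sign-off still fails `can_deploy`. That is how layering gates limits the influence of large holders.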

Policy as code for AI lifecycle

Another pillar: “policy as code.” Instead of vague human-readable guidelines, you encode explicit constraints that the AI pipeline must respect:

— Maximum frequency and magnitude of parameter changes driven by an ML model.

— Mandatory simulation windows and stress tests before enabling an updated model on mainnet.

— Requirements to log input/output distributions and performance metrics into verifiable storage.

In enterprise settings, this means governance tooling that integrates with MLOps stacks, compliance engines and key management systems. In open-source DeFi, it means governance modules that won’t allow a new model to go live until its evaluation report (even if stored off-chain) has been anchored and checkpointed, and a minimum challenge period has passed. Policy as code doesn’t guarantee perfect behavior, but it raises the cost of reckless or malicious upgrades.
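To make the constraints above concrete, here is a hedged Python sketch of a policy check that a governance module might run before accepting a model-driven parameter update. The `UpdatePolicy` fields and their default values are hypothetical; a real protocol would set them through governance.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class UpdatePolicy:
    """Hypothetical limits on model-driven parameter updates."""
    max_relative_change: float = 0.10  # at most 10% change per update
    min_seconds_between: int = 3600    # at most one update per hour
    challenge_period: int = 86400      # wait after the report is anchored


def check_update(policy, old, new, now, last_update, report_anchored_at):
    """Return the list of violated rules; an empty list means allowed."""
    violations = []
    # A zero baseline has no meaningful relative change, so reject it.
    if old == 0 or abs(new - old) / abs(old) > policy.max_relative_change:
        violations.append("magnitude")
    if now - last_update < policy.min_seconds_between:
        violations.append("frequency")
    if report_anchored_at is None:
        violations.append("report_missing")
    elif now - report_anchored_at < policy.challenge_period:
        violations.append("challenge_period")
    return violations
```

Returning every violated rule, rather than stopping at the first, is a design choice that keeps rejected updates easy to audit.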

Different approaches to AI governance in open-source blockchain projects

1. Centralized governance with public artifacts

The simplest model, still common today, is: the core team owns the AI pipeline, publishes the model weights and inference code as open source, and maybe anchors hashes on-chain. Decisions about what to ship remain off-chain and centralized. This gives speed and technical coherence: a small, specialized team can iterate aggressively. It also makes incident response relatively easy, since there’s a clear operational owner. However, it contradicts decentralization claims: token holders and users effectively trust an opaque committee, and “governance” becomes more of a signaling process than an enforcement one.

In this approach, AI governance tools for Web3 and DeFi projects are often afterthoughts: dashboards, telemetry, and some basic voting hooks, but no hard stops. It’s attractive for early-stage protocols trying to find product-market fit, but over time it creates regulatory and reputational risk, because the on-chain story (“community-owned, permissionless system”) diverges from the off-chain reality (single point of AI control).

2. DAO-centric, on-chain-controlled AI modules

The opposite extreme is to wire every meaningful AI decision through a DAO. Here, a smart contract registry maintains references to approved models, oracle endpoints, or inference services. To change the active model, someone submits a proposal with metadata and evaluation proofs; token holders or delegates vote; if it passes, the registry updates, and contracts consuming AI outputs automatically read from the newly-approved endpoint.

This offers strong alignment with decentralization principles, but with trade-offs:

— Governance latency: time to change or patch a model is constrained by proposal windows, voting durations and timelocks.

— Expertise bottlenecks: most voters can’t independently assess ML architectures or subtle data quality issues.

— Voter apathy: routine adjustments drown in governance noise, leading to rubber-stamping or capture by a few active participants.

DAO-centric systems are compelling for long-lived, high-value DeFi protocols, especially when paired with expert councils or committees that pre-screen proposals. However, they can be overkill for smaller open-source projects where governance overhead can stall development.

3. Hybrid council + DAO + automation

The most practical pattern emerging today is a hybrid. A DAO retains ultimate authority but delegates specific, time-bounded powers to specialized bodies and automated constraints. Concretely:

— A “Model Governance Council” (elected by the DAO) can approve low-risk model updates within defined risk thresholds.

— Hard risk boundaries (max leverage, liquidation thresholds, credit limits) remain under full DAO control and can’t be changed by the council alone.

— Monitoring contracts automatically throttle or disable AI modules if they breach predefined performance or safety metrics, triggering an emergency review.

This hybrid model recognizes that AI operations require continuous tuning, while also acknowledging that core risk parameters must not move too quickly or under the influence of a small, unconstrained clique. It also allows the project to bring in external AI governance consultants, who can support the council with audits, benchmark design and stress testing without being given direct upgrade powers.
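The monitoring element of the hybrid pattern can be sketched as a small state machine. The `SafetyMonitor` class, the use of an error rate as the safety metric and both thresholds are assumptions for illustration; an actual protocol would define its own metrics and limits.

```python
from enum import Enum


class ModuleState(Enum):
    ACTIVE = "active"
    THROTTLED = "throttled"
    DISABLED = "disabled"


class SafetyMonitor:
    """Hypothetical monitor that downgrades an AI module on metric breaches."""

    def __init__(self, throttle_at=0.05, disable_at=0.10):
        self.throttle_at = throttle_at  # error rate that triggers throttling
        self.disable_at = disable_at    # error rate that disables the module
        self.state = ModuleState.ACTIVE
        self.review_requested = False   # set when an emergency review is due

    def observe(self, error_rate):
        """Update state from one observation; the state never auto-recovers."""
        if error_rate >= self.disable_at:
            self.state = ModuleState.DISABLED
            self.review_requested = True
        elif error_rate >= self.throttle_at and self.state is ModuleState.ACTIVE:
            self.state = ModuleState.THROTTLED
        return self.state
```

In this sketch the monitor only ever moves toward a safer state. Restoring a throttled or disabled module is deliberately left out, on the assumption that reactivation is a decision for the council or the DAO.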

How these approaches compare in practice

Security and resilience

Centralized governance with public artifacts is cheapest and fastest but has a single governance chokepoint: compromise the core team or its keys, and the AI system can be weaponized. DAO-centric designs distribute this risk but open up new attack surfaces: governance capture, vote-buying, and social engineering of token holders. Hybrid models aim for defense in depth: attackers must simultaneously bypass coded limits, capture or coerce expert councils, and manipulate token governance. For systemically important DeFi or cross-chain infrastructure, that layered structure tends to be more robust.

From an engineering standpoint, an open source blockchain AI governance framework that supports modular roles, pluggable voting mechanisms and configurable safety rails can give protocols a way to evolve from centralized to hybrid and then to more fully on-chain control as their security posture and community mature.

Scalability and maintenance overhead

Pure DAO control doesn’t scale if every model retrain or hyperparameter tweak requires a full on-chain vote. That’s especially true when AI systems need frequent updates to adapt to new market conditions, adversarial strategies or shifts in user behavior. Centralized governance obviously scales better operationally, but puts an implicit ceiling on how decentralized the protocol can credibly claim to be.

Hybrid systems mitigate this tension by categorizing change types:

— Routine, low-risk updates: handled by delegated roles with strict rate limits and reporting obligations.

— Medium-risk changes: require both delegated approval and delayed, vetoable notification to the DAO.

— High-risk or structural changes: full DAO votes, extensive impact analysis, and long timelocks.

This tiered approach lets AI components evolve at the pace of the environment while preserving community oversight for decisions that reshape the protocol’s risk surface.
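A tiered scheme like this boils down to a routing function. The sketch below is one possible reading in Python; the two inputs (`touches_hard_limits`, `relative_change`) and the cut-off values are hypothetical stand-ins for whatever risk classification a protocol actually adopts.

```python
from enum import Enum


class Tier(Enum):
    ROUTINE = "delegated role with rate limits and reporting"
    MEDIUM = "delegated approval plus delayed, vetoable DAO notice"
    HIGH = "full DAO vote with impact analysis and long timelock"


def classify_change(touches_hard_limits, relative_change,
                    routine_cap=0.02, medium_cap=0.10):
    """Route a proposed change to a governance tier.

    Anything touching hard risk limits is always HIGH, regardless of size.
    """
    if touches_hard_limits or relative_change > medium_cap:
        return Tier.HIGH
    if relative_change > routine_cap:
        return Tier.MEDIUM
    return Tier.ROUTINE
```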

Regulatory and reputational angles

Regulators increasingly care about explainability, accountability and control for AI systems that affect financial outcomes. Enterprise blockchain AI governance solutions usually lean into this by providing strong identity, auditable workflows, and documented approval chains. Public open-source ecosystems can’t simply copy-paste those mechanisms, but they can reuse the ideas: every on-chain AI-related decision becomes part of an immutable log, and the presence of defined governance roles and coded policies demonstrates that there is a responsible governance architecture, not unbounded automation.

In public markets, protocols that can show they have robust AI oversight — even if it’s token-based — may gain a trust premium over projects that treat AI as a black box bolted onto a governance-minimal DeFi protocol. Conversely, pretending to be fully decentralized while running a tightly held AI backend is a reputational time bomb once something goes wrong and the centralization becomes obvious.

Examples and emerging implementation patterns

Model registries and on-chain approvals

A common building block is an on-chain model registry contract. Each entry in the registry includes references to the model artifact (hash, storage pointer), training commit, evaluation artifacts, and a status flag (“proposed,” “approved,” “deprecated,” “revoked”). Other protocols or internal contracts can be configured to only interact with models in the “approved” state. This gives a simple yet powerful integration point: governance controls state transitions, while AI pipelines simply publish new candidates.
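The registry idea can be modeled off-chain in a few lines of Python. This is a sketch of the state machine only: the four status names come from the description above, while the `ALLOWED` transition table, the `ModelRegistry` class and the single `governor` identity are assumptions.

```python
# Assumed transition table: revocation is final, approval needs a proposal.
ALLOWED = {
    "proposed": {"approved", "revoked"},
    "approved": {"deprecated", "revoked"},
    "deprecated": {"revoked"},
    "revoked": set(),
}


class ModelRegistry:
    """Hypothetical registry: pipelines propose, governance changes status."""

    def __init__(self, governor):
        self.governor = governor  # identity allowed to change status
        self.status = {}          # model hash -> current status

    def propose(self, model_hash):
        """Anyone may publish a candidate; it starts as 'proposed'."""
        if model_hash in self.status:
            raise ValueError("model already registered")
        self.status[model_hash] = "proposed"

    def transition(self, caller, model_hash, new_status):
        """Only the governor may move a model to an allowed next status."""
        if caller != self.governor:
            raise PermissionError("only governance may change status")
        current = self.status[model_hash]
        if new_status not in ALLOWED[current]:
            raise ValueError(f"{current} -> {new_status} not allowed")
        self.status[model_hash] = new_status

    def is_usable(self, model_hash):
        """Consumers should only read from models in the approved state."""
        return self.status.get(model_hash) == "approved"
```

Consuming contracts would call the equivalent of `is_usable` before reading a model’s output, so an unknown or revoked model is rejected by default.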

In a more advanced setup, you can have multiple registries: one for risk models, another for pricing models, another for anomaly detectors. Each registry might have distinct governance rules and reviewer populations. This is where specialized AI governance tools for Web3 and DeFi projects become useful: they provide frontends, monitoring and analysis layers that speak both governance language (proposals, votes, quorums) and ML language (ROC curves, drift statistics, fairness metrics), helping communities understand what they are actually approving.

Oracles and off-chain computation networks

For heavy models, it’s often impractical to run inference entirely on-chain. Instead, protocols rely on oracle networks or specialized off-chain computation layers. In distributed AI governance, who gets to be an oracle operator and how their behavior is verified becomes critical. Approaches vary:

— Permissioned sets of oracle operators, whitelisted via governance, with on-chain slashing for misbehavior.

— Decentralized computation networks with staking, where operators run verifiable inference tasks and submit proofs or commitments.

— Hybrid schemes where a small set of reference oracles is overseen by a DAO, but additional independent verifiers monitor them.

Open-source projects experimenting with these designs often borrow from enterprise settings — e.g., multi-signature attestation workflows or risk committees — but adapt them to anonymous operators and open participation. That blend is where much of the innovation in distributed model execution is happening today.
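One simple way to make operator behavior checkable is a commit-reveal scheme: operators first publish a hash of their inference output, then reveal the output itself. The Python sketch below illustrates that generic technique and is not drawn from any particular oracle network; the function names and the rule for flagging operators are assumptions.

```python
import hashlib


def commitment(output: bytes, salt: bytes) -> str:
    """Hash the salted output; assumes salts have a fixed, agreed length."""
    return hashlib.sha256(salt + output).hexdigest()


def verify_reveal(committed: str, output: bytes, salt: bytes) -> bool:
    """Check that a revealed output matches the earlier commitment."""
    return commitment(output, salt) == committed


def operators_to_flag(commitments: dict, reveals: dict) -> set:
    """Return operators that never revealed or revealed a mismatching value.

    commitments: operator -> hex digest
    reveals:     operator -> (output, salt)
    """
    flagged = set()
    for operator, committed in commitments.items():
        reveal = reveals.get(operator)
        if reveal is None or not verify_reveal(committed, *reveal):
            flagged.add(operator)
    return flagged
```

Flagged operators would then be candidates for whatever penalty governance has defined, such as slashing. Note that this only shows an operator stuck to its committed answer, not that the answer was correct.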

External audits and expert review loops

Open governance doesn’t automatically create expertise. Many projects therefore rely on external specialists for review, especially for safety-critical or regulatory-sensitive AI modules. Rather than treating audits as one-off PDF reports, more advanced ecosystems encode them into the governance process:

— A proposal for a new AI component includes at least one independent assessment.

— The assessment’s hash and signers are recorded on-chain.

— A minimum number of expert approvals may be required before a DAO vote is even possible.

Projects that lack permanent internal ML teams can contract external AI governance consultants to bootstrap this layer. The key is to avoid turning consultants into de facto governors: they provide structured input, not unilateral decision power. Over time, some of this expertise can migrate into in-protocol committees or working groups, reducing external dependency.
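The expert-approval prerequisite can be expressed as a simple precondition on opening a vote. In this Python sketch, the `Assessment` record, the expert allowlist and the default of two approvals are hypothetical; signature verification is omitted and signers are represented by plain identifiers.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class Assessment:
    """Hypothetical on-chain record of one independent review."""
    report_hash: str  # digest of the off-chain audit report
    signer: str       # identifier of the expert who signed it


def vote_can_open(assessments, approved_experts, min_approvals=2):
    """Count distinct recognized signers; duplicates and unknowns are ignored."""
    signers = {a.signer for a in assessments if a.signer in approved_experts}
    return len(signers) >= min_approvals


report = hashlib.sha256(b"audit report contents").hexdigest()  # placeholder
experts = {"alice", "bob", "carol"}                             # hypothetical
submitted = [Assessment(report, "alice"), Assessment(report, "alice")]
ready = vote_can_open(submitted, experts)  # one distinct signer only
```

Counting distinct signers rather than submissions means a single reviewer cannot satisfy the threshold by filing the same assessment twice.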

Common misconceptions about distributed AI governance

“Putting it on-chain automatically makes it fair and safe”

One widespread misunderstanding is that simply anchoring AI model hashes or decisions on-chain solves governance problems. Transparency is valuable, but if decision rights remain concentrated — for example, in a team-controlled multisig — you’ve just wrapped centralization in a veneer of blockchain. Distributed AI governance has to deal with incentive structures, capture resistance, and clear separation of powers, not just record-keeping. Well-designed systems combine a transparent audit trail with explicit constraints on who can initiate actions and under what checks and balances.

Another nuance: fairness or lack of bias isn’t guaranteed by decentralization alone. If most token holders are aligned around profit maximization and are not themselves exposed to the harm a biased model does to minority groups, they may willingly approve biased models. That’s why principled governance sometimes needs hard-coded safety rails and independent risk or ethics committees with veto rights, calibrated to each protocol’s mission and regulatory exposure.

“Full democratization is always better than expert control”

There is also an opposite myth: that the only “truly decentralized” AI governance is one where everyone votes on everything. In practice, complex model evaluation requires technical literacy that most participants don’t have the time or background to acquire. Forcing raw token-voting on every technical decision either produces governance fatigue or leads people to follow a handful of vocal leaders, effectively recreating centralized decision-making under a different label.

A more realistic target is layered governance, where domain experts have well-defined, auditable powers, and the broader community has both oversight and the ability to reconfigure those powers if misused. Hybrid council + DAO models are not a compromise in principle; they’re an acknowledgment that different types of decisions demand different types of legitimacy and review depth.

“Open source automatically guarantees good governance”

Finally, many open-source blockchain projects assume that making code and models public is inherently sufficient. Open source is necessary but not sufficient. Without structured processes to evaluate, approve, and monitor AI components, you can end up with a messy, forked landscape where no one is clearly accountable for what actually runs on mainnet. An open source blockchain AI governance framework is about more than GitHub visibility: it’s about aligning incentives, automating guardrails, and ensuring that as models evolve, the pathway from idea to production remains collectively owned and inspectable.

In other words, distributed AI governance is not a checkbox but an evolving discipline. As models get more capable and protocols integrate AI deeper into their core logic, the difference between superficial decentralization and carefully architected, multi-layered governance will decide which systems remain resilient — and which ones become cautionary tales.