Why Web3 Needs Autonomous Agents in Digital Marketing

From manual hustle to on‑chain autopilot

Web3 moves 24/7, but most marketing teams still operate like it’s Web2: spreadsheets, Discord mods burning out, and campaigns that can’t react in real time to on‑chain data. Autonomous AI agents for Web3 marketing close this gap: they are persistent software entities that watch wallets, smart contracts, and social data, then trigger concrete actions (content, ads, incentives) without waiting for a human to click a button. Instead of yet another dashboard, you get a set of semi‑independent “micro‑marketers” that live close to the protocol layer and talk to users right when something meaningful happens on‑chain.

The real shift is that these agents don’t just schedule posts; they interpret context: who just bridged liquidity, who minted an NFT from a partner drop, which DAO passed a proposal. They can react by sending on‑chain rewards, DMing the right person, or spinning up a temporary campaign in a niche community. Done well, they turn chaotic crypto user flows into targeted, personalized experiences that feel almost like a human community manager who never sleeps and fully understands your smart contracts.

Required Stack and Tools for Autonomous Web3 Marketing

Core components: data, brains, actions, and identity

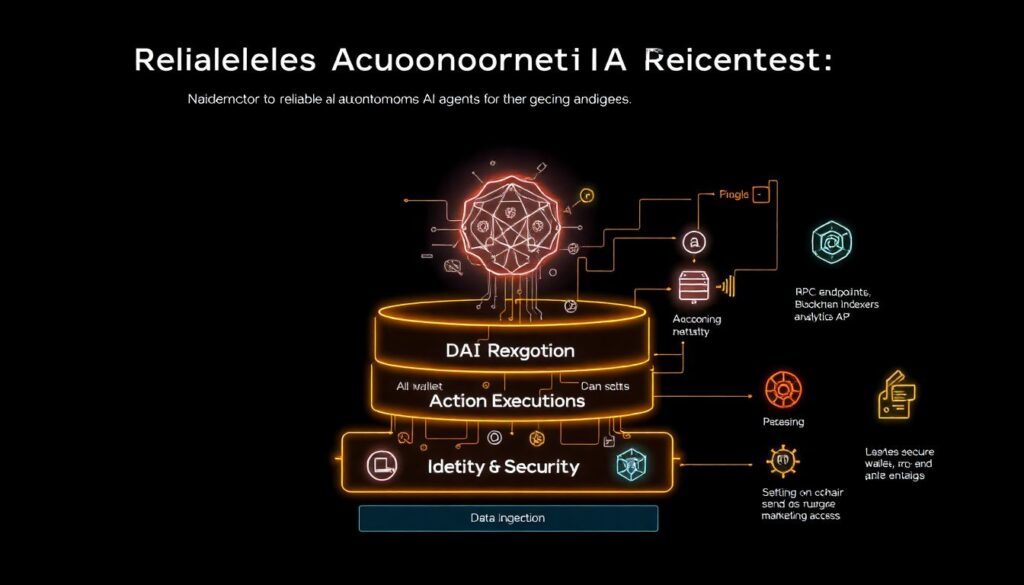

To build reliable autonomous AI agents for Web3 marketing, you need four core layers: data ingestion, AI reasoning, action execution, and identity/security. First, you’ll pull data from RPC endpoints, indexers, and analytics APIs so your agents see wallet activity, token transfers, swaps, and NFT events. Next, you plug this feed into a reasoning layer: large language models, vector databases for memory, and rule engines that encode guardrails (budget caps, forbidden actions, compliance constraints). Third, you connect action channels: Discord, Telegram, email, X (Twitter), plus smart contract interfaces so your agents can trigger on‑chain rewards or campaign logic. Finally, you anchor your agents’ identity with wallets, API keys, and permission scopes, treating them like semi‑trusted team members whose behavior is cryptographically auditable.
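As a rough illustration of how the four layers fit together, the sketch below wires a data event, a reasoning callback, a permission-scoped identity, and an audit log into one small class. Every name here (`ChainEvent`, `AgentIdentity`, `Agent`, the action strings) is hypothetical and stands in for whatever indexer, model host, and channel integrations you actually use.

```python
from dataclasses import dataclass, field
from typing import Callable, FrozenSet, List, Optional, Tuple


@dataclass(frozen=True)
class ChainEvent:
    """Data layer: one normalized on-chain event (hypothetical shape)."""
    wallet: str
    kind: str      # e.g. "swap", "nft_mint", "bridge"
    amount: float


@dataclass(frozen=True)
class AgentIdentity:
    """Identity layer: a name plus the actions this agent is scoped to."""
    name: str
    allowed_actions: FrozenSet[str]


@dataclass
class Agent:
    identity: AgentIdentity
    # Reasoning layer: any callable mapping an event to a proposed action name.
    decide: Callable[[ChainEvent], Optional[str]]
    audit_log: List[Tuple[str, str, bool]] = field(default_factory=list)

    def handle(self, event: ChainEvent) -> Optional[str]:
        """Action layer: return the action to execute, or None if nothing is allowed."""
        action = self.decide(event)
        if action is None:
            return None
        permitted = action in self.identity.allowed_actions
        # Record every proposal, permitted or not, so behavior stays auditable.
        self.audit_log.append((event.wallet, action, permitted))
        return action if permitted else None
```

In a real deployment the `decide` callable would wrap an LLM call and the returned action name would be dispatched to a channel or contract; here it only demonstrates that identity scopes are checked before anything leaves the agent.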

In practice, your Web3 marketing automation stack might include a multi‑chain indexer (for example, The Graph, a similar service, or bespoke ETL), an LLM host with function‑calling support, a serverless runtime for agent loops, and integrations with CRM and attribution platforms. You’re not building a monolith; you’re creating a modular nervous system that can be extended with new “skills” over time. The better you design this architecture from day one, the easier it is to spin up new agents specialized in retention, partnerships, or influencer outreach without reinventing infrastructure each time.

Optional but powerful: nonstandard tools worth considering

Beyond the usual analytics and CRM stack, it’s worth exploring some less obvious tools that give your agents superpowers. One unconventional piece is using decentralized storage (IPFS, Arweave) as a shared memory layer where agents publish and subscribe to campaign states, making coordination transparent and tamper‑evident. Another is integrating real‑time simulation environments where agents can “dry‑run” actions—such as price‑sensitive airdrops or NFT discounts—against historical data before touching mainnet, essentially building a marketing sandbox that behaves like the chain.

You can also treat some DeFi protocols themselves as configurable actuators for your agents: when a cohort’s engagement drops, an agent can automatically reallocate a small budget into a yield‑bearing vault reserved for future incentives. That way, unspent marketing funds don’t just sit idle; they generate yield while waiting for the next high‑intent opportunity. Combined, these nonstandard tools don’t just automate existing workflows; they let your autonomous stack manage capital, risk, and experimentation at a granular level that would be impossible for a human team to maintain manually.

Step‑by‑Step: Designing and Deploying Marketing Agents

1. Define agent roles with surgical precision

Before wiring anything, you need to specify exactly what each agent is allowed to care about and what it may do. Treat every agent like a narrowly scoped colleague: one for cold‑start user acquisition, one for on‑chain retention, one for partner ecosystem activation. Vague “do marketing” instructions create chaotic behavior, especially once agents start acting autonomously across multiple platforms.

This is where you translate marketing strategy into machine‑readable objectives: “Detect wallets that did at least three swaps in the last week but have not used our protocol,” or “monitor DAO voters who haven’t participated in two proposals and send context‑aware nudges.” Each objective becomes a set of triggers, data sources, and permissible actions. By constraining scope, you make it far easier to debug and iterate on behavior, and you reduce the risk of agents spamming channels or overspending budgets under ambiguous instructions.
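One way to make such an objective machine‑readable is a small, declarative role definition. The sketch below encodes the “at least three swaps in the last week, but never used our protocol” example; the class names, field names, and the `ACQUISITION` constant are illustrative assumptions, not a standard schema.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Tuple


@dataclass(frozen=True)
class WalletActivity:
    wallet: str
    swap_times: Tuple[datetime, ...]   # timestamps of swaps seen by the indexer
    used_our_protocol: bool


@dataclass(frozen=True)
class AgentRole:
    name: str
    data_sources: Tuple[str, ...]      # feeds the agent may read
    allowed_actions: Tuple[str, ...]   # the only actions it may take
    min_swaps: int
    window: timedelta

    def matches(self, activity: WalletActivity, now: datetime) -> bool:
        """Trigger: enough recent swaps, and no prior use of our protocol."""
        recent = [
            t for t in activity.swap_times
            if timedelta(0) <= now - t <= self.window
        ]
        return len(recent) >= self.min_swaps and not activity.used_our_protocol


# Hypothetical role for cold-start user acquisition.
ACQUISITION = AgentRole(
    name="cold_start_acquisition",
    data_sources=("dex_swap_events",),
    allowed_actions=("draft_outreach_message",),
    min_swaps=3,
    window=timedelta(days=7),
)
```

Keeping the trigger, data sources, and allowed actions in one object makes it easy to review an agent’s scope at a glance and to unit‑test the trigger separately from any model calls.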

2. Map the full data and action surface

Once roles are clear, you create detailed maps of both inputs and outputs. On the input side, list the contracts, events, metrics, and social feeds that each agent must understand. On the output side, specify exactly which endpoints it can hit: Discord webhooks, email providers, ad API segments, minting contracts, referral registries. This mapping step is often skipped, but it’s what turns generic LLMs into precise AI agents for crypto project promotion that actually respect your business rules.

A good practice is to design your agents around “skills,” each represented as a well‑documented function: classify wallet behavior, group addresses into cohorts, draft an outreach message, compute incentive amounts, or schedule a post. The agent’s core loop becomes orchestrating these skills based on its goals and the environment state. Because each skill is independently testable, you can iterate on the logic without tearing apart the whole agent. Over time, you get a library of reusable capabilities that any future agent can plug into, greatly speeding up new campaign development.
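A minimal sketch of the “skills” idea, assuming a plain in‑process registry: each skill is an ordinary function registered by name, and the agent loop only orchestrates them. The decorator, the skill names, and the swap threshold are hypothetical choices for illustration.

```python
from typing import Callable, Dict, List

# Registry of reusable skills, keyed by function name.
SKILLS: Dict[str, Callable] = {}


def skill(fn: Callable) -> Callable:
    """Register a function as a named skill and return it unchanged."""
    SKILLS[fn.__name__] = fn
    return fn


@skill
def classify_wallet(swap_count: int) -> str:
    """Classify wallet behavior from a simple activity count."""
    return "active_trader" if swap_count >= 3 else "casual"


@skill
def draft_outreach(wallet: str, cohort: str) -> str:
    """Draft a message; a real skill might call an LLM here."""
    return f"Hi {wallet}, we noticed you are an {cohort.replace('_', ' ')}."


def run_agent(swaps_by_wallet: Dict[str, int]) -> List[str]:
    """Core loop: orchestrate skills based on the environment state."""
    messages = []
    for wallet, swaps in swaps_by_wallet.items():
        cohort = SKILLS["classify_wallet"](swaps)
        if cohort == "active_trader":
            messages.append(SKILLS["draft_outreach"](wallet, cohort))
    return messages
```

Because `classify_wallet` and `draft_outreach` are plain functions, each can be tested on its own, and a second agent can reuse them by looking them up in `SKILLS`.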

3. Build an on‑chain aware brain, not just a chatbot

The biggest mistake teams make is treating marketing agents as fancy chatbots. In Web3, your agents must be stateful and on‑chain aware. That means maintaining per‑wallet and per‑community memory, storing it in a vector database or specialized CRM that indexes both on‑chain and off‑chain events. When a wallet returns after months, your agent should instantly remember previous interactions, campaign history, and risk flags, instead of starting every conversation from scratch.

To make this work, combine an LLM with a compact rule layer. The LLM handles the fuzzy parts (tone, context, personalization), while rules enforce hard constraints like “never offer more than X in incentives per address per month” or “only propose NFT rewards after a user has passed KYC with the partner.” This hybrid design preserves creativity and adaptability while giving your compliance and finance teams peace of mind. In other words, the agent thinks probabilistically but acts deterministically where it matters.
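To make the split concrete, here is a small sketch of a deterministic rule that sits between a model’s proposal and execution. It enforces the “no more than X per address per month” constraint; the `Proposal` shape and the `IncentiveCapRule` class are assumptions for illustration, and the proposal would normally come from an LLM rather than being built by hand.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import DefaultDict, Tuple


@dataclass(frozen=True)
class Proposal:
    """What the (probabilistic) model suggests doing."""
    wallet: str
    month: str        # e.g. "2024-05"
    incentive: float  # amount in the campaign's token or currency
    message: str


class IncentiveCapRule:
    """Deterministic guardrail: cap incentives per wallet per month."""

    def __init__(self, monthly_cap: float) -> None:
        self.monthly_cap = monthly_cap
        self._spent: DefaultDict[Tuple[str, str], float] = defaultdict(float)

    def approve(self, proposal: Proposal) -> bool:
        key = (proposal.wallet, proposal.month)
        if self._spent[key] + proposal.incentive > self.monthly_cap:
            return False  # reject without touching the running total
        self._spent[key] += proposal.incentive
        return True
```

The model is free to vary tone and wording in `message`, but whether the incentive is actually sent depends only on the rule’s arithmetic.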

4. Connect channels and test in constrained environments

Now you connect your agents to real channels, but gradually. Start in “shadow mode”: they generate actions, but a human approves them. This gives you clean training data on what’s acceptable and surfaces edge cases. Once you’re comfortable, allow partial autonomy in low‑risk domains, like posting in a test Discord channel or sending tiny on‑chain rewards to a small user subset.

A good rollout model is: 1) simulations on historical data, 2) shadow mode with human review, 3) capped autonomous mode with strict budgets and frequency limits, and 4) fully autonomous operations with periodic audits. Throughout this process, log everything the agent sees and does: prompts, function calls, on‑chain transactions, and message IDs. These logs become your best debugging tool later, especially when you start layering multiple agents that may interact in non‑obvious ways across your stack.
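The four rollout stages can be expressed as an explicit gate in front of every action. The following is a minimal sketch under assumed names (`Stage`, `dispatch`, the outcome strings); it logs each decision and leaves frequency limits and real execution out of scope.

```python
from enum import IntEnum
from typing import Dict, List


class Stage(IntEnum):
    SIMULATION = 1   # replay on historical data only
    SHADOW = 2       # agent proposes, a human approves
    CAPPED = 3       # autonomous within a strict budget
    AUTONOMOUS = 4   # autonomous, reviewed in periodic audits


def dispatch(stage: Stage, cost: float, budget_left: float,
             human_approved: bool, log: List[Dict]) -> str:
    """Decide what happens to a proposed action at the current rollout stage."""
    if stage == Stage.SIMULATION:
        outcome = "simulated"
    elif stage == Stage.SHADOW:
        outcome = "executed" if human_approved else "queued_for_review"
    elif stage == Stage.CAPPED:
        outcome = "executed" if cost <= budget_left else "blocked_by_budget"
    else:
        # Assumption: hard guardrails still run in a separate validation layer.
        outcome = "executed"
    # Log every decision so later debugging can reconstruct what happened.
    log.append({"stage": stage.name, "cost": cost, "outcome": outcome})
    return outcome
```

Promoting an agent then means changing a single `Stage` value, which keeps the rollout decision visible and easy to revert.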

5. Close the loop with attribution and learning

Autonomy without feedback just accelerates guesswork. You need a feedback loop where the outcomes of each agent’s decisions feed back into its memory and your analytic models. Use tracking links, on‑chain tags (memo fields, event payloads), and CRM updates to tie each conversion, retention event, or failed attempt back to a specific agent and decision path.

Once the plumbing is in place, start evaluating performance with hard metrics: LTV‑to‑CAC by cohort, reactivation rate, churn reduction, and partner revenue influenced. Feed this data into a lightweight reinforcement learning or bandit framework, so the agent can automatically adjust messaging, incentives, and channel mix. Done properly, you get a marketing system that steadily self‑optimizes, instead of constantly restarting experimentation whenever a new campaign owner joins the project.
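As one possible starting point for the bandit framework mentioned above, here is a compact epsilon‑greedy sketch. The arm names and the reward definition (for example, 1.0 for a reactivated wallet and 0.0 otherwise) are assumptions; production systems often use more sample‑efficient methods such as Thompson sampling.

```python
import random
from typing import Dict, Iterable, Optional


class EpsilonGreedyBandit:
    """Pick among message or incentive variants, favoring the best so far."""

    def __init__(self, arms: Iterable[str], epsilon: float = 0.1,
                 seed: Optional[int] = None) -> None:
        self.arms = list(arms)
        self.epsilon = epsilon
        self.pulls: Dict[str, int] = {arm: 0 for arm in self.arms}
        self.mean_reward: Dict[str, float] = {arm: 0.0 for arm in self.arms}
        self._rng = random.Random(seed)

    def choose(self) -> str:
        # Explore with probability epsilon, otherwise exploit the best arm.
        if self._rng.random() < self.epsilon:
            return self._rng.choice(self.arms)
        return max(self.arms, key=lambda arm: self.mean_reward[arm])

    def update(self, arm: str, reward: float) -> None:
        # Incremental mean: new_mean = old_mean + (reward - old_mean) / n
        self.pulls[arm] += 1
        n = self.pulls[arm]
        self.mean_reward[arm] += (reward - self.mean_reward[arm]) / n
```

A typical loop calls `choose()` before each outreach, records the attributed outcome, and passes it to `update()`, so the channel and message mix drifts toward what actually converts.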

Nonstandard Use Cases and Creative Architectures

Agent‑driven, on‑chain referral economies

Traditional referral programs in crypto often devolve into bot farms and Sybil attacks. Autonomous agents give you a way out by letting you run referral systems as living, adaptive organisms. For example, an agent can continuously scan on‑chain patterns to estimate the “health” of new users that a referrer brings in: do they trade, stake, vote, or simply dump and disappear? Based on this live score, the agent automatically adjusts referral payouts, gradually starving low‑quality funnels and amplifying genuine advocates.
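A toy version of such a health score might look like the sketch below. The equal weighting of trading, staking, and voting, and the rule that a user who dumps and leaves scores zero, are illustrative assumptions rather than a recommended model.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class ReferredUser:
    traded: bool
    staked: bool
    voted: bool
    dumped_and_left: bool


def health_score(users: Sequence[ReferredUser]) -> float:
    """Average engagement of a referrer's users, from 0.0 to 1.0."""
    if not users:
        return 0.0
    total = 0.0
    for user in users:
        if user.dumped_and_left:
            continue  # contributes zero to the average
        total += (int(user.traded) + int(user.staked) + int(user.voted)) / 3
    return total / len(users)


def referral_payout(base_payout: float, users: Sequence[ReferredUser],
                    floor: float = 0.0) -> float:
    """Scale the payout by referral quality, never below `floor` of base."""
    return base_payout * max(floor, health_score(users))
```

With this shape, a referrer whose users all dump and disappear sees payouts shrink toward the floor, while a referrer bringing in engaged users keeps close to the full base payout.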

You can take this further by letting agents negotiate micro‑deals with power users in DMs: instead of a static link, a wallet with a strong history might be offered a custom revenue share, temporary commission boost, or access to niche features. The key is that none of this requires your human team to keep spreadsheets of affiliates; the agent uses objective on‑chain data and pre‑defined constraints to shape a referral economy that rewards alignment rather than noise.

Autonomous “micro‑agencies” for DAOs and protocols

Imagine a DAO that doesn’t hire a single full‑time marketer but spins up a small swarm of autonomous agents that collectively behave like a blockchain marketing agency. Each agent focuses on a specific territory: one is tuned to Asian communities and local platforms, one watches DeFi Twitter and responds in real time, and another monitors governance forums and spins up explanatory threads whenever a complex proposal appears.

The twist is that these agents can be controlled and funded through on‑chain governance. Token holders can allocate budgets, adjust guardrails, or even vote to “fire” an underperforming agent and reallocate its functions to a new one. You effectively treat marketing infrastructure as a set of modular, upgradeable smart contracts with AI front‑ends, rather than a single opaque agency contract. This opens up transparency and accountability models that are hard to replicate in Web2.

Marketing agents as co‑founders of early‑stage Web3 startups

For early Web3 teams that are primarily engineering‑driven, spinning up agents instead of immediately hiring a large go‑to‑market team can be surprisingly effective. On decentralized marketing platforms for Web3 startups, you can deploy agents that watch every on‑chain interaction with your dApp from day one, then automatically segment and nurture users, propose collaborations to other small protocols, and surface real‑time feedback for the core team.

In this setup, your “marketing stack” becomes an always‑on co‑founder that never gets tired of writing docs, answering basic questions, or debugging friction in your funnel. It routes only the most complex, strategic decisions to the human founders. As the project scales, you can gradually replace some of these automated functions with specialized human roles, but by then you’ll already have a data‑rich, battle‑tested system for lead scoring, community education, and cross‑protocol partnerships that no conventional launch agency could have built from the outside.

Troubleshooting and Hard Lessons From Real Deployments

Typical failure modes and how to spot them early

When teams first deploy autonomous AI agents for Web3 marketing, a few recurring issues show up. The first is “over‑eagerness”: agents posting too often, reusing similar copy, or handing out incentives too aggressively because the optimization objective is mis‑specified. Another is blind spots: the model interprets on‑chain metrics but misses social context (e.g., a community drama or regulatory announcement) and responds in a way that feels tone‑deaf.

To detect these problems early, monitor leading indicators rather than waiting for a full quarterly report. Track complaint rates in community channels, muted or blocked agent accounts, spam reports, bounce rates, and cost per incremental on‑chain action. If any of these spike, you likely have a configuration or prompting issue. Establish “panic buttons” that instantly pause certain classes of actions (for example, cold outreach or on‑chain rewards) without shutting down the entire system, so you can investigate calmly while limiting blast radius.
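A “panic button” can be as simple as a per‑action‑class pause flag that trips when a leading indicator crosses a threshold. The sketch below assumes complaint rate as the indicator and uses hypothetical action class names; the thresholds are placeholders, not recommendations.

```python
from typing import Dict, Set


class PanicSwitch:
    """Pause individual classes of actions without stopping the whole system."""

    def __init__(self, max_complaint_rate: Dict[str, float]) -> None:
        # Maps an action class to the highest complaint rate it may reach.
        self.max_complaint_rate = max_complaint_rate
        self.paused: Set[str] = set()

    def report(self, action_class: str, complaint_rate: float) -> None:
        """Feed in a fresh indicator reading; pause the class if it spikes."""
        limit = self.max_complaint_rate.get(action_class)
        if limit is not None and complaint_rate > limit:
            self.paused.add(action_class)

    def resume(self, action_class: str) -> None:
        """Manual resume after a human has investigated."""
        self.paused.discard(action_class)

    def allows(self, action_class: str) -> bool:
        return action_class not in self.paused
```

Agents would call `allows()` before each action, so pausing cold outreach leaves on‑chain rewards and other action classes running while you investigate.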

Debugging agents that touch both on‑chain and off‑chain systems

Debugging agents that operate across smart contracts and Web2 APIs is nontrivial because failures can originate anywhere in the pipeline. You might see an agent stop sending rewards not because the logic is wrong, but because a gas estimator fails on a particular network configuration, or a third‑party API changed rate limits without notice. When this happens, resist the urge to “just tweak the prompt.” Instead, adopt a structured debugging protocol that mirrors software engineering practices.

1. Reproduce the issue in a controlled environment with the exact input state (wallet balances, contract addresses, API responses).

2. Inspect the agent’s decision trace: model outputs, function calls, error messages, and any fallbacks.

3. Isolate whether the failure is logical (bad strategy), infrastructural (downed service or RPC), or policy‑related (guardrails blocking an action).

4. Patch the smallest layer possible: skill function, rule definition, or infrastructure config.

5. Add regression tests so that future agent updates rerun this scenario automatically.

This iterative, test‑driven approach prevents you from masking deep misconfigurations with superficial prompt changes that only work until the next edge case appears.
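Steps 3 and 5 of the protocol above can be sketched as a small triage function plus a regression test. The decision trace format (a list of dicts with `status` and `source` keys) and the status strings are assumptions made for this example; adapt them to whatever your agent actually logs.

```python
from enum import Enum
from typing import Dict, List


class FailureKind(Enum):
    LOGICAL = "logical"                  # bad strategy
    INFRASTRUCTURAL = "infrastructural"  # downed service or RPC
    POLICY = "policy"                    # a guardrail blocked the action


# Hypothetical component names that count as infrastructure.
INFRA_SOURCES = {"rpc", "gas_estimator", "third_party_api"}


def classify_failure(trace: List[Dict[str, str]]) -> FailureKind:
    """Triage a failed run from its recorded decision trace."""
    for step in trace:
        if step.get("status") == "blocked_by_guardrail":
            return FailureKind.POLICY
    for step in trace:
        if step.get("status") == "error" and step.get("source") in INFRA_SOURCES:
            return FailureKind.INFRASTRUCTURAL
    # Nothing errored and nothing was blocked: the strategy itself is suspect.
    return FailureKind.LOGICAL


def test_gas_estimator_outage_is_infrastructural() -> None:
    """Regression test capturing a previously seen failure scenario."""
    trace = [
        {"status": "ok", "source": "llm"},
        {"status": "error", "source": "gas_estimator"},
    ]
    assert classify_failure(trace) is FailureKind.INFRASTRUCTURAL
```

Saving each real incident’s trace as a test like this means future agent updates rerun the scenario automatically, for example under `pytest`.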

Guardrails, ethics, and avoiding reputational blowups

Because marketing agents directly interact with users and manage budgets, they can cause reputational and legal damage if misaligned. You must encode explicit constraints: no making financial promises, no simulating official regulatory guidance, no targeting jurisdictions under sanctions or restricted by your compliance policy. These aren’t just “nice to haves”; they should be enforced at runtime via validation layers that inspect proposed actions before they leave your system.
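A runtime validation layer can start as a function that inspects each outgoing message and returns a list of violations. The phrase pattern and the `"XX"` jurisdiction code below are placeholders only; real lists should come from your compliance policy, and a keyword filter alone will not catch every financial promise.

```python
import re
from typing import FrozenSet, List

# Placeholder pattern for phrases that read as financial promises.
FINANCIAL_PROMISE = re.compile(
    r"\b(guaranteed|risk[- ]free|cannot lose)\b", re.IGNORECASE
)

# Placeholder set; populate from your compliance policy.
RESTRICTED_JURISDICTIONS: FrozenSet[str] = frozenset({"XX"})


def validate_message(text: str, jurisdiction: str) -> List[str]:
    """Return the violations found; an empty list means the message may be sent."""
    violations = []
    if FINANCIAL_PROMISE.search(text):
        violations.append("financial_promise")
    if jurisdiction in RESTRICTED_JURISDICTIONS:
        violations.append("restricted_jurisdiction")
    return violations
```

Running every proposed action through a check like this before it leaves your system gives you a single place to add new constraints and a clear record of why something was blocked.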

Plan for worst‑case scenarios upfront. Assume that at some point, your agents will misinterpret a meme, promote a partner that later rugs, or send an offer to a user caught in a hack. Build incident playbooks that specify how to roll back or compensate affected wallets, how to issue public explanations, and how to update your agent’s training data or rules to prevent repetition. In a market this volatile, reputational resilience is as important as conversion rate, and your AI stack needs to reflect that from day zero.

Putting It All Together: A Practical Path Forward

From experiment to core infrastructure

The quickest way to get started is not to “AI‑ify everything,” but to pick one narrow, high‑leverage use case and let an agent own it end‑to‑end. For example, design a retention agent that tracks dormant wallets and sends context‑specific nudges plus targeted on‑chain incentives. Give it a small, clearly defined budget and a limited channel set, then iterate hard on data sources, prompts, and guardrails for a month.

Once that’s stable and delivering measurable impact, you can introduce adjacent agents (partner activation, community onboarding, governance education), reusing your underlying infrastructure and skills library. Over time, your scattered tools and dashboards start to converge into a coherent mesh of Web3 marketing automation tools where humans define strategy and constraints, and agents do the heavy tactical lifting. The long‑term goal isn’t to replace your marketing team; it’s to give them a continuously learning, on‑chain native exoskeleton that matches the speed and complexity of Web3 itself.