Why AI-assisted governance audits matter in 2025

AI has quietly moved from a nice-to-have tool into the backbone of serious blockchain audit services, especially for projects that handle real money and on-chain governance. Governance here means the full lifecycle of how decisions are proposed, voted on and executed in a protocol: from DAO votes and on-chain councils to parameter changes run by multisigs. An AI-assisted governance audit focuses not only on code safety, but also on power distribution, voting mechanics, incentive design and operational procedures. In 2025, with DAOs managing billions and protocols hard-forking governance logic into L2s and appchains, manual review is no longer enough; teams need continuous, automated insight into how their governance system actually behaves under real-world pressure and adversarial strategies.

Key definitions: governance, audits and where AI fits

To stay on the same page, let’s pin down a few terms. A governance audit is a structured review of the rules, processes and technical controls that determine who can change what in a blockchain system and under which conditions. It complements a classic smart contract security audit, which digs into the code for bugs, vulnerabilities and logic flaws. Governance audits track voting power distribution, upgrade permissions, quorum thresholds, veto rights, treasury controls and emergency procedures. AI assistance means using machine learning models, graph analysis and language models to read contracts, proposals and forums, then surface risks and anomalies. Combined, these AI-powered blockchain security solutions can simulate attack paths, detect centralization of power and highlight misaligned incentives long before they crystallize into real governance failures.

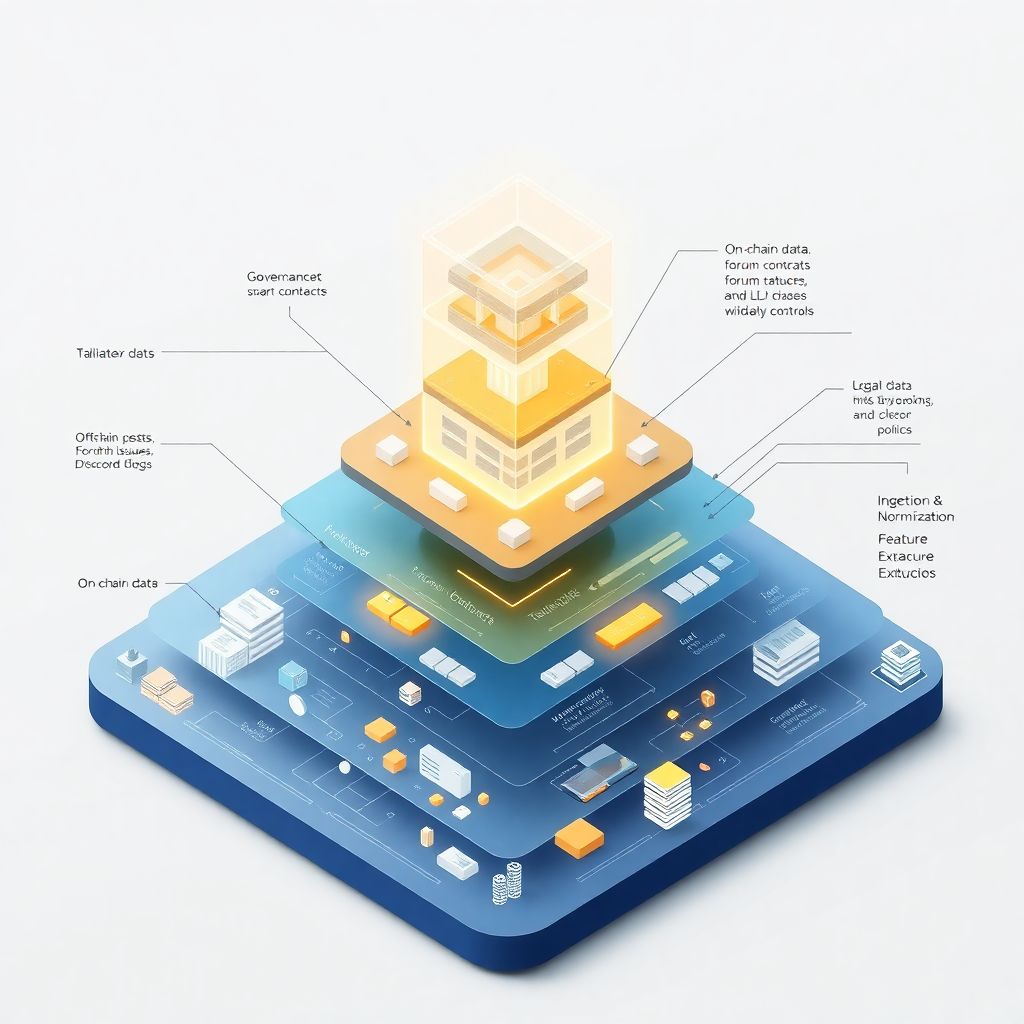

Text-based architecture diagram of an AI-assisted audit stack

To move beyond abstractions, let’s lay out the architecture of such a solution as a text diagram. [Diagram: Data Layer → (On-chain data: governance contracts, votes, token balances; Off-chain data: forum posts, GitHub, Discord logs; Legal docs and policies) → Ingestion & Normalization Engine → Feature Extraction (voting graphs, role mappings, parameter timelines) → AI Models (LLMs for text, graph ML for power maps, anomaly detection on metrics) → Governance Risk Scoring Engine → Reporting & Dashboard Layer → Users (core team, DAO, regulators, external auditors)]. This textual “blueprint” shows that an AI-assisted audit is not a single magic model block but a pipeline: it runs from a raw on-chain snapshot and noisy forum discussion to formalized risk indicators that can be tracked in a dashboard and factored into decisions.
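As a rough illustration of the pipeline idea, here is a minimal sketch in Python. The stage names (`ingest`, `extract_features`, `score_risk`), the input format and the 50% threshold are all assumptions made for this example; the threshold rule is only a placeholder for the AI models in the diagram, not a real scoring engine.

```python
from typing import Any, Callable, Dict, List

# Each stage takes the pipeline state and returns an enriched copy.
Stage = Callable[[Dict[str, Any]], Dict[str, Any]]


def ingest(state: Dict[str, Any]) -> Dict[str, Any]:
    """Normalize raw vote records (hypothetical format): lowercase addresses, numeric weights."""
    votes = [
        {"voter": v["voter"].lower(), "weight": float(v["weight"])}
        for v in state["raw_votes"]
    ]
    return {**state, "votes": votes}


def extract_features(state: Dict[str, Any]) -> Dict[str, Any]:
    """Derive one simple feature: the share of voting weight held by the largest voter."""
    total = sum(v["weight"] for v in state["votes"])
    top = max(v["weight"] for v in state["votes"])
    return {**state, "top_voter_share": top / total}


def score_risk(state: Dict[str, Any]) -> Dict[str, Any]:
    """Placeholder rule standing in for the model layer (assumed 50% cutoff)."""
    level = "high" if state["top_voter_share"] > 0.5 else "low"
    return {**state, "risk": level}


def run_pipeline(state: Dict[str, Any], stages: List[Stage]) -> Dict[str, Any]:
    """Run the stages in order, feeding each one the output of the previous."""
    for stage in stages:
        state = stage(state)
    return state


# Made-up input data for illustration only.
raw = {"raw_votes": [{"voter": "0xAbC", "weight": "600"},
                     {"voter": "0xDEF", "weight": "400"}]}
result = run_pipeline(raw, [ingest, extract_features, score_risk])
print(result["risk"], result["top_voter_share"])
```

Keeping each stage a plain function over a shared state makes it easy to swap the placeholder rule for a real model later without touching ingestion or reporting.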

How AI-assisted governance audits differ from traditional audits

If you’ve ever ordered classic blockchain audit services, you know the pattern: you send scope, auditors review code, you get a PDF with issues and severities. AI-assisted governance audits break this linear rhythm. Instead of a one-off code pass, the audit engine is wired directly to node endpoints, subgraphs and community feeds, creating a living representation of the protocol’s governance over time. A traditional smart contract security audit mainly answers “can an attacker steal funds now?” AI-driven governance analysis asks instead, “who can change the rules tomorrow, how quickly, and under what social and economic conditions might they actually do it?” This dynamic lens reveals slow-moving centralization, cartel formation among validators, or proposal patterns that signal governance capture long before they become headline exploits or regulatory nightmares.

Examples: DAO treasuries, L2 sequencers and validator sets

Let’s ground this in real-world examples. Consider a DAO treasury managing nine digits of stablecoins. Manual auditors can check multisig thresholds and basic role separation, but AI models ingest voting history, forum debate sentiment and social graphs of delegates to detect whether a small clique effectively dictates outcomes. For a rollup with an upgradable bridge and centralized sequencer, governance risk models simulate scenarios where a compromised multisig pushes a malicious upgrade under low voter turnout. In PoS networks, AI can map validator self-bond and delegation patterns across chains to surface hidden control by a single entity. These are the kinds of situations where blockchain governance risk assessment services benefit from continuous modeling of actors, incentives and history, rather than an occasional policy review that is frozen in time and quickly made obsolete by changing token distributions.
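One simple, non-AI baseline for the "small clique" question is a Nakamoto-style coefficient: the smallest number of delegates whose combined voting power exceeds half of the total. The sketch below is only an illustration; the delegate names and balances are made up, and a real audit would pull voting power from on-chain data and account for linked addresses.

```python
from typing import Dict


def nakamoto_coefficient(voting_power: Dict[str, float], threshold: float = 0.5) -> int:
    """Smallest number of holders whose combined power exceeds `threshold` of the total."""
    total = sum(voting_power.values())
    if total <= 0:
        raise ValueError("total voting power must be positive")
    running = 0.0
    # Walk holders from largest to smallest, accumulating power.
    for count, power in enumerate(sorted(voting_power.values(), reverse=True), start=1):
        running += power
        if running > threshold * total:
            return count
    return len(voting_power)


# Hypothetical delegate balances, for illustration only.
delegates = {"alice": 400, "bob": 250, "carol": 150, "dave": 120, "erin": 80}
print(nakamoto_coefficient(delegates))  # alice + bob hold 650 of 1000, so this prints 2
```

A low value suggests that a handful of delegates can decide outcomes on their own, which is the kind of signal the richer graph and sentiment models would then try to explain.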

Compliance angle: regulators, policies and automated checks

By 2025, regulators in major jurisdictions no longer ignore on-chain votes and DAO constitutions; they treat them as part of a project’s internal control framework. Here AI-assisted governance audits intersect with the crypto project compliance audit domain. Instead of manually verifying that each change follows KYC/AML policies or regional restrictions, AI systems parse governance proposals, compare them against regulatory baselines and flag potential breaches. For example, if a DAO vote attempts to whitelist high-risk jurisdictions for fiat on-ramps, the model can highlight conflict with the project’s published compliance policy. This doesn’t replace legal counsel, but it sharply reduces the volume of routine checks and surfaces nuanced edge cases, enabling lawyers and compliance officers to focus on judgment calls instead of copy-pasting through dense governance archives.
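To make the whitelist example concrete, here is a deliberately simple rule-based sketch. It is a keyword match standing in for the language-model parsing described above, not a substitute for it. The jurisdiction names are fictional, and `flag_restricted_jurisdictions` is a hypothetical helper invented for this example.

```python
import re
from typing import List, Set

# Hypothetical compliance policy: fictional jurisdiction names the project restricts.
RESTRICTED: Set[str] = {"Freedonia", "Sylvania"}


def flag_restricted_jurisdictions(proposal_text: str, restricted: Set[str]) -> List[str]:
    """Return restricted jurisdictions mentioned in a proposal (case-insensitive, whole word)."""
    hits = []
    for name in sorted(restricted):
        if re.search(rf"\b{re.escape(name)}\b", proposal_text, flags=re.IGNORECASE):
            hits.append(name)
    return hits


proposal = "Proposal 42: whitelist Freedonia for fiat on-ramps via partner gateway."
print(flag_restricted_jurisdictions(proposal, RESTRICTED))
```

A match here only means "route this proposal to a compliance reviewer". Whether the proposal actually conflicts with policy remains a human judgment call.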

AI-powered risk models vs static rulebooks

Traditional governance guidelines usually live in Markdown docs and legal PDFs, implemented as static rulebooks that quickly drift from reality. AI-powered blockchain security solutions close this gap by embedding the rules as machine-readable constraints and probabilistic models. Instead of simply verifying that the quorum is set to 20%, the audit engine tracks how often that threshold is actually met, under which market conditions it fails, and whether a single whale could swing outcomes if liquidity shifts to a new L2. Compared to a static checklist-based review, these models capture emergent behavior: collusion patterns, time-zone gaming of proposal windows, or subtle framing in proposal titles that correlates with voter apathy. Such insights give governance designers concrete levers to tweak, from dynamic quorum to delegated vetoes, rather than vague recommendations to “improve transparency” or “diversify delegates”.
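The quorum point can be sketched with a few lines of Python. Everything here is illustrative: the `ProposalTally` structure, the tallies and the 20% quorum are assumptions, and the whale check assumes the whale has not yet voted and would vote entirely for the losing side.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ProposalTally:
    votes_for: float
    votes_against: float
    total_supply: float


def turnout(p: ProposalTally) -> float:
    """Fraction of total supply that voted on this proposal."""
    return (p.votes_for + p.votes_against) / p.total_supply


def quorum_hit_rate(history: List[ProposalTally], quorum: float = 0.20) -> float:
    """Share of past proposals whose turnout met the quorum."""
    if not history:
        raise ValueError("history must not be empty")
    met = sum(1 for p in history if turnout(p) >= quorum)
    return met / len(history)


def whale_could_flip(p: ProposalTally, whale_balance: float) -> bool:
    """True if an unused balance of this size exceeds the winning margin."""
    return whale_balance > abs(p.votes_for - p.votes_against)


# Made-up tallies against a supply of 1000 tokens.
history = [
    ProposalTally(150, 30, 1000),   # turnout 18%: misses quorum
    ProposalTally(200, 100, 1000),  # turnout 30%
    ProposalTally(120, 110, 1000),  # turnout 23%, margin of only 10
    ProposalTally(60, 20, 1000),    # turnout 8%: misses quorum
]
print(quorum_hit_rate(history))
print(whale_could_flip(history[2], whale_balance=50))
```

In this toy history the configured 20% quorum is met only half the time, and one close vote could have been reversed by a holder with 50 tokens. A static check that "quorum is set to 20%" would surface neither fact.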

Integrating smart contract security with governance analysis

One of the biggest wins in 2025 is the convergence of code auditing and governance modeling into unified blockchain audit services. Bugs in upgradeable proxies, timelock controllers or access-control lists are no longer treated purely as technical flaws; they are classified by their governance impact. During a smart contract security audit, AI tools map every privileged function—pause, mint, upgrade, change oracle—to actual human or organizational identities behind multisigs and DAOs. The governance audit layer then asks: what is the likelihood that these actors collude, get compromised, or face regulatory pressure? This bridge between “who holds the keys” and “who can vote to change the rules” transforms audits from isolated snapshots into system-level resilience assessments spanning code, people and political economy.
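A minimal sketch of the "who holds the keys" mapping might look like the following. The function names, addresses, entity labels and the signer-to-entity table are all hypothetical. In practice that attribution is the hard part and would come from off-chain research or clustering models.

```python
from dataclasses import dataclass
from typing import Dict, FrozenSet, List


@dataclass(frozen=True)
class Multisig:
    signers: FrozenSet[str]
    threshold: int  # number of signatures required


def entities_meeting_threshold(msig: Multisig, signer_owner: Dict[str, str]) -> List[str]:
    """Entities that control enough signer keys to meet the threshold alone."""
    counts: Dict[str, int] = {}
    for signer in msig.signers:
        # Signers with no known owner are treated as their own entity.
        owner = signer_owner.get(signer, signer)
        counts[owner] = counts.get(owner, 0) + 1
    return sorted(owner for owner, n in counts.items() if n >= msig.threshold)


# Hypothetical privileged functions and the multisigs guarding them.
privileged = {
    "upgrade": Multisig(frozenset({"0xA", "0xB", "0xC"}), threshold=2),
    "pause": Multisig(frozenset({"0xD"}), threshold=1),
}
# Hypothetical attribution of signer keys to real-world entities.
owners = {"0xA": "FoundationCo", "0xB": "FoundationCo", "0xC": "Independent", "0xD": "OpsTeam"}

report = {fn: entities_meeting_threshold(m, owners) for fn, m in privileged.items()}
print(report)
```

Here the nominal 2-of-3 upgrade multisig collapses to single-entity control once two keys are attributed to the same organization. A code-only review would not see this.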

Limitations, biases and practical risks of AI assistance

Of course, AI-assisted governance audits are not magic oracles. The models rely on imperfect data and inherit biases from past governance outcomes, which may favor incumbents or popular delegates. Attackers can attempt to game the system by generating noise in forums, spinning up sybil accounts or staging seemingly benign proposals to mislead anomaly detectors. Another risk is overreliance: teams might treat governance risk scores as absolute truth and ignore contextual judgment from experienced contributors. That is why responsible providers of blockchain governance risk assessment services combine automated scoring with human review, explicit model cards, and clear explanations of limitations. The healthiest setups treat AI as an aggressive assistant that over-flags potential issues, while final decisions remain with multidisciplinary review committees.

Future outlook to 2030: where this is heading

Looking ahead from 2025, the trajectory is pretty clear: AI-assisted governance audits will shift from optional add-ons to default safety infrastructure for serious protocols. In the short term, we’ll see tighter integration between on-chain monitoring, off-chain reputation systems and legal entity records, enabling near-real-time crypto project compliance audit checks. By around 2027, advanced systems will not just flag risks but propose governance patches—suggesting new quorum curves, voting delay parameters or delegation schemes, then backtesting them on historical data. By 2030, mature ecosystems may run “autopilot governance guards” that can temporarily throttle dangerous proposals or require additional review when a model predicts systemic risk. Teams that embrace these tools early will likely experience fewer catastrophic governance failures and more controlled evolution, while those relying solely on manual oversight may find themselves playing catch-up in an increasingly complex multi-chain reality.