Why AI-assisted audit trails on blockchains actually matter

When people talk about blockchains, they usually mention immutability and transparency, as if that magically solves all audit problems. In practice, raw on-chain data is noisy, fragmented across contracts and chains, and hard to interpret. AI-assisted audit trail generation is about turning that chaotic stream of transactions into a clean, navigable story: who did what, when, via which smart contract, and under which business rule. This is where audit trail tooling becomes a real part of the operational stack rather than an afterthought for compliance reports.

Instead of engineers manually stitching together events from block explorers and log files, you let AI models classify, correlate and enrich events in close to real time. The result is a human-readable audit trail that can be queried by compliance officers, internal auditors and even product teams, without every question becoming a “please ask the blockchain team” request.

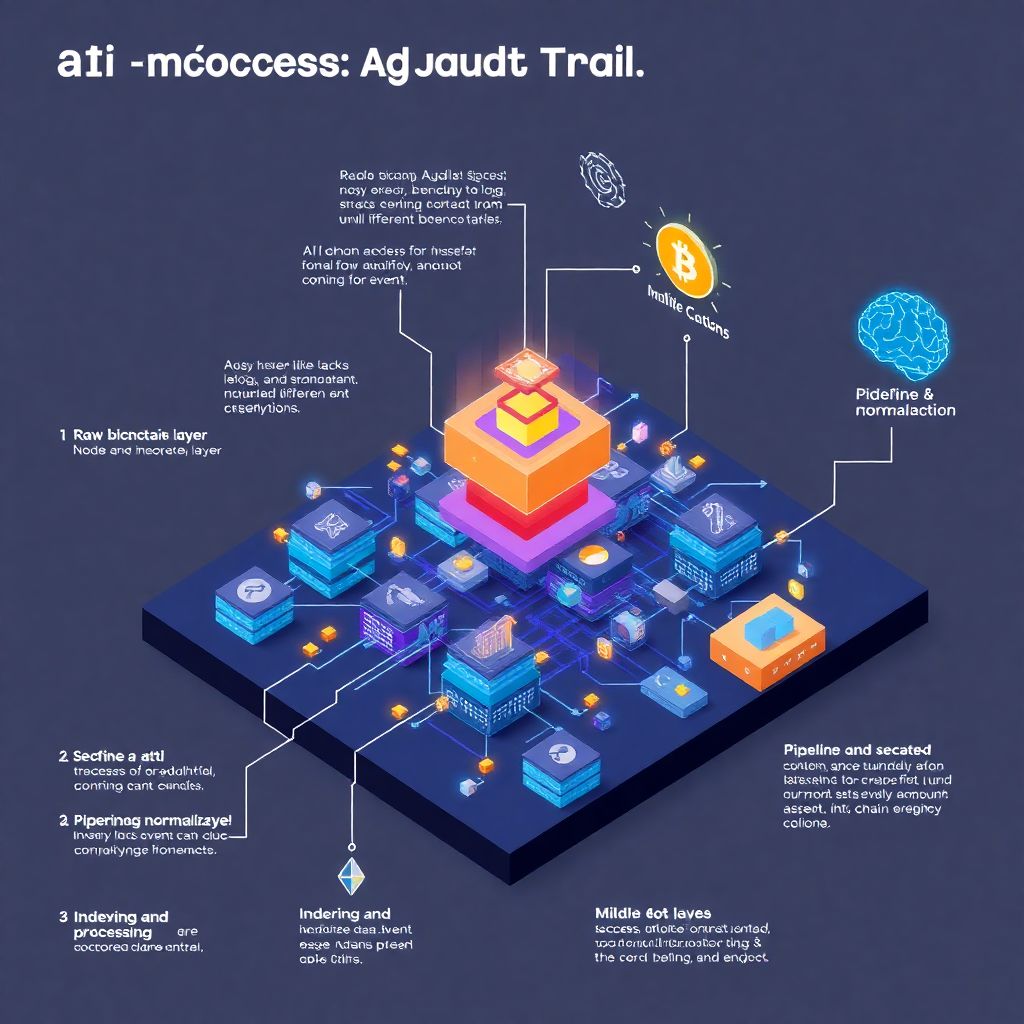

Core building blocks you’ll need

Blockchain data access and indexing

To get any AI-powered pipeline running, you first need reliable, structured data. Directly polling a node with JSON-RPC calls is fragile and slow for serious auditing. A more robust approach is to plug in a dedicated indexer that can decode events, function calls and transfers into a normalized schema. This could be a hosted indexing service, a self-hosted indexer for sensitive environments, or a custom ETL stack pulling from archival nodes. The key is deterministic, replayable access to full transaction histories so your audit trail can be regenerated consistently if rules or models change.
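As a minimal sketch of what "decoding into a normalized schema" can mean, the Python below turns one raw log, in the shape returned by the standard `eth_getLogs` JSON-RPC method, into a typed record for an ERC-20 `Transfer` event. The `NormalizedTransfer` fields and the `decode_transfer` helper are illustrative names chosen for this example, not part of any particular indexer.

```python
from dataclasses import dataclass
from typing import Optional

# keccak256("Transfer(address,address,uint256)"): topic0 of the ERC-20 Transfer event.
TRANSFER_TOPIC = "0xddf252ad1be2c89b69c2b068fc378daa952ba7f163c4a11628f55a4df523b3ef"


@dataclass(frozen=True)
class NormalizedTransfer:  # hypothetical schema; adapt to your own data model
    chain: str
    block_number: int
    tx_hash: str
    log_index: int
    token: str
    sender: str
    recipient: str
    amount: int  # raw integer units, before applying token decimals


def _topic_to_address(topic: str) -> str:
    # Indexed addresses are left-padded to 32 bytes; the last 20 bytes are the address.
    return "0x" + topic[-40:].lower()


def decode_transfer(chain: str, log: dict) -> Optional[NormalizedTransfer]:
    """Return a normalized record, or None if the log is not an ERC-20 Transfer."""
    topics = log["topics"]
    # An ERC-20 Transfer log carries exactly three topics: signature, from, to.
    if len(topics) != 3 or topics[0].lower() != TRANSFER_TOPIC:
        return None
    return NormalizedTransfer(
        chain=chain,
        block_number=int(log["blockNumber"], 16),
        tx_hash=log["transactionHash"].lower(),
        log_index=int(log["logIndex"], 16),
        token=log["address"].lower(),
        sender=_topic_to_address(topics[1]),
        recipient=_topic_to_address(topics[2]),
        amount=int(log["data"], 16),
    )


# Made-up sample log, used only to exercise the decoder.
sample_log = {
    "address": "0x" + "aa" * 20,
    "blockNumber": "0x10",
    "transactionHash": "0x" + "bb" * 32,
    "logIndex": "0x2",
    "topics": [
        TRANSFER_TOPIC,
        "0x" + "00" * 12 + "11" * 20,
        "0x" + "00" * 12 + "22" * 20,
    ],
    "data": "0x" + "00" * 30 + "03e8",
}
transfer = decode_transfer("ethereum", sample_log)
```

Because the decoder is a pure function of the log, replaying the same block range yields the same records, which is the property that lets you regenerate the audit trail later.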

In an enterprise setting, these indexers are often wrapped in audit tooling that exposes APIs and streaming interfaces. That layer should offer filtering by contract, address, topic and block range, plus metadata enrichment (e.g., linking addresses to internal customer IDs). The cleaner and richer this base layer is, the simpler your downstream AI logic becomes; otherwise you’ll waste model capacity on problems better solved by deterministic parsing and schema design.

AI and rules engines as complementary tools

Once the raw data is normalized, the next layer consists of AI models and deterministic rules that collaborate. For well-understood behaviors like “multi-sig wallet approval flow” or “standard ERC-20 transfer,” you can hard-code rules and state machines. For fuzzier tasks – such as mapping arbitrary addresses to user segments, detecting unusual patterns, or assigning semantic labels to on-chain actions – AI models shine. Treat them as classifiers and explainers sitting on top of your structured stream, not as magical black boxes trying to understand raw hex.

The most practical stack is usually a hybrid: a rules engine for clear-cut logic and a small collection of specialized models for ambiguous mapping tasks. Instead of an all-purpose large language model glued to a node, you structure your pipeline so models have access to context (decoded parameters, known business objects, historical activity) and output structured labels your audit engine understands. That way, any AI-powered compliance software you build or buy can produce consistent, queryable fields rather than only free-form text descriptions.
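One way to picture the hybrid is a function that tries deterministic rules first and only falls back to a model when no rule matches, always returning the same structured label. This is a sketch under assumptions: the `Label` fields, the event keys (`event_name`, `standard`) and the `stub_model` placeholder are all invented for illustration.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple


@dataclass(frozen=True)
class Label:
    event_type: str
    source: str        # "rule" or "model"
    confidence: float


Rule = Callable[[dict], Optional[str]]
Model = Callable[[dict], Tuple[str, float]]


def erc20_transfer_rule(event: dict) -> Optional[str]:
    # Clear-cut case: a decoded ERC-20 Transfer needs no model.
    if event.get("event_name") == "Transfer" and event.get("standard") == "ERC-20":
        return "token_transfer"
    return None


def classify(event: dict, rules: List[Rule], model: Model) -> Label:
    """Apply rules in order; fall back to the model only if none matches."""
    for rule in rules:
        event_type = rule(event)
        if event_type is not None:
            return Label(event_type, "rule", 1.0)
    event_type, confidence = model(event)
    return Label(event_type, "model", confidence)


def stub_model(event: dict) -> Tuple[str, float]:
    # Placeholder standing in for a real classifier service.
    return ("user_deposit", 0.72)


by_rule = classify({"event_name": "Transfer", "standard": "ERC-20"}, [erc20_transfer_rule], stub_model)
by_model = classify({"event_name": "Deposited"}, [erc20_transfer_rule], stub_model)
```

Recording `source` next to the label keeps it obvious, during a later review, which classifications came from transparent rules and which came from a model.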

Storage, search and governance layer

A usable audit trail is only as good as its persistence and query layer. You’ll need a storage backend, usually a combination of columnar stores or time-series databases for events and a document store for enriched entities and narratives. On top of that sits a search interface that compliance and risk teams can actually use, with filters for user, wallet, asset, jurisdiction, policy ID and severity. Plan for retention and versioning: audit records need to be immutable in practice, so corrections should be appended as new records that state when and why an AI label changed, never applied as in-place edits.
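The append-only idea can be sketched in a few lines: a relabel never overwrites the earlier record, it adds a new revision. The class and field names here are hypothetical, and an in-memory list stands in for whatever durable store you actually use.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import List, Optional


@dataclass(frozen=True)
class LabelRevision:
    event_id: str
    label: str
    model_version: str
    reason: str
    recorded_at: datetime


class AppendOnlyLabelLog:
    """In-memory stand-in for an append-only table of label revisions."""

    def __init__(self) -> None:
        self._revisions: List[LabelRevision] = []

    def record(self, event_id: str, label: str, model_version: str, reason: str) -> LabelRevision:
        revision = LabelRevision(event_id, label, model_version, reason, datetime.now(timezone.utc))
        self._revisions.append(revision)  # never update or delete earlier rows
        return revision

    def history(self, event_id: str) -> List[LabelRevision]:
        return [r for r in self._revisions if r.event_id == event_id]

    def current(self, event_id: str) -> Optional[LabelRevision]:
        history = self.history(event_id)
        return history[-1] if history else None


label_log = AppendOnlyLabelLog()
label_log.record("evt-1", "token_transfer", "clf-1.0", "initial classification")
label_log.record("evt-1", "bridge_withdrawal", "clf-1.1", "relabeled after retraining")
```

Queries for the current state read the latest revision, while an auditor can still walk the full history for any event.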

Because audits often span multiple departments, your governance layer matters too. Define who can configure new detection rules, approve model changes, or override mislabeled events. Many blockchain governance and audit efforts fail not because of bad models but because ownership is unclear. Tie every configuration change to a ticket or change request and preserve that metadata with the audit trail itself, so you can later explain why certain alerts started or stopped appearing at a given time.

Step-by-step: from raw chain data to AI-driven audit trails

Step 1: Define audit objectives and scope

Before writing any code, get precise about what you need to prove and to whom. Are you building an internal control environment, preparing for financial statement audits, or targeting regulatory oversight? Each scenario drives different requirements for granularity, latency and evidence. List out your critical business flows – deposits, withdrawals, liquidations, governance votes, NFT mints – and decide which ones must have a provable, replayable trail with AI classifications layered on top.

At this stage, also identify your data boundaries. Will you only ingest your own smart contracts’ events, or do you need to follow assets across external protocols? Do you need to enrich on-chain events with off-chain context like KYC profiles, customer support tickets or internal ledger entries? These questions affect how you model entities like “customer,” “position,” and “asset” and what your AI models will be expected to infer when generating higher-level event types from generic transfers and calls.

Step 2: Wire up indexing and event normalization

The next step is operational: connect to your chosen chains, spin up indexers, and define your canonical data model. For each smart contract you care about, configure ABI decoding so method calls and events are stored with human-meaningful field names. Normalize repeated patterns, such as a generalized “asset movement” schema that can represent both token transfers and internal accounting entries. Many attempts fail here: they skip schema design and dump everything into a generic blob field for the AI to interpret later.

Your goal is to express every low-level transaction as a structured event: who initiated it, what parameters were passed, what state changes occurred, and which higher-level business object it might relate to. This is still a deterministic process; you are not yet invoking models. Think of it as building the raw “ledger” from the chain. The cleaner this layer is, the more reliable the AI-assisted audit trail generation will be, since models will receive events with explicit semantics instead of needing to reverse-engineer meaning from raw data.
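To make the “asset movement” idea concrete, here is a rough sketch of one schema fed by two adapters, one for on-chain token transfers and one for internal ledger entries. Every field name and input key below is an assumption made for the example; your canonical model will differ.

```python
from dataclasses import dataclass
from decimal import Decimal
from typing import Optional


@dataclass(frozen=True)
class AssetMovement:  # hypothetical canonical schema
    source: str                      # "on_chain" or "internal_ledger"
    reference: str                   # tx hash plus log index, or ledger entry ID
    asset: str
    from_party: str
    to_party: str
    amount: Decimal
    initiated_by: str
    business_object: Optional[str] = None  # e.g. an order or position ID


def from_token_transfer(transfer: dict, symbol: str, decimals: int) -> AssetMovement:
    # Scale the raw integer amount by the token's decimals.
    amount = Decimal(transfer["raw_amount"]) / (Decimal(10) ** decimals)
    return AssetMovement(
        source="on_chain",
        reference=f'{transfer["tx_hash"]}:{transfer["log_index"]}',
        asset=symbol,
        from_party=transfer["sender"],
        to_party=transfer["recipient"],
        amount=amount,
        initiated_by=transfer["tx_origin"],
    )


def from_ledger_entry(entry: dict) -> AssetMovement:
    return AssetMovement(
        source="internal_ledger",
        reference=entry["entry_id"],
        asset=entry["asset"],
        from_party=entry["debit_account"],
        to_party=entry["credit_account"],
        amount=Decimal(entry["amount"]),
        initiated_by=entry["created_by"],
        business_object=entry.get("order_id"),
    )


on_chain = from_token_transfer(
    {"tx_hash": "0xabc", "log_index": 3, "sender": "0x01", "recipient": "0x02",
     "tx_origin": "0x01", "raw_amount": 1500000},
    symbol="USDC",
    decimals=6,
)
ledger = from_ledger_entry(
    {"entry_id": "L-9", "asset": "USDC", "debit_account": "hot-wallet",
     "credit_account": "cust-77", "amount": "1.5", "created_by": "ops-bot", "order_id": "ORD-5"}
)
```

Both adapters are deterministic, so downstream models receive one shape with explicit semantics regardless of where the movement originated.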

Step 3: Introduce AI classification and correlation

Now you add the AI layer. Typical tasks include classifying event types (“user deposit,” “liquidation,” “bridge withdrawal”), correlating multiple transactions into one logical operation, and flagging anomalies compared to historical behavior. For example, a bridge withdrawal might involve several internal calls and transfers; the model can assign a single “operation ID” to all of them and label that sequence as one action in the audit trail. This significantly improves readability and speeds up investigations.
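Before reaching for a model, it helps to have a deterministic correlation baseline to compare against. The sketch below groups events that share a transaction hash or a shared correlation key (for instance a bridge message ID) and gives each group one operation ID. It is a stand-in for the model-driven correlation described above, not a replacement; the `correlation_key` field and the `op-` ID format are assumptions.

```python
import hashlib
from collections import defaultdict
from typing import Dict, List, Tuple


def assign_operation_ids(events: List[dict]) -> List[str]:
    """Return one operation ID per event; linked events share the same ID."""
    parent = list(range(len(events)))

    def find(i: int) -> int:
        while parent[i] != i:
            parent[i] = parent[parent[i]]  # path halving
            i = parent[i]
        return i

    def union(a: int, b: int) -> None:
        root_a, root_b = find(a), find(b)
        if root_a != root_b:
            parent[max(root_a, root_b)] = min(root_a, root_b)

    # Link every event to the first event seen with the same key.
    first_seen: Dict[Tuple[str, str], int] = {}
    for index, event in enumerate(events):
        keys = [("tx", event["tx_hash"])]
        if event.get("correlation_key") is not None:
            keys.append(("corr", event["correlation_key"]))
        for key in keys:
            if key in first_seen:
                union(index, first_seen[key])
            else:
                first_seen[key] = index

    groups: Dict[int, List[int]] = defaultdict(list)
    for index in range(len(events)):
        groups[find(index)].append(index)

    # Derive a stable ID from the sorted transaction hashes in each group.
    operation_ids = [""] * len(events)
    for members in groups.values():
        tx_hashes = sorted({events[i]["tx_hash"] for i in members})
        digest = hashlib.sha256("|".join(tx_hashes).encode("utf-8")).hexdigest()[:16]
        for i in members:
            operation_ids[i] = "op-" + digest
    return operation_ids


bridge_events = [
    {"tx_hash": "0xa1", "correlation_key": "msg-7"},  # lock on source chain
    {"tx_hash": "0xa1"},                              # fee transfer, same transaction
    {"tx_hash": "0xb2", "correlation_key": "msg-7"},  # release on destination chain
    {"tx_hash": "0xc3"},                              # unrelated transfer
]
operation_ids = assign_operation_ids(bridge_events)
```

Cases this baseline cannot link, such as related transactions with no shared key, are where a learned correlation model earns its place.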

Implement the models as services that consume normalized events and output structured tags, risk scores and narrative snippets. For real-world workloads, you might use smaller, fine-tuned models for classification and a separate model for free-text explanations where needed. Keep every model output versioned and link it to the model configuration so that if you retrain or upgrade, you can still reproduce previous results. That discipline is critical if an external auditor later asks why some events changed category between reporting periods.
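A small sketch of the versioning discipline: fingerprint the model configuration in a key-order-independent way and store that fingerprint with every output. The `ModelOutput` fields and the sample configuration are illustrative assumptions.

```python
import hashlib
import json
from dataclasses import dataclass


def config_fingerprint(config: dict) -> str:
    """Hash a model configuration so that key order does not change the result."""
    canonical = json.dumps(config, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


@dataclass(frozen=True)
class ModelOutput:
    event_id: str
    label: str
    risk_score: float
    model_name: str
    model_version: str
    config_sha256: str


def tag_output(event_id: str, label: str, risk_score: float,
               model_name: str, model_version: str, config: dict) -> ModelOutput:
    return ModelOutput(event_id, label, risk_score, model_name, model_version,
                       config_fingerprint(config))


# Hypothetical configuration, used only for illustration.
config_v1 = {"threshold": 0.8, "features": ["amount", "counterparty_age"]}
output = tag_output("evt-42", "liquidation", 0.91, "event-classifier", "1.3.0", config_v1)
```

If a category changes between reporting periods, comparing `model_version` and `config_sha256` on the two records shows whether the model, its configuration, or neither changed.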

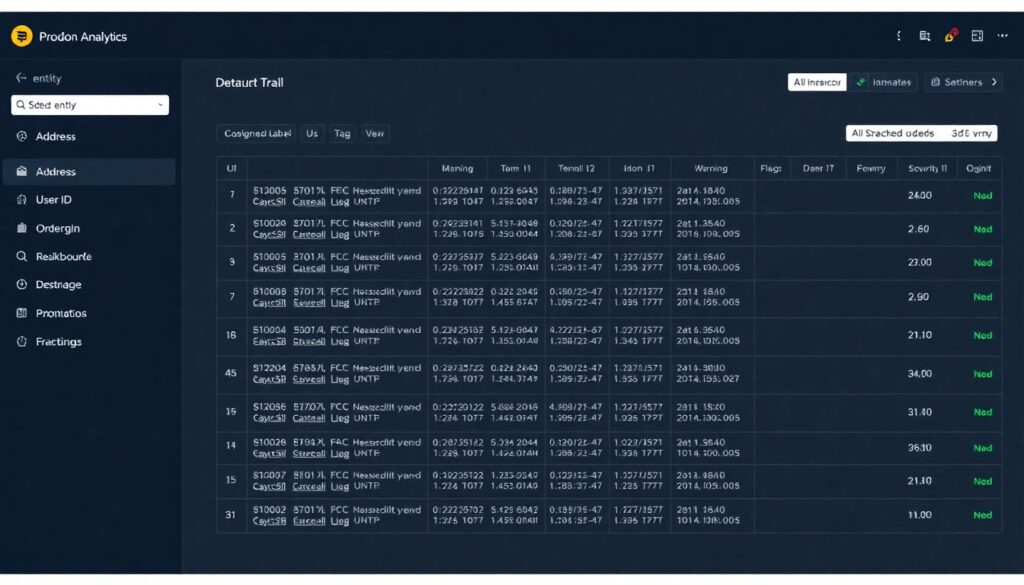

Step 4: Build the human-facing audit view

Even the most accurate models are useless if the output is hard to consume. Design an interface where a user can select an entity (address, user ID, order ID) and see a chronological, enriched audit trail: raw events, AI-assigned labels, rule-based flags and links out to block explorers for independent verification. Make every step drillable; a compliance officer should be able to click into an event and see all supporting on-chain data plus any off-chain metadata used by the model.

This is also a good place to surface the outputs of automated transaction monitoring. Instead of siloed alert dashboards, embed alert markers directly into the timeline so you can see anomalies in their full context. From here, your team can export evidence packages, including hashes, decoded payloads and AI explanations, whenever an incident, dispute or formal audit occurs. Over time, you’ll likely add saved views, scheduled reports and case management integrations so investigations move smoothly from detection to resolution.
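An evidence package can be as simple as the exported events plus a hash per item and one manifest hash over all of them, so a recipient can check that nothing was altered after export. The package layout below is an assumption for illustration, not a standard format.

```python
import hashlib
import json
from typing import List


def _sha256_hex(text: str) -> str:
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def _canonical(obj) -> str:
    # Stable JSON encoding so the same event always hashes to the same value.
    return json.dumps(obj, sort_keys=True, separators=(",", ":"))


def build_evidence_package(case_id: str, events: List[dict]) -> dict:
    items = [{"event": e, "sha256": _sha256_hex(_canonical(e))} for e in events]
    manifest = _sha256_hex(case_id + "".join(item["sha256"] for item in items))
    return {"case_id": case_id, "items": items, "manifest_sha256": manifest}


def verify_evidence_package(package: dict) -> bool:
    """Recompute every hash and compare with what the package claims."""
    for item in package["items"]:
        if _sha256_hex(_canonical(item["event"])) != item["sha256"]:
            return False
    expected = _sha256_hex(
        package["case_id"] + "".join(item["sha256"] for item in package["items"])
    )
    return expected == package["manifest_sha256"]


package = build_evidence_package(
    "CASE-12",
    [
        {"tx_hash": "0xa1", "label": "bridge_withdrawal", "explanation": "matched rule R-3"},
        {"tx_hash": "0xb2", "label": "anomaly", "explanation": "amount far above history"},
    ],
)
```

The manifest hash only proves internal consistency; the raw transactions should still be checked independently against the chain, which is what the block explorer links in the timeline are for.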

Step 5: Integrate with your control and compliance processes

Finally, plug this audit trail engine into broader risk and compliance workflows. Map AI-detected event types and anomalies to existing internal controls – for example, segregation-of-duties checks or thresholds for large transactions. Connect the system with your ticketing platform so that certain alerts automatically generate review tasks, and store the results of those reviews alongside the underlying events. The audit trail then becomes a living system of record not only for on-chain actions but also for the organization’s responses to them.
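As a rough illustration of mapping events to controls, the sketch below checks a large-transaction threshold and a segregation-of-duties condition, emits review tasks, and records the review outcome next to the task. The control IDs, the threshold value and the event fields are all hypothetical.

```python
from decimal import Decimal
from typing import List

LARGE_TRANSFER_THRESHOLD = Decimal("100000")  # hypothetical policy value


def evaluate_controls(event: dict) -> List[dict]:
    """Return the review tasks this event should open, if any."""
    tasks = []
    if event["event_type"] == "withdrawal" and event["amount"] >= LARGE_TRANSFER_THRESHOLD:
        tasks.append({"control_id": "CTRL-LARGE-TX", "event_id": event["event_id"], "status": "open"})
    approver = event.get("approver")
    # Segregation of duties: the initiator must not approve their own action.
    if approver is not None and approver == event["initiator"]:
        tasks.append({"control_id": "CTRL-SOD", "event_id": event["event_id"], "status": "open"})
    return tasks


def record_review(task: dict, reviewer: str, outcome: str) -> dict:
    # Return a new record so the original open task stays in the trail.
    return {**task, "status": "reviewed", "reviewer": reviewer, "outcome": outcome}


event = {
    "event_id": "evt-9",
    "event_type": "withdrawal",
    "amount": Decimal("250000"),
    "initiator": "alice",
    "approver": "alice",
}
tasks = evaluate_controls(event)
reviewed = record_review(tasks[0], reviewer="carol", outcome="approved_with_note")
```

In practice the task creation would call your ticketing platform, and the reviewed record would be stored alongside the underlying event.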

At this point, what started as a technical project evolves into a strategic control layer. Your setup may still rely on the same enterprise blockchain audit tools and log pipelines as before, but now they operate with higher-level semantics, helping you justify risk-weighted decisions and demonstrate due diligence. When external auditors or regulators show up, you can walk them through a coherent end-to-end view rather than static exports and ad-hoc spreadsheets, which can cut down on friction and review time.

Typical tools and components in a practical stack

Off-the-shelf platforms vs. custom builds

Teams often face a choice: build a tailored pipeline or adopt a ready-made platform. Commercial products increasingly brand themselves as AI-powered blockchain compliance software, bundling node connectivity, indexing, AI classification and dashboards in one package. These can be compelling if you need fast deployment across several chains and don’t have strong in-house data engineering. However, flexibility and data residency requirements may push you toward a more modular architecture that your team fully controls.

When evaluating tools, focus on their ability to integrate with your existing identity and accounting systems, expose stable APIs and support custom rules and models. Vendor lock-in becomes a serious issue if you cannot export enriched audit data in a structured, portable form. In heavily regulated sectors, scrutinize how the provider handles model updates, evidence retention and explainability. You want clear commitments on how long logs are stored, how versions are tagged and how you can reproduce past inferences if challenged by an external authority.

Key components you’ll likely combine

You don’t need to adopt a monolithic platform; many teams assemble a best-of-breed toolchain around a few core components:

– Node and indexing infrastructure (self-hosted or managed) for reliable historical and real-time data.

– Data pipelines (streaming and batch) to normalize events, enrich with off-chain data and route to storage and AI services.

– AI inference layer, including lightweight classifiers, anomaly detectors and optional generative models for narrative explanations.

On top of this, you add access control, audit logging and monitoring for the pipeline itself. It’s easy to forget that the system generating audit trails needs its own auditability. Make sure configuration changes, model deployments and data access are all logged with the same rigor as on-chain events. Over time, this meta-trail becomes invaluable when you need to prove that your own audit infrastructure has not been tampered with and that alerts were handled according to policy.
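One common way to make such a meta-trail tamper-evident is a hash chain, where each entry commits to the hash of the previous one. The sketch below is a minimal version under assumptions: the entry fields (`action`, `actor`, `ticket`) are invented, and a real deployment would also need to anchor the latest hash somewhere outside the system being audited.

```python
import hashlib
import json
from typing import List

GENESIS_HASH = "0" * 64


def _entry_hash(prev_hash: str, entry: dict) -> str:
    body = json.dumps(entry, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256((prev_hash + body).encode("utf-8")).hexdigest()


def append_entry(log: List[dict], entry: dict) -> None:
    """Append an entry whose hash depends on everything logged before it."""
    prev_hash = log[-1]["hash"] if log else GENESIS_HASH
    log.append({"entry": entry, "prev_hash": prev_hash, "hash": _entry_hash(prev_hash, entry)})


def verify_chain(log: List[dict]) -> bool:
    prev_hash = GENESIS_HASH
    for record in log:
        if record["prev_hash"] != prev_hash:
            return False
        if record["hash"] != _entry_hash(prev_hash, record["entry"]):
            return False
        prev_hash = record["hash"]
    return True


meta_log: List[dict] = []
append_entry(meta_log, {"action": "rule_added", "actor": "alice", "ticket": "CHG-101"})
append_entry(meta_log, {"action": "model_deployed", "actor": "bob", "ticket": "CHG-102"})
```

Editing or removing any earlier entry changes its hash and breaks every link after it, which is what makes silent modification detectable.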

Troubleshooting and hard lessons from real deployments

Dealing with noisy or incomplete data

One of the first issues teams run into is data quality. Smart contracts might emit inconsistent events, proxies can obscure the real logic, and legacy deployments sometimes lack ABIs. When your AI models see inconsistent inputs, they tend to overfit to quirks or simply misclassify. To counter this, invest early in data validation: schema checks, contract-specific parsers and backfilling routines that correct past anomalies. It’s easier to harden the ingestion layer than to make models “robust” to every possible inconsistency.
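A schema check at ingestion can be very plain. The sketch below returns a list of problems for one decoded event instead of raising, so bad records can be quarantined and backfilled later; the required fields are an assumed minimal set.

```python
import re
from typing import List

TX_HASH_RE = re.compile(r"^0x[0-9a-fA-F]{64}$")
ADDRESS_RE = re.compile(r"^0x[0-9a-fA-F]{40}$")
REQUIRED_FIELDS = ("tx_hash", "contract", "event_name", "block_number")  # assumed minimum


def validate_event(event: dict) -> List[str]:
    """Return human-readable problems; an empty list means the event passed."""
    problems = [f"missing field: {name}" for name in REQUIRED_FIELDS if name not in event]
    if "tx_hash" in event and not TX_HASH_RE.match(str(event["tx_hash"])):
        problems.append("tx_hash is not a 32-byte hex string")
    if "contract" in event and not ADDRESS_RE.match(str(event["contract"])):
        problems.append("contract is not a 20-byte hex address")
    if "block_number" in event:
        block = event["block_number"]
        # bool is a subclass of int in Python, so exclude it explicitly.
        if not isinstance(block, int) or isinstance(block, bool) or block < 0:
            problems.append("block_number is not a non-negative integer")
    return problems


good_event = {
    "tx_hash": "0x" + "ab" * 32,
    "contract": "0x" + "cd" * 20,
    "event_name": "Transfer",
    "block_number": 19000000,
}
bad_event = {"tx_hash": "0x1234", "contract": "0x" + "cd" * 20, "block_number": "19000000"}
```

Counting problems per contract over time is a cheap way to spot proxies, missing ABIs or upgraded contracts before they start skewing model inputs.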

In multi-chain environments, subtle differences in transaction semantics can cause silent errors. For instance, gas refund behaviors or fee structures might influence how you interpret “successful completion.” If you port rules from one chain to another without adjustment, you may end up mislabeling entire classes of events. Build chain-awareness directly into your normalization logic and make sure the AI receives a “chain type” or similar feature so it can distinguish patterns properly. Run frequent cross-checks against block explorers to catch divergences early.

Preventing AI hallucinations and overreach

Another recurring problem is over-reliance on generative models for tasks better handled deterministically. If you ask a large model to infer complex business logic or regulatory status from raw bytecode, you’re inviting hallucinations. Keep generative components constrained to descriptive tasks like summarizing an already-detected pattern or explaining a rule that fired. For critical classifications, apply supervised models trained on curated datasets or rule-based systems where the logic is transparent and testable.

You also need a clear escalation path for low-confidence inferences. Instead of silently accepting uncertain outputs, configure your system to flag them for manual review or fall back to a “generic” category. Over time, you can use those ambiguous cases as labeled training data to improve future versions. This feedback loop stabilizes the system and gradually shifts manual effort from routine classification to edge cases where human judgment genuinely adds value.
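The escalation path can start as a single threshold check. In this sketch, inferences below the threshold are stored under a “generic” category and queued for manual review, with the model's suggestion kept so it can later become labeled training data. The threshold value and field names are assumptions.

```python
from typing import List

REVIEW_THRESHOLD = 0.8  # hypothetical cut-off; tune against your own data


def apply_confidence_policy(event_id: str, label: str, confidence: float,
                            review_queue: List[dict],
                            threshold: float = REVIEW_THRESHOLD) -> dict:
    """Accept confident labels; otherwise fall back to 'generic' and queue a review."""
    if confidence >= threshold:
        return {"event_id": event_id, "label": label, "needs_review": False}
    review_queue.append(
        {"event_id": event_id, "suggested_label": label, "confidence": confidence}
    )
    return {"event_id": event_id, "label": "generic", "needs_review": True}


review_queue: List[dict] = []
accepted = apply_confidence_policy("evt-1", "user_deposit", 0.95, review_queue)
escalated = apply_confidence_policy("evt-2", "liquidation", 0.41, review_queue)
```

Once a reviewer confirms or corrects a queued item, the pair of event and final label is exactly the kind of curated example the next model version can learn from.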

Handling performance, scale and cost

As volume grows, running models on every single event can become expensive or slow. To keep latency and cost under control, introduce a tiered processing model. Use simple filters to discard or aggregate low-risk, high-frequency events before they reach heavy AI components. For example, small internal transfers between known, low-risk entities might only receive minimal tagging, while large or unusual operations trigger the full analysis pipeline with deep inference and anomaly detection.

– Batch-process historical data for backfills or periodic compliance reports.

– Reserve real-time AI analysis for scenarios where immediate reaction matters, such as fraud detection or sanctions screening.

– Profile each part of the pipeline regularly to find bottlenecks, from node latency to model throughput.
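The tiered processing model described above can be sketched as a cheap routing function that runs before any model is invoked. The tier names, the `unusual` flag and the amount limit are illustrative assumptions.

```python
from decimal import Decimal
from typing import Set


def choose_tier(event: dict, low_risk_entities: Set[str], small_amount_limit: Decimal) -> str:
    """Route small transfers between known low-risk entities to minimal tagging."""
    is_internal = (
        event["sender"] in low_risk_entities and event["recipient"] in low_risk_entities
    )
    is_small = event["amount"] <= small_amount_limit
    if is_internal and is_small and not event.get("unusual", False):
        return "minimal"
    return "full"  # everything else gets deep inference and anomaly detection


low_risk = {"treasury-hot", "treasury-cold"}
limit = Decimal("1000")

routine = choose_tier(
    {"sender": "treasury-hot", "recipient": "treasury-cold", "amount": Decimal("250")},
    low_risk, limit,
)
large = choose_tier(
    {"sender": "treasury-hot", "recipient": "treasury-cold", "amount": Decimal("50000")},
    low_risk, limit,
)
external = choose_tier(
    {"sender": "treasury-hot", "recipient": "0xunknown", "amount": Decimal("250")},
    low_risk, limit,
)
```

Defaulting to the full pipeline means a gap in the low-risk list costs extra compute rather than a missed detection.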

In some environments, it’s efficient to bring the models closer to the data by running them near your storage or indexing layer, reducing data transfer overhead. As you tune this architecture, continuously monitor accuracy metrics along with cost and performance; subtle optimizations can degrade detection quality if not measured properly. Maintaining this balance is an ongoing engineering task, not a one-time setup.

Making AI-assisted audit trails a reliable part of your stack

Done thoughtfully, AI-assisted audit trail generation turns raw blockchain data into a continuous, explainable story of system behavior. Instead of treating audits as periodic, painful events, you get a living view of your protocol or application that supports operations, compliance and incident response simultaneously. The combination of deterministic parsing, clear schemas and targeted models gives you both the rigor auditors expect and the flexibility engineers need.

As your system matures, consider periodic independent reviews or partnerships with external specialists who provide blockchain governance and audit services. They can validate your assumptions, stress-test your models and help align your evidence structure with regulatory expectations. Over time, the organizations that integrate these practices deeply into their infrastructure will be better positioned to withstand scrutiny, ship faster with confidence and scale on-chain operations without losing control over what actually happens on their ledgers.