AI-driven consumer protection in digital markets

From early online fraud to AI‑driven shields

A short history: from banners to marketplaces

In the late 1990s, online shopping was a wild west of bright banners, primitive carts and almost no real safeguards. Card scams, fake auctions and spammy “download now” buttons flourished because rules were slow to arrive and manual checks didn’t scale. During the 2010s, marketplaces and app stores exploded and regulators started to push harder, but most checks were still handled by people reviewing complaints after the damage was done. By 2025, the volume of digital transactions is so large that manual review cannot keep up on its own; automated, AI‑driven checks are needed to track new scam patterns, dark patterns and cross‑border fraud.

Why AI has become indispensable now

Back in 2015, a fraud team might review a few hundred suspicious orders per day. Today, a mid‑size marketplace easily processes millions of orders, with bots probing return policies, promo codes and chargebacks every minute. Scammers also use generative AI to craft realistic phishing emails, deepfake support chats and fake merchant profiles. Human reviewers alone can’t track all this in real time. That’s why companies turn to AI‑based consumer protection: models that digest logs, support chats and transaction streams in seconds, flag abnormal behavior before users realize something is off, and give regulators data they can actually work with.

Necessary tools for AI‑driven consumer protection

Data, infrastructure and “hygiene”

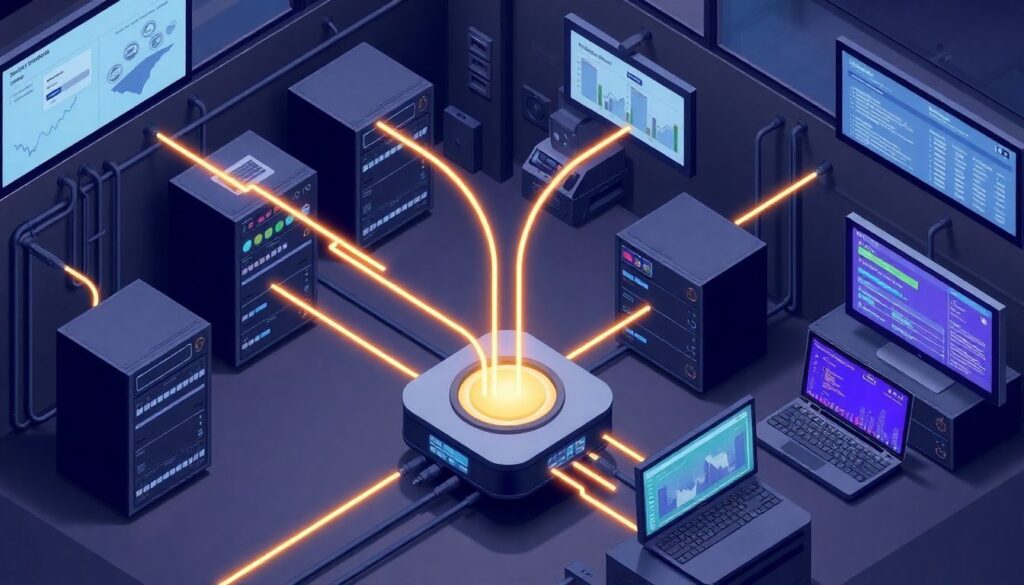

Before thinking about fancy algorithms, you need boring but critical plumbing. Start with reliable data pipelines from payment gateways, clickstream analytics, customer support, identity verification and dispute systems. Log events in a unified format and keep consistent user and merchant IDs; otherwise even the best model will be staring at chaos. Cloud platforms with scalable storage and GPUs help you react to seasonal spikes. Just as important are solid access controls and encryption; nothing kills trust faster than a “protection” system that leaks data. Good data hygiene is the quiet backbone of any robust AI risk management system, whether it serves an online marketplace or a fintech app.
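As a rough illustration of what a unified event format with consistent IDs could look like, here is a minimal Python sketch. The `MarketEvent` schema, the two normalizer functions and every raw field name (`customer`, `seller`, `ts`, `user`, `merchant`, `created`) are assumptions made up for this example, not the format of any particular gateway or helpdesk product.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Any


@dataclass(frozen=True)
class MarketEvent:
    """One normalized event, regardless of which system produced it."""
    event_type: str        # e.g. "payment", "support_ticket"
    source: str            # system the record came from
    user_id: str           # same ID scheme across all sources
    merchant_id: str
    occurred_at: datetime  # always timezone-aware
    payload: dict[str, Any] = field(default_factory=dict)


def normalize_payment(raw: dict[str, Any]) -> MarketEvent:
    """Map a hypothetical payment-gateway record onto the shared schema."""
    return MarketEvent(
        event_type="payment",
        source="payment_gateway",
        user_id=f"user:{raw['customer']}",
        merchant_id=f"merchant:{raw['seller']}",
        # Assumed: the gateway reports a Unix timestamp in seconds.
        occurred_at=datetime.fromtimestamp(raw["ts"], tz=timezone.utc),
        payload={"amount_cents": raw["amount_cents"], "currency": raw["currency"]},
    )


def normalize_ticket(raw: dict[str, Any]) -> MarketEvent:
    """Map a hypothetical support-ticket record onto the shared schema."""
    return MarketEvent(
        event_type="support_ticket",
        source="helpdesk",
        user_id=f"user:{raw['user']}",
        merchant_id=f"merchant:{raw['merchant']}",
        # Assumed: the helpdesk reports an ISO 8601 string with an offset.
        occurred_at=datetime.fromisoformat(raw["created"]),
        payload={"category": raw["category"]},
    )
```

With both sources mapped to the same `user_id` and `merchant_id` scheme, a payment and a later complaint about it can be joined on those keys without source-specific logic.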

Key AI tools and services

Once the basics are ready, you can pick specialized tools instead of reinventing the wheel. Many teams start with off‑the‑shelf AI fraud detection software that plugs into checkout, account creation and payout flows through APIs. On the compliance side, AI tools can scan product listings, ads and contracts for misleading claims or hidden fees. Large language models assist support agents by summarizing complaint histories and suggesting fair resolutions. Finally, an AI‑driven consumer rights monitoring platform can aggregate alerts from multiple channels, spot systemic abuses and produce reports tailored for regulators and internal risk committees.

Step‑by‑step process to launch a protection program

Preparation: understand the risks and goals

Before you open any dashboard, map real‑world harm. Look at chargebacks, complaint categories, negative reviews and regulator letters from the past two years. Separate annoyances (slow delivery) from true consumer harm (unauthorized charges, unfair subscription traps, counterfeit goods). Then define success metrics: reduction in fraud losses per transaction, faster complaint resolution, fewer compliance breaches. Align legal, product, support and data teams so everyone agrees on what “protection” means. Without that shared picture, you’ll end up with a fancy system that optimizes abstract scores instead of defending the actual people who use your platform every day.

Phased rollout: from pilot to scale

Here’s a practical way to roll out AI safeguards without blowing up production:

1. Start with a sandbox. Feed historical data into candidate models and compare their output with past fraud decisions and complaint outcomes.

2. Run in “shadow mode”. Deploy models to live traffic but let humans still make final calls, measuring how often AI agrees with experts.

3. Introduce soft actions. Use AI scores to trigger step‑up verification, extra explanations or manual review queues instead of outright blocks.

4. Scale and refine. Gradually allow higher‑impact actions once you’re confident in fairness, false‑positive rates and clear appeal paths for honest users.
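
Steps 2 and 3 above can be sketched in a few lines of Python. This is only an illustration: the `ShadowRecord` type, the function names and the 0.5 / 0.8 thresholds are assumptions chosen for the example, and the ordering of the soft actions (step‑up verification for medium scores, manual review for high ones) is one possible policy, not a recommendation from any specific vendor.

```python
from dataclasses import dataclass


@dataclass
class ShadowRecord:
    model_score: float   # model's estimated fraud probability, 0.0 to 1.0
    human_flagged: bool  # what the human reviewer actually decided


def agreement_rate(records: list[ShadowRecord], threshold: float = 0.8) -> float:
    """Share of shadow-mode cases where model and human reach the same call."""
    if not records:
        raise ValueError("no shadow-mode records to compare")
    agree = sum((r.model_score >= threshold) == r.human_flagged for r in records)
    return agree / len(records)


def soft_action(score: float, step_up_at: float = 0.5, review_at: float = 0.8) -> str:
    """Turn a score into a soft action; nothing here blocks the user outright."""
    if score >= review_at:
        return "manual_review"
    if score >= step_up_at:
        return "step_up_verification"
    return "allow"


# Example: the model and the reviewers disagree on one case out of four.
records = [
    ShadowRecord(0.90, True),
    ShadowRecord(0.20, False),
    ShadowRecord(0.85, False),
    ShadowRecord(0.10, False),
]
print(agreement_rate(records))  # 0.75
print(soft_action(0.6))         # step_up_verification
```

Agreement rate alone hides which side was right, so the disagreements (here the 0.85 case) are the ones worth reading by hand before moving on to step 4.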

Troubleshooting and continuous improvement

Typical problems and how to diagnose them

The first headache is usually false positives: real customers suddenly blocked from ordering or withdrawing money. Track such cases as a specific metric and review them weekly with cross‑functional teams. If one demographic or country is over‑blocked, your training data may be skewed and needs rebalancing. Another frequent issue is concept drift: scammers change tactics, and last year’s patterns become useless. Monitor model performance over time; when precision drops, retrain with fresh incidents. Finally, build a simple feedback button into internal tools so support and risk analysts can flag “AI got it wrong” cases that feed back into future iterations.
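
Two of the checks described above, over‑blocking by group and a drop in precision, are easy to compute once decisions are labeled. The sketch below assumes each decision is a `(group, blocked, was_fraud)` tuple and that precision is tracked as one number per week; the function names and the 0.05 tolerance are hypothetical choices for illustration.

```python
from collections import defaultdict
from typing import Iterable


def false_positive_rate_by_group(
    decisions: Iterable[tuple[str, bool, bool]],
) -> dict[str, float]:
    """For each group, the share of legitimate users who were blocked.

    Each decision is (group, blocked, was_fraud).
    """
    legit = defaultdict(int)
    blocked_legit = defaultdict(int)
    for group, blocked, was_fraud in decisions:
        if not was_fraud:
            legit[group] += 1
            if blocked:
                blocked_legit[group] += 1
    return {g: blocked_legit[g] / legit[g] for g in legit}


def needs_retraining(
    weekly_precision: list[float], baseline: float, tolerance: float = 0.05
) -> bool:
    """Flag drift when the latest weekly precision falls well below baseline."""
    if not weekly_precision:
        raise ValueError("no precision measurements yet")
    return weekly_precision[-1] < baseline - tolerance


decisions = [
    ("A", True, False),   # legitimate user in group A, blocked
    ("A", False, False),
    ("B", False, False),
    ("B", False, False),
    ("B", True, True),    # real fraud, correctly blocked; not a false positive
]
print(false_positive_rate_by_group(decisions))  # {'A': 0.5, 'B': 0.0}
print(needs_retraining([0.91, 0.90, 0.82], baseline=0.90))  # True
```

A large gap between groups, like the one between A and B here, is a prompt to inspect the training data rather than proof of bias on its own, since small groups produce noisy rates.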

Balancing protection, privacy and transparency

Consumers and regulators in 2025 expect more than just “the algorithm said so”. Document how each model is used, which features it relies on, and what decisions stay in human hands. Offer users short, plain‑language explanations when their transaction is delayed or additional checks are required, and provide an appeal route with real people. Coordinate with legal teams so your systems align with upcoming AI laws and evolving consumer‑protection rules in different regions. When handled this way, AI doesn’t replace traditional oversight but becomes a powerful ally that keeps digital markets open, innovative and genuinely safe for ordinary buyers and sellers.
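
One lightweight way to produce the plain‑language explanations mentioned above is to map internal reason codes to pre‑approved sentences. The following Python sketch is an assumption‑heavy illustration: the reason codes, the wording and the `appeal_url` parameter are all invented for the example and would need review by legal and support teams in practice.

```python
# Hypothetical reason codes mapped to wording a non-expert can understand.
EXPLANATIONS = {
    "unusual_location": (
        "This order was placed from a location we have not seen "
        "on your account before."
    ),
    "high_value_first_order": (
        "This is a large first purchase, so we run an extra check."
    ),
}
DEFAULT_EXPLANATION = "We need to run an additional check on this transaction."


def explain_delay(reason_codes: list[str], appeal_url: str) -> str:
    """Build a short user-facing message with an appeal route to a human."""
    sentences = [EXPLANATIONS.get(code, DEFAULT_EXPLANATION) for code in reason_codes]
    if not sentences:
        sentences = [DEFAULT_EXPLANATION]
    # Drop repeated sentences while keeping their original order.
    unique = list(dict.fromkeys(sentences))
    appeal = (
        "If you think this is a mistake, you can ask a member of our team "
        f"to review it: {appeal_url}"
    )
    return " ".join(unique + [appeal])
```

Unknown codes fall back to a generic sentence, so internal model details never leak into the message and the user always sees the appeal route.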