Why On‑Chain Analytics Needs a Rethink

From “Surveillance” to “Stewardship” of Data

Today, a lot of blockchain analytics looks like mass surveillance with a friendly UI. Wallet clusters, transaction graphs, and risk scores are usually computed by copying chain data into huge proprietary off-chain databases.

That model is hitting a wall. Regulators want more transparency; users want more privacy; DeFi protocols want granular insights without deanonymizing everyone. This tension is exactly where AI-enabled privacy-preserving analytics on-chain comes into play: keep the data on-chain or cryptographically protected, run smart analytics anyway, and only reveal what’s necessary.

In other words, move from “tell me everything about that wallet” to “prove to me what I need to know, without exposing the rest”.

—

Tech Building Blocks: How Does This Even Work?

Confidential Computing + ZK + AI: The Unlikely Trio

A practical stack for AI-driven, privacy-preserving blockchain analytics tends to blend three families of technologies:

– Confidential computing: trusted execution environments (TEEs) such as Intel SGX, AMD SEV, or AWS Nitro Enclaves, where code runs in an isolated, hardware-protected environment.

– Zero‑knowledge proofs (ZKPs): prove a statement about data without leaking the data itself.

– Model architectures suited to encrypted or resource-constrained execution: compact GNNs, tree‑based models, or quantized transformers.

A realistic on-chain flow might look like this:

1. Raw transaction traces and state deltas flow into a TEE.

2. An AI model computes risk, behavior clusters, or credit scores.

3. The enclave outputs:

– an encrypted result viewable only by the entitled party, and

– a ZK proof that “the score was computed correctly using model X on committed dataset Y”.

Nothing leaves the enclave in the clear, yet the chain (or a regulator) can verify the computation.
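The three-step flow can be simulated in a few lines of standard-library Python. This is a toy sketch built on loud assumptions:

- `ENCLAVE_KEY` stands in for a hardware attestation key.
- The HMAC `tag` stands in for both the attestation quote and the ZK proof.
- `keystream_xor` is an unauthenticated toy cipher.
- `MODEL_WEIGHTS`, `run_in_enclave`, and `verify` are hypothetical names.

A real system would use the enclave's remote-attestation report, an asymmetric signature that anyone can check, and an actual proof system.

```python
import hashlib
import hmac
import json
import secrets
from dataclasses import dataclass

# Toy stand-ins, NOT real TEE or ZK primitives:
# ENCLAVE_KEY plays the hardware attestation key; the HMAC tag plays the proof.
ENCLAVE_KEY = secrets.token_bytes(32)
MODEL_WEIGHTS = {"tx_count": 0.02, "mixer_hops": 0.5, "age_days": -0.001}  # hypothetical "model X"
MODEL_ID = hashlib.sha256(json.dumps(MODEL_WEIGHTS, sort_keys=True).encode()).hexdigest()


def commit(obj) -> str:
    """Hash commitment to a JSON-serializable object (unsalted, for brevity)."""
    return hashlib.sha256(json.dumps(obj, sort_keys=True).encode()).hexdigest()


def keystream_xor(key: bytes, nonce: bytes, data: bytes) -> bytes:
    """Toy stream cipher: XOR with a SHAKE-256 keystream. Unauthenticated; illustration only."""
    stream = hashlib.shake_256(key + nonce).digest(len(data))
    return bytes(a ^ b for a, b in zip(data, stream))


@dataclass
class EnclaveOutput:
    ciphertext: bytes  # readable only by the entitled requester
    nonce: bytes
    report: dict       # public: model id plus input/output commitments
    tag: str           # stand-in for an attestation signature / ZK proof


def run_in_enclave(features: dict, requester_key: bytes) -> EnclaveOutput:
    # Step 2: the model scores plaintext features inside the "enclave".
    score = round(sum(MODEL_WEIGHTS[k] * features[k] for k in MODEL_WEIGHTS), 4)
    # Step 3a: encrypt the result for the entitled party.
    nonce = secrets.token_bytes(16)
    ciphertext = keystream_xor(requester_key, nonce, json.dumps({"score": score}).encode())
    # Step 3b: publish commitments and a tag binding them to the model id.
    report = {"model_id": MODEL_ID, "input": commit(features), "output": commit({"score": score})}
    tag = hmac.new(ENCLAVE_KEY, json.dumps(report, sort_keys=True).encode(), hashlib.sha256).hexdigest()
    return EnclaveOutput(ciphertext, nonce, report, tag)


def verify(out: EnclaveOutput, expected_model_id: str) -> bool:
    """What the chain or a regulator checks: the expected model ran and the report is untampered."""
    expected = hmac.new(ENCLAVE_KEY, json.dumps(out.report, sort_keys=True).encode(),
                        hashlib.sha256).hexdigest()
    return out.report["model_id"] == expected_model_id and hmac.compare_digest(expected, out.tag)


key = secrets.token_bytes(32)  # requester's key, assumed pre-shared with the enclave
out = run_in_enclave({"tx_count": 120, "mixer_hops": 2, "age_days": 400}, key)
print(verify(out, MODEL_ID))                                      # True
print(json.loads(keystream_xor(key, out.nonce, out.ciphertext)))  # decrypted score
```

In this toy the verifier shares the symmetric key, so the example only illustrates the data flow. The point is what is public (`report`, `tag`) versus what only the key holder can read (`ciphertext`).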

On-Chain vs Off-Chain Is the Wrong Question

Most teams still argue “on-chain vs off-chain analytics”. A better framing:

What is verifiable and what is linkable?

– Verifiable: can we be sure the analytics result wasn’t tampered with?

– Linkable: can someone correlate the result back to a specific identity or full history?

The goal for an AI-driven on-chain data analytics platform is:

– results are *verifiable*,

– data is *minimally linkable*.

In practice, this can mean one of two things. Commitments and proofs live on-chain while raw data never does, or models run inside decentralized TEEs whose attestations are anchored on-chain.
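To make “commitments on-chain, raw data off-chain” concrete, here is a minimal Merkle-tree sketch. It assumes SHA-256 with 0x00/0x01 prefixes for leaf/node domain separation, which is one common convention rather than any specific chain's format.

Only the 32-byte root would be published. Any single record can later be proven to belong to the committed batch without revealing the others. Function names are illustrative.

```python
import hashlib
from typing import List, Tuple


def leaf_hash(record: bytes) -> bytes:
    return hashlib.sha256(b"\x00" + record).digest()  # 0x00 prefix: leaf


def node_hash(left: bytes, right: bytes) -> bytes:
    return hashlib.sha256(b"\x01" + left + right).digest()  # 0x01 prefix: internal node


def merkle_root(records: List[bytes]) -> bytes:
    """Root of a non-empty batch; odd levels duplicate their last node."""
    level = [leaf_hash(r) for r in records]
    while len(level) > 1:
        if len(level) % 2:
            level.append(level[-1])
        level = [node_hash(level[i], level[i + 1]) for i in range(0, len(level), 2)]
    return level[0]


def merkle_proof(records: List[bytes], index: int) -> List[Tuple[bytes, bool]]:
    """Sibling hashes from leaf to root; the bool is True when the sibling sits on the right."""
    level = [leaf_hash(r) for r in records]
    proof = []
    while len(level) > 1:
        if len(level) % 2:
            level.append(level[-1])
        sibling = index ^ 1
        proof.append((level[sibling], sibling > index))
        level = [node_hash(level[i], level[i + 1]) for i in range(0, len(level), 2)]
        index //= 2
    return proof


def verify_inclusion(root: bytes, record: bytes, proof: List[Tuple[bytes, bool]]) -> bool:
    acc = leaf_hash(record)
    for sibling, sibling_is_right in proof:
        acc = node_hash(acc, sibling) if sibling_is_right else node_hash(sibling, acc)
    return acc == root


records = [b"tx:0xaa->0xbb:5", b"tx:0xbb->0xcc:2", b"tx:0xcc->0xdd:9",
           b"tx:0xdd->0xee:1", b"tx:0xee->0xaa:4"]
root = merkle_root(records)  # the only value that goes on-chain
proof = merkle_proof(records, 2)
print(verify_inclusion(root, records[2], proof))     # True
print(verify_inclusion(root, b"tx:forged", proof))   # False
```

A plain Merkle root binds the data but does not hide low-entropy records, because an adversary can guess a record and check it against the root. Salting each leaf is the usual fix.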

—

Hard Numbers: Where We Are and Where We’re Heading

Current State (Approximate, But Grounded)

Open finance generates a staggering amount of observable data. By 2024:

– Public blockchains regularly process 20–30 million transactions per day across major L1/L2s.

– Chain analytics and compliance tooling is already a $300–400M annual market, growing fast.

– Yet an estimated 10–15% or less of that market meaningfully uses privacy-preserving methods (ZK, TEEs, homomorphic encryption) in production; most tooling is still batch ETL + SQL + heuristics.

So, most “AI analytics” for DeFi is still traditional ML running on de‑anonymized, off-chain copies of on-chain data.

Forecasts: Why the Curve Bends Upwards

Given regulatory pressure and institutional onboarding, conservative forecasts from fintech research shops suggest:

– The broader blockchain data/analytics market could exceed $2B by 2030.

– Privacy-first solutions are likely to capture 30–40% of new spend once MiCA-style and FATF-style rules meet data-protection laws like GDPR.

– Confidential computing in financial services is projected to grow at 25–30% CAGR over the decade, and Web3 tends to piggyback on those enterprise-grade investments.

That’s not “maybe one day”; that’s “budget cycles are about to demand auditable privacy by default”.
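A quick sanity check on these forecasts is the compound annual growth rate they imply, (end / start)^(1 / years) − 1. The sketch assumes 2024 to 2030, which is six compounding years, and takes the $300–400M figure as the starting point.

The two numbers measure different scopes. The $300–400M covers chain analytics and compliance tooling, while the $2B forecast covers the broader data/analytics market. The result is therefore best read as a rough upper bound on the required growth rate, not as a forecast in its own right.

```python
def implied_cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate needed to go from start_value to end_value."""
    return (end_value / start_value) ** (1 / years) - 1


# Figures from the text, in millions of USD; 2024 -> 2030 assumed to be six years.
for base in (300, 400):
    print(f"${base}M -> $2,000M: {implied_cagr(base, 2000, 6):.1%} per year")
```

This prints roughly 37% and 31% per year. Both are somewhat above the 25–30% CAGR cited for confidential computing in financial services.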

—

Economics: Who Actually Pays, Who Actually Benefits

The Invisible Tax of Bad Privacy

Lack of privacy-preserving analytics is already expensive:

– DeFi protocols over-block whole regions or high‑risk countries because they can’t do granular, privacy‑safe checks.

– Market makers pay “information rents”: they give away alpha because they reveal too much trading logic when seeking advanced analytics support.

– Retail users price in “surveillance risk” by preferring fresh wallets, mixers, or privacy-preserving chains, fragmenting liquidity.

In practice, this is a hidden drag on TVL and user growth.

How Money Flows in a Privacy‑First Analytics Stack

A viable enterprise blockchain analytics solution built on confidential computing creates new revenue lines rather than just cost centers. For example:

– Per‑query fees: requesters pay to run a confidential risk assessment, and fees accrue to the protocol and to the node operators running enclaves.

– Model-as-a-service: AI models for fraud detection or credit scoring licensed to DAOs and fintechs, but executed privately.

– Data DAO dividends: communities contributing labeled data (e.g., fraud flags) to a privacy-preserving pool can share in revenues when that dataset powers compliance or risk products.

This turns compliance from a pure cost into a revenue-sharing data ecosystem, while still satisfying regulators.
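The per-query and data-DAO fee lines above boil down to a split rule. This minimal sketch uses hypothetical shares (20% protocol, 50% enclave operators, 30% data DAO) expressed in basis points, with integer arithmetic as a smart contract would use.

Within each group, payouts are pro rata to work done or data contributed. `SPLIT_BPS`, `pro_rata`, and `split_fee` are illustrative names, not any existing protocol's parameters.

```python
from typing import Dict, Union

# Hypothetical split in basis points (1 bp = 0.01%); must sum to 10,000.
SPLIT_BPS = {"protocol": 2000, "enclave_operators": 5000, "data_dao": 3000}


def pro_rata(pool: int, weights: Dict[str, int]) -> Dict[str, int]:
    """Integer pro-rata split (assumes a non-zero total weight); rounding dust stays with the caller."""
    total = sum(weights.values())
    return {who: pool * w // total for who, w in weights.items()}


def split_fee(fee_wei: int, operator_work: Dict[str, int],
              dao_contrib: Dict[str, int]) -> Dict[str, Union[int, Dict[str, int]]]:
    assert sum(SPLIT_BPS.values()) == 10_000
    operators = pro_rata(fee_wei * SPLIT_BPS["enclave_operators"] // 10_000, operator_work)
    contributors = pro_rata(fee_wei * SPLIT_BPS["data_dao"] // 10_000, dao_contrib)
    paid = sum(operators.values()) + sum(contributors.values())
    # The protocol treasury receives its share plus any rounding dust, so nothing is lost.
    return {"protocol": fee_wei - paid, "operators": operators, "data_dao": contributors}


print(split_fee(1_000_000, {"op1": 3, "op2": 1}, {"alice": 1, "bob": 1}))
```

Routing rounding dust to the protocol treasury keeps payouts summing exactly to the fee. That invariant matters once amounts are denominated in the chain's smallest unit.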

—

Industry Impact: DeFi, Institutions, and Regulators

DeFi: From “Anonymous” to “Prove‑You‑Are‑Safe‑Enough”

Much of secure on-chain AI analytics for DeFi and Web3 will feel like “soft KYC”: you don’t expose your passport, but you prove properties about yourself or your transaction history. Think:

– “This wallet has never touched sanctioned addresses.”

– “This address has maintained a collateral ratio above X over the past year.”

– “The user behind these addresses is in an allowed jurisdiction and not on any watchlist.”

Each of these can be proven with privacy-preserving AI compliance tools that run pattern recognition and risk scoring over encrypted histories and then generate succinct proofs.

This allows:

– Higher leverage for provably reliable users.

– Lower fees for “good actors” measured via private behavioral scores.

– Permissioned pools that remain fully on‑chain while respecting privacy rules.
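A statement such as “this address has maintained a collateral ratio above X over the past year” is, underneath, a predicate over private data. The sketch below evaluates that predicate and shows what a verifier would actually receive: the statement, its truth value, and a salted commitment binding it to one hidden history.

The sketch does not generate a proof. In a ZK deployment, the predicate would be compiled into a circuit, and `holds` would come with a proof that it was evaluated over the committed history. The names and the (collateral, debt) snapshot format are assumptions.

```python
import hashlib
import json
import secrets
from dataclasses import dataclass
from fractions import Fraction
from typing import List, Tuple


@dataclass(frozen=True)
class PublicClaim:
    statement: str   # what the verifier learns
    holds: bool
    commitment: str  # binds the claim to one specific, hidden history


def commit_history(history: List[Tuple[int, int]], salt: bytes) -> str:
    """Salted hash commitment, so the history cannot be brute-forced from the public value."""
    return hashlib.sha256(salt + json.dumps(history).encode()).hexdigest()


def min_collateral_ratio_claim(history: List[Tuple[int, int]], threshold: Fraction,
                               salt: bytes) -> PublicClaim:
    """Check collateral/debt > threshold at every (collateral, debt) snapshot.

    Snapshots with zero debt are treated as trivially safe.
    """
    holds = all(debt == 0 or Fraction(collateral, debt) > threshold
                for collateral, debt in history)
    return PublicClaim(
        statement=f"collateral ratio > {threshold} at all {len(history)} snapshots",
        holds=holds,
        commitment=commit_history(history, salt),
    )


salt = secrets.token_bytes(16)
daily = [(150, 100), (180, 100), (200, 120)]  # private (collateral, debt) snapshots
claim = min_collateral_ratio_claim(daily, Fraction(13, 10), salt)
print(claim.statement, claim.holds)  # only these and claim.commitment are shared
```

`Fraction` avoids floating-point edge cases at the threshold. The same care is needed when the predicate is written as a circuit over integers.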

Institutions: Institutional-Grade Without Sacrificing Secrecy

TradFi players won’t move serious volume if every strategy is one analytics query away from leaking. With robust, privacy-preserving AI analytics on-chain, an institution can:

– Prove to a counterparty that its collateral is clean without revealing exact wallet structures.

– Demonstrate adherence to internal risk policies via ZK attestations generated by on-chain or enclave-based AI models.

– Outsource analytics to specialized providers without those providers ever seeing the raw data in cleartext.

That’s a huge shift from NDAs and walled gardens to cryptographic guarantees.

—

Non‑Obvious Design Patterns and Unconventional Ideas

1. Negative Information Markets

Instead of selling “who this address is”, analytics providers sell who this address is *not*.

For example:

– Not linked to these specific sanctioned entities.

– Not part of these fraud clusters.

– Not under these risk thresholds.

This can be encoded as ZK proofs on-chain: the model runs in a TEE and reveals only set non-membership results. Regulators and protocols get safety guarantees; users keep their graph structure private.
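Set non-membership has a standard construction that such a system could build on:

1. Commit to the sorted blocklist in a Merkle tree with sentinel leaves.
2. Show that the queried address falls strictly between two adjacent leaves.

The sketch below does this in the clear, so it reveals the two neighboring entries. A ZK version would prove the same relations (both inclusion paths, adjacency, and the ordering checks) without disclosing them. The 20-byte address format, the sentinels, and all names are assumptions for illustration.

```python
import bisect
import hashlib
from typing import List, Tuple

LO, HI = b"\x00" * 20, b"\xff" * 20  # sentinels; real addresses must lie strictly between them


def h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def build_levels(leaves: List[bytes]) -> List[List[bytes]]:
    """All tree levels, leaf hashes first; odd levels are padded by duplicating the last node."""
    levels = [[h(b"\x00" + x) for x in leaves]]
    while len(levels[-1]) > 1:
        cur = levels[-1]
        if len(cur) % 2:
            cur.append(cur[-1])
        levels.append([h(b"\x01" + cur[i] + cur[i + 1]) for i in range(0, len(cur), 2)])
    return levels


def prove(levels: List[List[bytes]], index: int) -> List[bytes]:
    path = []
    for cur in levels[:-1]:
        path.append(cur[index ^ 1])
        index //= 2
    return path


def verify(root: bytes, leaf: bytes, index: int, path: List[bytes]) -> bool:
    """Inclusion check that also binds the leaf to the claimed position `index`."""
    acc = h(b"\x00" + leaf)
    for sibling in path:
        acc = h(b"\x01" + (sibling + acc if index & 1 else acc + sibling))
        index //= 2
    return acc == root and index == 0


class SortedSetCommitment:
    def __init__(self, members: List[bytes]):
        self.leaves = [LO] + sorted(set(members)) + [HI]
        self.levels = build_levels(self.leaves)
        self.root = self.levels[-1][0]  # the only value published on-chain

    def prove_absent(self, x: bytes) -> Tuple:
        """Adjacent leaves (lo, hi) with lo < x < hi, plus their positions and Merkle paths."""
        i = bisect.bisect_left(self.leaves, x)
        if self.leaves[i] == x:
            raise ValueError("address is in the set")
        return (self.leaves[i - 1], i - 1, prove(self.levels, i - 1),
                self.leaves[i], i, prove(self.levels, i))


def verify_absent(root: bytes, x: bytes, proof: Tuple) -> bool:
    lo, i, lo_path, hi, j, hi_path = proof
    return (j == i + 1 and lo < x < hi
            and verify(root, lo, i, lo_path) and verify(root, hi, j, hi_path))


blocklist = [bytes.fromhex("11" * 20), bytes.fromhex("55" * 20), bytes.fromhex("99" * 20)]
c = SortedSetCommitment(blocklist)
user = bytes.fromhex("33" * 20)
print(verify_absent(c.root, user, c.prove_absent(user)))  # True
```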

2. Ephemeral Insight Tokens

Imagine a system where every analytics query mints a short‑lived, non-transferable token representing the *right to see* a piece of insight:

– A protocol requests a risk score.

– An enclave computes it and mints a token giving UI access to the result for, say, 30 minutes.

– After expiry, only a hash of the result and a proof remain on-chain.

This prevents “analytics exhaust”: permanent data trails that future adversaries could mine. You get verifiability through the proof, but the human-readable output decays.
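The token lifecycle can be modeled as a small state machine. This minimal in-memory sketch assumes a hypothetical `InsightToken` with the following parts:

- a fixed holder (there is no transfer method, which is what makes it non-transferable);
- an expiry time;
- a result hash and proof that persist after the readable result is dropped.

On a real chain, the hash and proof would live in contract storage, and the plaintext would sit with the enclave or an off-chain store. Dropping a reference in code does not prove that copies made elsewhere were erased.

```python
import hashlib
import time
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class InsightToken:
    """Hypothetical short-lived, non-transferable right to read one analytics result."""
    holder: str
    expires_at: float
    result_hash: str  # persists, e.g. anchored on-chain
    proof: str        # opaque proof blob (placeholder)
    _result: Optional[str] = field(default=None, repr=False)

    def read(self, caller: str, now: float) -> str:
        if caller != self.holder:
            raise PermissionError("token is non-transferable and held by someone else")
        self.expire_if_due(now)
        if self._result is None:
            raise PermissionError("token expired; only result_hash and proof remain")
        return self._result

    def expire_if_due(self, now: float) -> None:
        if now >= self.expires_at:
            self._result = None  # decay: drop the human-readable output


def mint(holder: str, result: str, proof: str, ttl_s: float = 30 * 60,
         now: Optional[float] = None) -> InsightToken:
    now = time.time() if now is None else now
    return InsightToken(holder, now + ttl_s, hashlib.sha256(result.encode()).hexdigest(),
                        proof, result)


t = mint("0xLendingPool", "risk_score=0.82", "proof-bytes", ttl_s=1800, now=1_000.0)
print(t.read("0xLendingPool", now=1_500.0))  # risk_score=0.82
t.expire_if_due(now=2_900.0)
print(t.result_hash, t.proof)                # what remains after expiry
```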

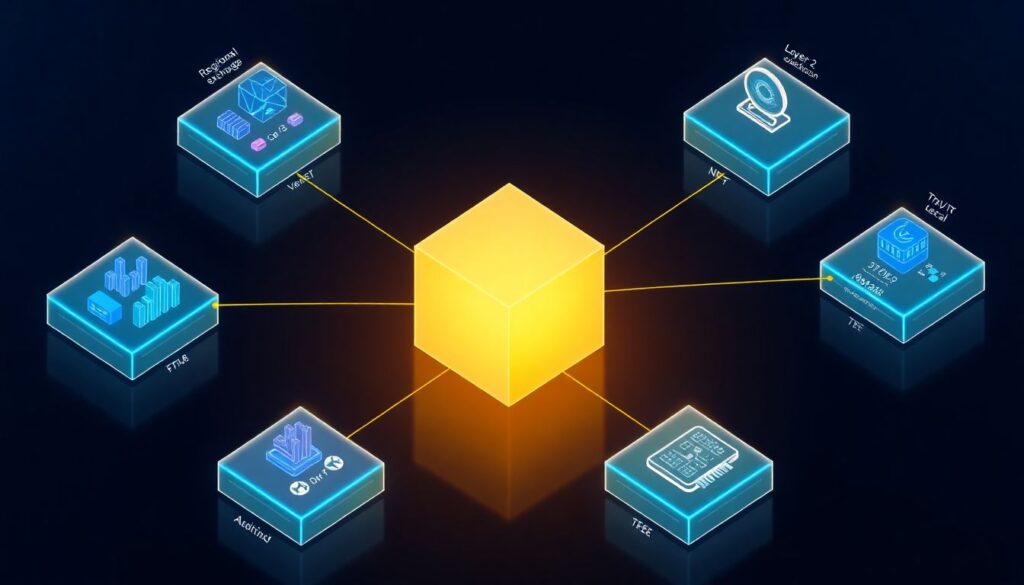

3. Federated On‑Chain Learning

Instead of centralizing all transaction data in one mega‑dataset, you can build a federated network of analytics nodes:

– Each node has partial data (regional exchange, L2, or vertical like NFTs).

– Nodes train local models inside TEEs.

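– Periodically, they aggregate gradients or model deltas into a global model, again secured by confidential computing and ZKPs. These ensure every contribution was provably produced by the agreed training code, which limits (though does not fully prevent) poisoning.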

This yields a global fraud or risk model without any single actor ever holding the full raw dataset. It is a natural fit for jurisdictions that are strict about data localization but still want global AML intelligence.
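The aggregation step can be sketched as FedAvg-style weighted averaging of model deltas. Attestation and proofs can show that each node ran the agreed code. They do not by themselves stop a node from submitting a well-formed but malicious update. Robust aggregators such as the coordinate-wise median are a common complement.

This is a plain-Python sketch with vectors as lists; the function names are illustrative.

```python
from statistics import median
from typing import List, Sequence


def fedavg(updates: Sequence[Sequence[float]], sizes: Sequence[int]) -> List[float]:
    """FedAvg-style aggregation: average deltas weighted by each node's sample count."""
    total = sum(sizes)
    dim = len(updates[0])
    return [sum(u[d] * n for u, n in zip(updates, sizes)) / total for d in range(dim)]


def coordinate_median(updates: Sequence[Sequence[float]]) -> List[float]:
    """Coordinate-wise median: a simple robust aggregator that bounds one outlier's influence."""
    return [median(u[d] for u in updates) for d in range(len(updates[0]))]


honest = [[0.10, -0.20], [0.12, -0.18], [0.09, -0.21]]
poisoned = [50.0, 50.0]
updates = honest + [poisoned]
print(fedavg(updates, [100, 100, 100, 100]))  # dragged far toward the poisoned update
print(coordinate_median(updates))             # stays near the honest updates
```

Inside a TEE, either rule is just code whose measurement can be attested. Choosing a robust rule is a modeling decision that the cryptography does not make for you.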

4. “Explainable‑Enough” Privacy

Regulators don’t just want scores; they want reasoning. The problem: explanations leak data. One possible compromise:

– The model generates an internal explanation graph.

– A second model compresses that graph into high-level “reasons” (e.g., “pattern similar to 95% of known mixer‑exit scams”).

– A ZK proof then confirms that these reasons faithfully reflect the underlying explanation graph, without revealing its full topology.

So you get *explainable‑enough* AI without handing over the whole transaction graph.
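The compression step can be sketched in three moves:

1. Group per-feature attributions (for example, from a SHAP-style explainer) into a handful of coarse reason codes.
2. Reveal only the top few, with bucketed strength.
3. Commit to the full attribution map, so a proof system could later tie the reasons back to it.

The faithfulness proof itself is not shown. The feature names, reason labels, and bucket thresholds below are hypothetical.

```python
import hashlib
import json
from typing import Dict, List, Tuple

# Hypothetical mapping from low-level features to coarse, regulator-facing reasons.
REASON_OF = {
    "hops_from_mixer": "mixer-exit pattern",
    "burst_tx_count": "rapid fan-out",
    "new_counterparties": "rapid fan-out",
    "bridge_volume": "cross-chain layering",
    "wallet_age_days": "account age",
}


def compress_explanation(attributions: Dict[str, float],
                         top_k: int = 2) -> Tuple[List[Tuple[str, str]], str]:
    """Top-k reasons with bucketed strength, plus a commitment to the full attribution map."""
    by_reason: Dict[str, float] = {}
    for feature, weight in attributions.items():
        reason = REASON_OF.get(feature, "other")
        by_reason[reason] = by_reason.get(reason, 0.0) + weight
    total = sum(abs(v) for v in by_reason.values()) or 1.0
    ranked = sorted(by_reason.items(), key=lambda kv: abs(kv[1]), reverse=True)[:top_k]

    def bucket(share: float) -> str:  # coarse buckets leak less than exact numbers
        return "high" if share >= 0.5 else "medium" if share >= 0.2 else "low"

    reasons = [(reason, bucket(abs(v) / total)) for reason, v in ranked]
    # Unsalted for brevity; a real commitment would add a random salt.
    commitment = hashlib.sha256(json.dumps(attributions, sort_keys=True).encode()).hexdigest()
    return reasons, commitment


attr = {"hops_from_mixer": 0.6, "burst_tx_count": 0.15, "new_counterparties": 0.1,
        "bridge_volume": 0.1, "wallet_age_days": -0.05}
reasons, commitment = compress_explanation(attr)
print(reasons)  # only these coarse reasons (and the commitment) leave the enclave
```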

—

Architecture Sketch: Putting It All Together

Layers of a Privacy‑Preserving Analytics Stack

A practical blueprint for an AI-driven on-chain data analytics platform could look like this:

– Data layer

  – On-chain events, logs, and state deltas.

  – Off-chain KYC and sanctions data, encrypted and linked via pseudonymous identifiers.

– Confidential compute layer

  – TEEs executing AI models on the combined data.

  – Attestation proofs anchored on-chain, so anyone can verify that the correct enclave and code version ran.

– ZK verification layer

  – Circuits proving that scores, risk tags, or behavioral metrics were computed correctly without revealing inputs.

– Access and policy layer

  – Smart contracts defining who can request which analytics, under what conditions.

  – DAO‑governed policies that can be updated without overhauling the cryptography.

Each layer is independently upgradable. You can swap in a better model, change a compliance rule, or move from SGX to a new enclave technology without throwing everything away.
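One way to keep the layers independently upgradable is to make each depend only on a narrow interface. The sketch below uses Python `Protocol` types and plain callables. `AnalyticsStack` and the stub components are hypothetical stand-ins for a real data indexer, enclave runtime, proof verifier, and policy contract.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Protocol, Set


class ConfidentialExecutor(Protocol):
    def run(self, model_id: str, inputs: Dict[str, float]) -> Dict[str, str]: ...


class Verifier(Protocol):
    def verify(self, output: Dict[str, str]) -> bool: ...


@dataclass
class AnalyticsStack:
    """Hypothetical wiring of the four layers; each field can be replaced on its own."""
    fetch: Callable[[str], Dict[str, float]]  # data layer: subject -> features
    executor: ConfidentialExecutor            # confidential compute layer
    verifier: Verifier                        # ZK / attestation verification layer
    policy: Callable[[str, str], bool]        # access and policy layer: (requester, model_id) -> allowed?

    def query(self, requester: str, subject: str, model_id: str) -> Dict[str, str]:
        if not self.policy(requester, model_id):
            raise PermissionError(f"{requester} may not run {model_id}")
        output = self.executor.run(model_id, self.fetch(subject))
        if not self.verifier.verify(output):
            raise ValueError("output failed verification")
        return output


# Stub components, for illustration only.
class StubEnclave:
    def run(self, model_id: str, inputs: Dict[str, float]) -> Dict[str, str]:
        return {"model_id": model_id, "score": f"{sum(inputs.values()):.2f}"}


class StubVerifier:
    def __init__(self, trusted_models: Set[str]) -> None:
        self.trusted_models = trusted_models

    def verify(self, output: Dict[str, str]) -> bool:
        return output["model_id"] in self.trusted_models


stack = AnalyticsStack(
    fetch=lambda subject: {"mixer_exposure": 0.25, "velocity": 0.5},
    executor=StubEnclave(),
    verifier=StubVerifier({"risk-v1"}),
    policy=lambda requester, model_id: requester == "lending-dao",
)
print(stack.query("lending-dao", "0xabc", "risk-v1"))
```

Swapping `verifier=` or `executor=` for a new implementation leaves the other three layers untouched, which is the property the blueprint relies on.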

Governance: Who Controls the Models?

The scary scenario is one vendor owning the “truth model” of risk on-chain. To avoid that, protocols can:

– Maintain open‑spec models whose weights are auditable but encrypted in enclaves during execution.

– Allow multiple competing model providers; requesters choose which one to use.

– Token‑govern model upgrades: stakeholders vote on weight changes or new architectures after testing on synthetic and red‑teamed datasets.

This democratizes analytics instead of turning it into a new centralized choke point.
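The token-governed upgrade step reduces to a tally rule. This sketch makes the following assumptions:

- The proposal names a candidate model, for example by the hash of its weights.
- Quorum and approval thresholds are expressed in basis points.
- Balances are snapshotted when the proposal opens, so stake cannot be shuffled between voters mid-vote.

All parameter values and names are hypothetical.

```python
from typing import Dict


def tally_upgrade(votes: Dict[str, bool], balances: Dict[str, int], total_supply: int,
                  quorum_bps: int = 2000, pass_bps: int = 5000) -> bool:
    """Token-weighted vote on a model upgrade (hypothetical parameters).

    `balances` should be a snapshot taken when the proposal opened. The upgrade
    passes if turnout reaches the quorum and yes-stake is strictly more than
    pass_bps of participating stake. Integer math, as a contract would use.
    """
    yes = sum(balances.get(voter, 0) for voter, choice in votes.items() if choice)
    no = sum(balances.get(voter, 0) for voter, choice in votes.items() if not choice)
    turnout = yes + no
    if turnout * 10_000 < total_supply * quorum_bps:
        return False
    return yes * 10_000 > turnout * pass_bps


snapshot = {"fund_a": 400, "dao_b": 300, "user_c": 100}
print(tally_upgrade({"fund_a": True, "dao_b": False}, snapshot, total_supply=1000))
```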

—

Strategic Takeaways for Builders and Policymakers

For Builders

If you’re designing analytics-heavy Web3 products, consider these early:

– Treat privacy as a *design constraint*, not an afterthought.

– Bake in TEEs or ZK‑friendly architectures from day one; retrofitting is painful.

– Separate *what must be provable* from *what must remain unlinkable*. That distinction will shape your contracts and data schemas.

And don’t aim for “perfect privacy” on day one. Aim for measurable privacy improvements with verifiable guarantees, then iterate.

For Regulators and Enterprises

A modern enterprise blockchain analytics solution built on confidential computing gives you something legacy finance never had: cryptographic assurance that obligations are met without bulk data transfers.

You can:

– Request proofs instead of raw logs.

– Audit the models that generate those proofs.

– Set clear, code‑enforced boundaries on what can be learned and retained.

This turns privacy and compliance from opposing forces into code that can be tested, reasoned about, and improved over time.

—

Closing Thought

AI-enabled privacy-preserving analytics on-chain isn’t about hiding bad behavior; it’s about proving good behavior with less collateral damage. The stack of confidential computing, ZK, and specialized models is finally mature enough that we don’t have to choose between “total transparency” and “total opacity”.

The next competitive edge in Web3 won’t just be faster blocks or deeper liquidity; it will be who can extract the most insight from on-chain activity while revealing the least about any individual user.