Why autonomous scaling matters for AI‑enabled blockchains

AI-enabled blockchains are noisy neighbors.

Models spike GPU usage, off-chain workers hammer RPC endpoints, and on-chain contracts start emitting way more events than the original architects expected. If you scale this manually, you’re firefighting all day.

Autonomous platform scaling is about letting the infrastructure watch itself, make decisions, and react in real time—without a human SRE sitting with a pager 24/7. It’s the difference between “we’ll survive the next launch” and “we can run 50 AI workloads on-chain without losing sleep”.

In this article we’ll walk through:

– What “autonomous platform scaling” actually means in the context of AI-enabled blockchains

– How a modern AI blockchain infrastructure scaling solution is usually wired

– Real-world-style case studies from teams that hit scaling walls

– Trade-offs vs more traditional cloud and Web3 setups

I’ll keep the tone informal but the content technical and practical.

—

Core concepts: what are we really scaling?

Heterogeneous workload: chain + AI + glue

When people say AI-enabled blockchain, in practice it’s a stack of very different components:

– Blockchain layer: full nodes, validators, indexers, event listeners

– AI layer: model inference APIs, vector databases, feature stores, GPU workers

– Glue layer: oracles, relayers, bridges, off-chain computation frameworks (e.g., custom rollup sequencers, zk-provers)

Autonomous scaling has to juggle all three at once. It’s not enough to add more nodes: if you scale the chain but not the GPUs that serve on-chain AI calls, your latency explodes and you effectively DoS yourself.

Working definition

Let’s pin down some terms we’ll use consistently:

– Autonomous platform scaling — a control plane that continuously monitors metrics (load, latency, costs, failures) and automatically adjusts resources (nodes, GPUs, storage, bandwidth) based on policies. No manual clicking in dashboards.

– AI-enabled blockchain — any chain or L2 where AI inference, training, or data processing is a first-class citizen, not a side script. Think: AI oracles, on-chain model marketplaces, or smart contracts that delegate logic to ML models.

– Autonomous blockchain scaling platform for AI applications — a full-stack environment (often a specialized PaaS) that ships the chain infra, AI infra and scaling logic as one package.

If your team is gluing together random cloud services by hand, you don’t have an autonomous platform yet—you have a pile of YAML with dreams.

—

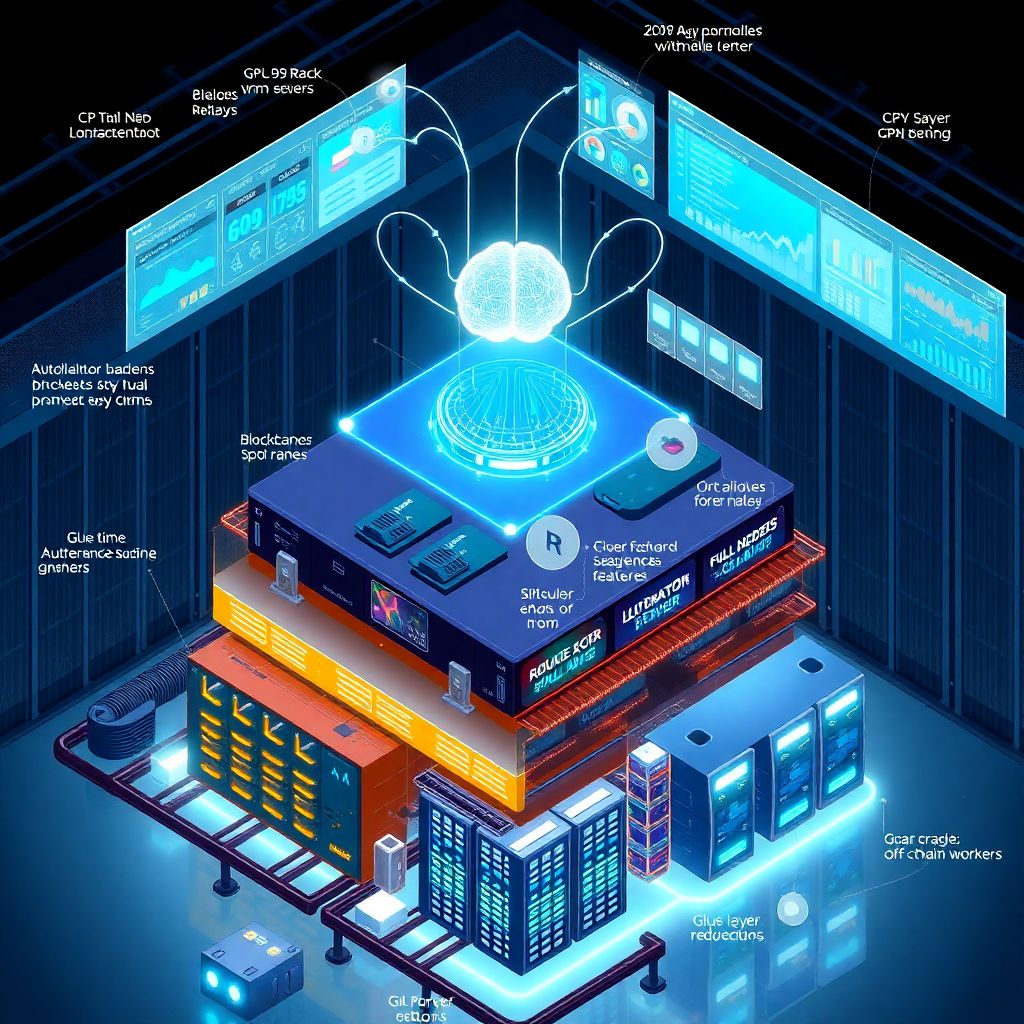

How autonomous scaling typically works (high level)

Text diagram: control loop

Imagine the platform as a control loop:

1. Observe

   – Collect metrics: CPU/GPU usage, memory, latency, queue lengths, block times, mempool size, TPS, gas usage, model error rates.

2. Decide

   – A policy engine evaluates the metrics against SLOs (e.g., “block time < X ms”, “inference latency < 500 ms p95”).

   – It predicts demand using historical data and AI-driven forecasting.

3. Act

   – Scale nodes, GPU instances, and RPC endpoints up or down.

   – Reroute traffic across regions.

   – Reconfigure rollup parameters or off-chain workers.

In “diagram in words” form:

```
                       [Users & Apps]
                              |
                              v
                  [Traffic: tx + AI calls]
                              |
                              v
                   [Telemetry Collectors]
                              |
                              v
                       [Metrics Store]
                              |
                              v
                 [Policy & Forecast Engine]
                              |
                              v
             [Orchestrator & Provisioning Layer]
                              |
        +---------------------+--------------------+
        |                     |                    |
        v                     v                    v
[Blockchain Nodes] [AI Inference Workers] [Storage / Indexers]
```

The AI piece is crucial: the same techniques we use for load prediction in web apps now drive when to add validator nodes or spin up extra GPU shards.
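The observe, decide, act loop can be sketched in a few lines of Python. This is a minimal illustration, not any platform's API: the `Metrics` fields, the pool names, and the thresholds (80% and 30% GPU utilization, a 5,000-transaction mempool limit) are hypothetical. Only the 500 ms p95 target comes from the SLO example above, and the forecasting step is left out.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Metrics:
    """Observe: one snapshot of hypothetical platform signals."""
    gpu_utilization: float   # 0.0-1.0, averaged over the AI worker pool
    inference_p95_ms: float  # p95 latency of AI calls
    mempool_size: int        # pending transactions seen by RPC nodes


@dataclass
class Action:
    pool: str   # hypothetical pool name, e.g. "ai-workers" or "rpc-nodes"
    delta: int  # positive = scale out, negative = scale in


def decide(m: Metrics, inference_slo_ms: float = 500.0,
           mempool_limit: int = 5_000) -> List[Action]:
    """Decide: compare the snapshot with SLOs/thresholds and emit actions."""
    actions: List[Action] = []
    if m.inference_p95_ms > inference_slo_ms or m.gpu_utilization > 0.8:
        actions.append(Action("ai-workers", +2))
    elif m.gpu_utilization < 0.3:
        actions.append(Action("ai-workers", -1))
    if m.mempool_size > mempool_limit:
        actions.append(Action("rpc-nodes", +1))
    return actions


def run_once(observe: Callable[[], Metrics],
             act: Callable[[Action], None]) -> List[Action]:
    """One pass of observe -> decide -> act; a real controller runs this on a timer."""
    actions = decide(observe())
    for action in actions:
        act(action)
    return actions
```

For example, a snapshot with 90% GPU utilization, a 620 ms p95 and a small mempool makes `run_once` return `[Action(pool='ai-workers', delta=2)]` and hand that action to `act`.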

—

Case 1: AI oracle startup that outgrew manual scaling

The situation

A small team ran an AI oracle service: smart contracts submitted requests, and off-chain workers fetched data and ran NLP models to return sentiment scores on-chain.

Their early stack:

– 3–5 RPC nodes behind a basic load balancer

– 4 GPU instances for inference

– Manual cloud scaling using dashboards and scripts

In effect, they were running AI-enabled blockchain hosting for their own product: a private mini-chain plus oracles.

During market spikes, the AI requests tripled in minutes. Engineers tried to scale:

– They added more GPUs reactively (too late).

– They upgraded some nodes instead of adding more, which caused longer restarts.

– p95 latency went from ~300 ms to over 2 seconds, and on-chain timeouts started kicking in.

Moving to an autonomous scaling platform

They didn’t want to become infra experts, so they adopted a managed, scalable AI blockchain platform (platform as a service) from an enterprise AI blockchain infrastructure provider.

Key changes:

– Metrics-first:

  – Integrated a proper metrics stack with per-contract and per-model dashboards.

  – Fed these metrics into an autoscaler that could differentiate between “AI load” and “chain load”.

– Separate scaling domains:

  – AI workers scaled by GPU utilization and inference queue length.

  – RPC nodes scaled by tx throughput and mempool size.

  – Indexers scaled by backlog of unprocessed blocks.

– Predictive bursts:

  – The platform’s policy engine learned typical market-event patterns.

  – During news releases, it preemptively added GPUs and nodes.
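One way to express separate scaling domains is a small table of per-pool policies, each pool sized from its own signal. The sketch below is hypothetical: the pool names, per-replica targets and min/max bounds are invented, and for brevity each pool uses a single signal (queue length, TPS, block backlog) rather than the combinations listed above.

```python
import math
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class PoolPolicy:
    signal: str                # which metric sizes this pool
    per_replica_target: float  # how much of that signal one replica should absorb
    min_replicas: int
    max_replicas: int


# Hypothetical numbers; each pool is sized independently of the others.
POLICIES: Dict[str, PoolPolicy] = {
    "ai-workers": PoolPolicy("inference_queue_length", 20, min_replicas=4, max_replicas=64),
    "rpc-nodes": PoolPolicy("tx_per_second", 300, min_replicas=3, max_replicas=20),
    "indexers": PoolPolicy("unprocessed_blocks", 50, min_replicas=1, max_replicas=10),
}


def plan(observed: Dict[str, float]) -> Dict[str, int]:
    """Map each pool's observed signal to a replica count, clamped to its bounds."""
    result: Dict[str, int] = {}
    for pool, value in observed.items():
        policy = POLICIES[pool]
        wanted = math.ceil(value / policy.per_replica_target)
        result[pool] = max(policy.min_replicas, min(policy.max_replicas, wanted))
    return result
```

With a queue of 900 AI requests, 450 TPS and no indexing backlog, `plan` returns 45 AI workers, 3 RPC nodes and 1 indexer: an AI surge grows only the GPU pool, which is the point of decoupling the domains.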

After rollout:

– p95 inference latency stabilized under 400 ms, even during 5× traffic spikes.

– Engineers stopped doing manual “scale-up” during every major announcement.

– Costs were optimized by aggressively scaling down overnight in low-traffic regions.

The big win: no heroics required from the infra team; the autonomous scaling platform did most of the heavy lifting.

—

Core building blocks of autonomous scaling

1. Metrics and signals (the observability layer)

Autonomy lives and dies with observability. At minimum you need:

– Node-level:

  – CPU, memory, disk I/O, network

  – Block time, peer count, orphaned blocks

– Transaction-level:

  – TPS, gas usage, mempool length, failed tx ratio

– AI-level:

  – GPU utilization, VRAM usage

  – Inference queue length, p95/p99 latency

  – Model-specific error rates or timeouts

Useful extra signals:

– On-chain events that imply upcoming AI load (e.g., a specific contract emits “BatchPredictRequested”)

– Off-chain business signals (marketing campaigns, scheduled launches)

Without these, your scaling logic is blind and just guesses.
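On-chain events can act as a leading indicator. The sketch below assumes the events have already been decoded into plain dicts with hypothetical `event` and `batch_size` fields (fetching and decoding logs depends on your client library). It turns announced batch work into a number of AI workers to pre-warm. The per-worker throughput and drain horizon are placeholders.

```python
import math
from typing import Iterable, Mapping


def expected_ai_jobs(events: Iterable[Mapping], event_name: str = "BatchPredictRequested") -> int:
    """Sum the (hypothetical) batch_size field of matching decoded events."""
    return sum(int(e.get("batch_size", 1)) for e in events if e.get("event") == event_name)


def workers_to_prewarm(pending_jobs: int, jobs_per_worker_per_min: float, horizon_min: float) -> int:
    """Workers needed so the announced jobs can be drained within `horizon_min` minutes."""
    if pending_jobs <= 0:
        return 0
    return math.ceil(pending_jobs / (jobs_per_worker_per_min * horizon_min))


events = [
    {"event": "BatchPredictRequested", "batch_size": 400},
    {"event": "Transfer"},
    {"event": "BatchPredictRequested", "batch_size": 200},
]
jobs = expected_ai_jobs(events)  # 600 announced predictions
extra = workers_to_prewarm(jobs, jobs_per_worker_per_min=30, horizon_min=5)
print(jobs, extra)  # 600 4
```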

2. Policy engine and decision logic

The policy engine transforms raw metrics into actions.

Typical policies:

– Threshold-based

  – “If GPU utilization > 70% for 5 minutes and queue length > 100, add 2 workers.”

  – “If block time > 2× target for 10 minutes, add a validator / sequencer node.”

– SLO-driven

  – “Keep on-chain AI calls under 500 ms p95 in region X.”

  – “Keep RPC error rate below 0.5%.”

– Predictive

  – Use time-series models to forecast the next 15–60 minutes of load.

  – Pre-scale before big events instead of reacting after users feel pain.
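Threshold rules like the two above need a “for N minutes” clause, so the engine has to track how long a condition has held. The sketch below is minimal. It assumes metrics arrive as dicts with hypothetical keys (`gpu_util`, `queue_len`, `block_time_s`) and a hypothetical 2-second target block time. It reads the GPU rule as requiring both conditions to hold for the full window. Timestamps are passed in explicitly so the logic is easy to test.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional

Sample = Dict[str, float]


@dataclass
class SustainedRule:
    """Fires `action` once a condition has held continuously for `hold_seconds`."""
    name: str
    condition: Callable[[Sample], bool]
    hold_seconds: float
    action: str
    _since: Optional[float] = field(default=None, init=False, repr=False)

    def evaluate(self, now: float, sample: Sample) -> Optional[str]:
        if not self.condition(sample):
            self._since = None  # condition broke: restart the clock
            return None
        if self._since is None:
            self._since = now   # condition just became true
        if now - self._since >= self.hold_seconds:
            self._since = None  # fire once, then require a fresh window
            return self.action
        return None


TARGET_BLOCK_TIME_S = 2.0  # hypothetical target block time

RULES: List[SustainedRule] = [
    # GPU utilization > 70% and queue length > 100 for 5 minutes -> add 2 workers
    SustainedRule("gpu-hot",
                  lambda s: s["gpu_util"] > 0.70 and s["queue_len"] > 100,
                  hold_seconds=5 * 60, action="ai-workers +2"),
    # block time > 2x target for 10 minutes -> add a sequencer node
    SustainedRule("slow-blocks",
                  lambda s: s["block_time_s"] > 2 * TARGET_BLOCK_TIME_S,
                  hold_seconds=10 * 60, action="sequencer +1"),
]
```

Suppose the rules get one sample per minute showing 85% GPU utilization and a queue of 150. The `gpu-hot` rule then fires once, at the five-minute mark, and needs a fresh five-minute window before it fires again. Resetting the clock whenever the condition breaks keeps short blips from triggering a scale-out.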

Where AI actually helps:

– Forecasting workloads based on historical usage and external signals.

– Detecting anomalies (sudden, suspicious traffic) and distinguishing DDoS from legitimate spikes.
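For the forecasting half, even a classical time-series method goes a long way. The sketch below uses Holt's linear exponential smoothing (level plus trend) as a stand-in for whatever model a platform actually uses. It adds a simple z-score outlier check and converts a forecast into a worker count. The smoothing factors, the 20% headroom, the z-score threshold and per-worker throughput are all assumptions to tune. A z-score only flags unusual traffic; telling a DDoS apart from a legitimate spike needs more signals than this.

```python
import math
import statistics
from typing import Sequence


def holt_forecast(series: Sequence[float], horizon: int,
                  alpha: float = 0.5, beta: float = 0.3) -> float:
    """Holt's linear exponential smoothing: forecast `horizon` steps ahead."""
    if len(series) < 2:
        raise ValueError("need at least two observations")
    level, trend = series[0], series[1] - series[0]
    for y in series[1:]:
        prev_level = level
        level = alpha * y + (1 - alpha) * (level + trend)
        trend = beta * (level - prev_level) + (1 - beta) * trend
    return level + horizon * trend


def is_anomalous(history: Sequence[float], latest: float, z_threshold: float = 4.0) -> bool:
    """Flag `latest` if it sits more than `z_threshold` standard deviations from history."""
    mean = statistics.fmean(history)
    stdev = statistics.pstdev(history)
    if stdev == 0:
        return latest != mean
    return abs(latest - mean) / stdev > z_threshold


def workers_needed(forecast_rps: float, rps_per_worker: float, headroom: float = 1.2) -> int:
    """Capacity to provision now for the forecast load, with a safety margin."""
    return max(1, math.ceil(forecast_rps * headroom / rps_per_worker))
```

Suppose request rates of 100, 110, 120 and 130 req/s are sampled once a minute. They forecast 160 req/s three minutes out. At an assumed 25 req/s per worker with 20% headroom, `workers_needed` returns 8, so the capacity can be requested before the load arrives.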

—

Case 2: L2 rollup with embedded AI features

The situation

A rollup team built an L2 that allowed smart contracts to call AI models directly via precompiles. Internally:

– On-chain contracts issued “AI call” instructions.

– A specialized off-chain executor read those calls, routed them to GPU clusters, and posted results back.

They initially treated this like any other rollup: just add more sequencers and provers when traffic grows. That worked until a popular game launched:

– Users spammed in-game AI assistants for hints and generated content.

– The L2 sequencer kept up—but the AI workers didn’t.

– Result: high “AI call pending” counts, user-facing lag, complaints.

Autonomous scaling redesign

They re-architected their operational stack as a mini autonomous blockchain scaling platform for AI applications:

– Decoupled scaling axes

  – Sequencers scaled horizontally based on TPS and block size.

  – AI worker pools scaled on AI-specific metrics like inference queue depth and active sessions.

– Circuit breakers and backpressure

  – If AI infrastructure was overloaded:

    – Contracts got a clean “temporarily unavailable” status code.

    – Oracles paused accepting new AI-intensive jobs rather than letting queues explode.

– Data gravity-aware placement

  – AI workers were deployed closer (network-wise) to the rollup batch poster to reduce round-trip times.

  – The policy engine considered both GPU usage and network latency before shifting workloads.
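The backpressure behavior can be sketched as an admission gate with two watermarks. This is an illustration under assumptions, not the team's implementation: `AIAdmission`, the watermark values and the status names are hypothetical, and a real system would surface the rejection to the calling contract as an error code.

```python
from collections import deque
from enum import Enum


class Status(Enum):
    ACCEPTED = "accepted"
    TEMPORARILY_UNAVAILABLE = "temporarily_unavailable"


class AIAdmission:
    """Hypothetical gate in front of the AI worker pool.

    Starts shedding new AI-intensive jobs once `high_watermark` jobs are
    pending, and only accepts again after the queue drains to
    `low_watermark`, so it does not flap on every job.
    """

    def __init__(self, high_watermark: int, low_watermark: int) -> None:
        if not 0 <= low_watermark < high_watermark:
            raise ValueError("need 0 <= low_watermark < high_watermark")
        self.high = high_watermark
        self.low = low_watermark
        self.pending: deque = deque()
        self.shedding = False

    def submit(self, job_id: str) -> Status:
        if self.shedding:
            return Status.TEMPORARILY_UNAVAILABLE
        self.pending.append(job_id)
        if len(self.pending) >= self.high:
            self.shedding = True
        return Status.ACCEPTED

    def complete_one(self) -> str:
        """Called when a worker finishes the oldest pending job."""
        job_id = self.pending.popleft()
        if self.shedding and len(self.pending) <= self.low:
            self.shedding = False
        return job_id
```

The gap between the two watermarks is hysteresis. Without it, the gate would toggle between accepting and rejecting on every completed job near the limit.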

Result: when another AI-heavy dApp launched, the system scaled both rollup infra and AI GPUs in sync, keeping UX smooth.

—

Architecture in more detail: a mental blueprint

Components you’ll usually see

A robust setup tends to include:

– Control plane

  – Policy engine (rules, SLOs, forecasts)

  – Orchestrators (Kubernetes, Nomad, custom schedulers)

  – Provisioning adapters (cloud APIs, bare-metal automation)

– Data plane

  – Blockchain nodes (full/archival, validators, sequencers, RPC)

  – AI workers (inference servers, feature pipelines, vector DBs)

  – Indexers and ETL pipelines

  – Caches and routing layers (API gateways, RPC balancers)

– Observability stack

  – Metrics collector (Prometheus, OpenTelemetry, custom agents)

  – Logs and traces

  – Alerting (still useful even if scaling is autonomous)

In text diagram form:

```
                    [Policy Engine]
                           |
             +-------------+-------------+
             |                           |
    [Infra Orchestrator]          [Routing Layer]
             |                           |
   +---------+------------+--------------+
   |                      |              |
[Blockchain Cluster] [AI Cluster] [Indexers & DBs]
   |                      |
[RPC / Sequencer] [Inference APIs]
```

The autonomous bit is that once your SLOs and policies are defined, the policy engine controls everything downstream with minimal human intervention.

—

Case 3: Enterprise consortium chain with ML compliance checks

The situation

A consortium of financial institutions ran a permissioned chain.

Every transaction required ML-based compliance and fraud checks before being finalized on-chain.

Their first iteration:

– Compliance ML service as a single cluster.

– Fixed number of validation nodes.

– Heavy reliance on scheduled updates and maintenance windows.

Whenever a new regulatory rule came in, they:

1. Redeployed models.

2. Manually increased capacity for a few days “just in case”.

3. Then forgot to scale back down.

Costs spiraled. Meanwhile, transaction latency during quarterly reporting seasons still broke SLAs.

Turning compliance into an autonomously scaled AI service

Because this was a heavily regulated environment, they worked with an external enterprise AI blockchain infrastructure provider that specialized in privacy, logging, and auditability.

Autonomous scaling changes included:

– Per-rule metrics and routing

  – Different compliance rules mapped to different ML pipelines.

  – Each pipeline scaled independently based on its own traffic and complexity.

– Cost-aware scaling

  – Models with high GPU requirements were only scaled up when necessary.

  – Certain low-risk checks fell back to cheaper CPU-based heuristics under extreme load.

– Capacity reservations for peak windows

  – The policy engine was fed a calendar of known peak periods (e.g., end of quarter).

  – It pre-allocated capacity but automatically released unused resources afterward.
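Two of these policies fit in a short sketch: the CPU fallback for low-risk checks and calendar-driven reservations. The function names, risk labels, queue-depth threshold and dates below are hypothetical. They illustrate the idea and are not the consortium's actual configuration.

```python
from datetime import datetime
from typing import List, Tuple

# (start, end, GPU replicas to reserve) for a known peak, e.g. quarter-end reporting.
PeakWindow = Tuple[datetime, datetime, int]


def gpu_floor(now: datetime, calendar: List[PeakWindow], baseline: int) -> int:
    """Minimum GPU replicas: raised inside a peak window, back to baseline afterwards,
    so reserved capacity is released automatically instead of being forgotten."""
    floor = baseline
    for start, end, reserved in calendar:
        if start <= now < end:
            floor = max(floor, reserved)
    return floor


def route_check(risk: str, gpu_queue_depth: int, overload_depth: int) -> str:
    """Send low-risk checks to a cheaper CPU heuristic only while the GPU pool is overloaded."""
    if risk == "low" and gpu_queue_depth >= overload_depth:
        return "cpu-heuristic"
    return "gpu-model"


QUARTER_END = [(datetime(2024, 3, 25), datetime(2024, 4, 2), 16)]  # illustrative dates
```

With a baseline of 4, `gpu_floor` returns 16 inside the illustrative quarter-end window. On 3 April it is back to 4 without anyone remembering to scale down, which avoids the “forgot to scale back down” problem from the first iteration.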

Outcomes:

– Transaction SLA (sub-second average) was met even under 3× peak load.

– Compliance team had full audit logs of why and when capacity changed.

– Ops team finally had predictable, explainable cloud bills.

—

How this compares to more “traditional” approaches

Versus manual DevOps on generic cloud

Many teams start with:

– Handwritten Terraform / Helm charts

– Manual “scale this deployment” buttons in cloud UIs

– Ad-hoc scripts to add nodes or GPUs

That’s fine until:

– You have multiple dApps with very different load patterns.

– AI workloads get spiky, unpredictable, and cross-region.

– Business requirements add strict SLAs.

Where an autonomous platform wins:

– Consistency — scaling decisions are policy-driven and repeatable.

– Speed — reaction times in seconds or minutes instead of hours.

– Focus — core devs work on features, not infra babysitting.

Versus generic PaaS (non-blockchain, non‑AI focused)

A generic PaaS can autoscale web services. But for AI-enabled blockchains, you need:

– Awareness of blockchain semantics (block times, mempool, gas, forks).

– Handling of GPU-heavy AI workloads and data locality.

– Knowledge that some operations are finality-critical (e.g., sequencer must not be starved) while others are “best-effort” (AI hints in a game).

A domain-specific platform knows the difference between:

– “RPC endpoint for a block explorer” (okay to slow down slightly)

– “AI service used for consensus-critical validation” (must never fall over)
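When GPU capacity is short, that distinction can be encoded as a priority order. The sketch below is minimal. It assumes each workload declares whether it is finality-critical. The workload names and GPU counts are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Demand:
    workload: str
    gpus_needed: int
    critical: bool  # finality/consensus-critical (True) or best-effort (False)


def allocate(demands: List[Demand], gpus_available: int) -> Dict[str, int]:
    """Grant critical workloads first; best-effort ones share whatever is left.

    sorted() is stable, so request order is preserved within each class.
    """
    grants: Dict[str, int] = {}
    remaining = gpus_available
    for d in sorted(demands, key=lambda d: not d.critical):
        granted = min(d.gpus_needed, remaining)
        grants[d.workload] = granted
        remaining -= granted
    return grants


demands = [
    Demand("game-ai-hints", gpus_needed=10, critical=False),
    Demand("consensus-validation", gpus_needed=6, critical=True),
    Demand("game-content-gen", gpus_needed=4, critical=False),
]
print(allocate(demands, gpus_available=12))
# {'consensus-validation': 6, 'game-ai-hints': 6, 'game-content-gen': 0}
```

The consensus-critical workload is served in full even though it was requested second, while the best-effort game features absorb the shortage.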

—

Practical tips if you’re designing your own

Start with clear SLOs

Before writing scaling rules, define:

– Max acceptable latency for:

  – On-chain transactions

  – Off-chain AI calls

– Maximum error rates

– Cost ceilings per period

Without hard numbers you’ll either massively overprovision or accept silent performance degradation.
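Writing the targets down as data makes them checkable by the policy engine. In this sketch, the 500 ms AI-call target and the 0.5% error rate echo examples from earlier in the article. The transaction-latency target and the monthly cost ceiling are placeholders, and the field names are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class SLOs:
    tx_p95_ms: float         # on-chain transaction latency target
    ai_call_p95_ms: float    # off-chain AI call latency target
    max_error_rate: float    # fraction: 0.005 == 0.5%
    monthly_cost_cap: float  # spend ceiling for the billing period


def violations(slo: SLOs, tx_p95_ms: float, ai_p95_ms: float,
               error_rate: float, month_to_date_cost: float) -> List[str]:
    """Return the names of the SLOs the current measurements break."""
    broken = []
    if tx_p95_ms > slo.tx_p95_ms:
        broken.append("tx_latency")
    if ai_p95_ms > slo.ai_call_p95_ms:
        broken.append("ai_latency")
    if error_rate > slo.max_error_rate:
        broken.append("error_rate")
    if month_to_date_cost > slo.monthly_cost_cap:
        broken.append("cost")
    return broken


TARGETS = SLOs(tx_p95_ms=2_000, ai_call_p95_ms=500, max_error_rate=0.005, monthly_cost_cap=50_000)
```

Keeping the cost ceiling next to the latency targets lets a scaling policy check both before it adds capacity.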

Separate scaling dimensions

Avoid single knobs like “scale everything by 2×”. Instead, treat:

– Validators / sequencers

– RPC nodes

– AI inferencers

– Indexers / ETL

as separate pools with their own metrics and policies.

Use dry runs and guarded rollout

Autonomous doesn’t mean reckless. Implement:

– Safety limits (max nodes, max GPUs, region caps)

– Dry-run mode (log proposed actions before applying)

– Canary regions or clusters for new scaling rules
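The three safeguards combine naturally in one gate that every scaling decision passes through. This is a sketch under assumptions: `Guardrails`, `apply_decision`, the region names and the limits are hypothetical, and `execute` stands in for whatever call actually provisions capacity.

```python
import logging
from dataclasses import dataclass
from typing import Callable, Dict, FrozenSet

log = logging.getLogger("autoscaler")


@dataclass(frozen=True)
class Guardrails:
    max_replicas: Dict[str, int]                # hard cap per pool
    max_step: int                               # largest change allowed per decision
    dry_run: bool = True                        # log instead of acting
    live_regions: FrozenSet[str] = frozenset()  # canary regions where rules may act


def apply_decision(region: str, pool: str, current: int, proposed: int,
                   g: Guardrails, execute: Callable[[str, str, int], None]) -> int:
    """Clamp a proposed replica count, then execute it or only log it."""
    step = max(-g.max_step, min(g.max_step, proposed - current))
    target = max(0, min(g.max_replicas[pool], current + step))
    if g.dry_run or region not in g.live_regions:
        log.info("dry run: %s/%s %d -> %d (proposed %d)", region, pool, current, target, proposed)
    else:
        execute(region, pool, target)
    return target
```

For example, with a cap of 32 AI workers and a maximum step of 4, a proposal to jump from 10 to 50 becomes 14. It is only executed in a canary region and only logged everywhere else. The dry-run messages are emitted at INFO level, so logging has to be configured to see them.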

—

When to adopt an autonomous platform vs building in-house

You probably want a specialized platform or provider when:

– Your product’s main value is AI or blockchain logic, not infra wizardry.

– You’re dealing with multi-region deployments and regulatory requirements.

– Customers demand strict SLAs, but your team is small.

Building this from scratch can be justified if:

– Infra excellence is a differentiator for you (e.g., you are the infra company).

– You need highly custom control over hardware, regions, or regulation.

– You have a dedicated SRE/infra team with deep AI + blockchain experience.

Even then, many such teams start from a base PaaS and extend it instead of reinventing every wheel.

—

Closing thoughts

Autonomous platform scaling for AI-enabled blockchains is less about fancy buzzwords and more about brutally practical concerns:

– Don’t wake the SRE at 3 a.m. because a game went viral.

– Don’t lose users because your on-chain AI took 5 seconds to respond.

– Don’t blow your budget with overprovisioned GPUs.

Treat your blockchain, AI workloads, and data pipelines as one living system.

Instrument it properly, set clear SLOs, and let an autonomous control plane make 90% of the scaling decisions for you.

Then your engineers can focus on what actually matters: better models, smarter contracts, and products users care about—while the platform quietly keeps the lights on and the chain humming.