Why decentralized AI governance suddenly matters

When people talk about AI today, they usually mean accuracy, speed and shiny demos. Governance and privacy sound boring until something leaks, a model hallucinates about real users, or a regulator asks, “Who approved this system?” Decentralized AI governance for privacy-preserving systems tries to solve exactly that problem: how to control powerful models without handing all the keys, and all the data, to one company. Instead of a single owner, multiple actors share decision-making, and technical safeguards make it hard for anyone to quietly break the rules or spy on sensitive data.

Step 1: Grasp the core idea of decentralized AI governance

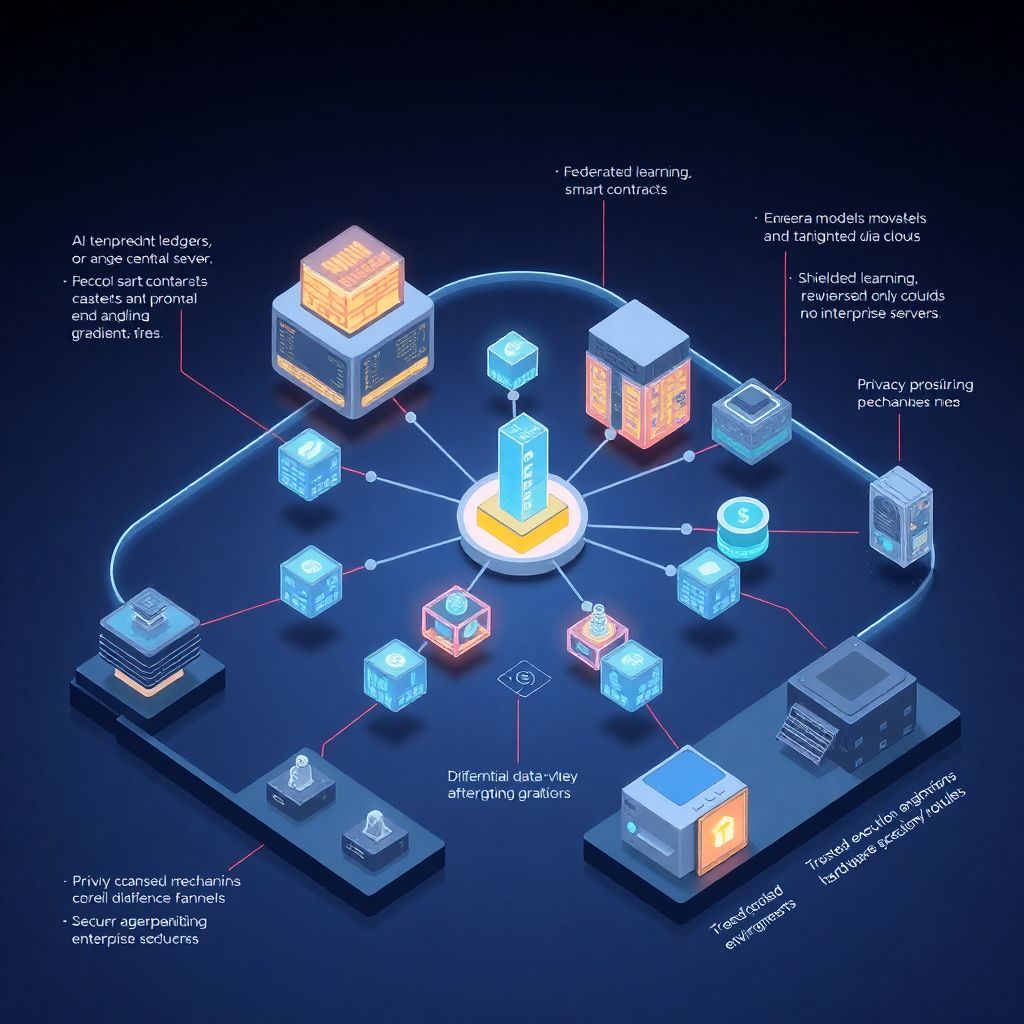

Start with a simple picture: traditional AI governance is like a central bank; decentralized AI governance is more like a cooperative with strong rules coded into the infrastructure. Policies about data usage, model updates, audit rights and access are defined collectively and executed automatically where possible. Modern decentralized AI governance platforms combine cryptography, smart contracts and transparent logs so that every change to a model or dataset is traceable. For beginners, the key mental shift is this: you're not just "using an AI model," you're joining a shared governance process in which technical and human rules are tightly coupled.
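To make "every change is traceable" concrete, here is a minimal Python sketch of a hash-chained change log, assuming a simple in-memory list. The class `ChangeLog` and its fields are hypothetical, not any platform's API. Each entry commits to the hash of the previous one, so silently editing history breaks verification.

```python
import hashlib
import json
from dataclasses import dataclass, field


@dataclass
class ChangeLog:
    """Hypothetical append-only log: each entry commits to the previous entry's hash."""
    entries: list = field(default_factory=list)

    @staticmethod
    def _digest(body: dict) -> str:
        # Canonical JSON (sorted keys) so the same body always hashes the same way.
        return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()

    def append(self, actor: str, action: str, target: str) -> str:
        prev_hash = self.entries[-1]["hash"] if self.entries else "0" * 64
        body = {"actor": actor, "action": action, "target": target, "prev": prev_hash}
        digest = self._digest(body)
        self.entries.append({**body, "hash": digest})
        return digest

    def verify(self) -> bool:
        prev_hash = "0" * 64
        for entry in self.entries:
            body = {k: entry[k] for k in ("actor", "action", "target", "prev")}
            # Each entry must point at its predecessor and match its own recomputed hash.
            if entry["prev"] != prev_hash or entry["hash"] != self._digest(body):
                return False
            prev_hash = entry["hash"]
        return True


log = ChangeLog()
log.append("alice", "update_model", "recommender:v2")
log.append("bob", "add_dataset", "clicks-2024-q1")
print(log.verify())  # True
log.entries[0]["actor"] = "mallory"  # silent edit of history
print(log.verify())  # False
```

On its own, a chain like this only catches someone who does not recompute every later hash. Publishing the latest hash to parties who do not trust each other (or anchoring it on a blockchain) is what makes a full rewrite of history visible.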

Step 2: Connect governance with privacy instead of treating them separately

A common rookie mistake is to treat privacy and governance as two independent checkboxes. In reality, most privacy failures are governance failures in disguise: no clear rules about who can train on what, who can override safeguards, or how to review risky deployments. For example, privacy-preserving AI solutions for enterprises only work if governance mechanisms can enforce policies across teams, vendors and cloud regions. Think of governance as the nervous system that tells every component (storage, training, inference, monitoring) how to behave with personal or sensitive data, and what happens if something goes off script.
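One way to turn "who can train on what" into something enforceable is a deny-by-default policy check that every team, vendor and region goes through. The sketch below is a minimal illustration under assumed names: `Policy`, `Request`, `check` and the purpose/region labels are hypothetical, not a specific product's schema.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Policy:
    dataset: str
    allowed_purposes: frozenset
    allowed_regions: frozenset
    allowed_orgs: frozenset


@dataclass(frozen=True)
class Request:
    org: str       # team or vendor making the request
    dataset: str
    purpose: str   # e.g. "training", "inference", "analytics"
    region: str    # cloud region where processing would run


def check(request: Request, policies: dict) -> tuple:
    """Return (allowed, reason). Deny by default when no policy is registered."""
    policy = policies.get(request.dataset)
    if policy is None:
        return False, "no policy registered for dataset"
    if request.org not in policy.allowed_orgs:
        return False, f"org {request.org!r} not authorized"
    if request.purpose not in policy.allowed_purposes:
        return False, f"purpose {request.purpose!r} not permitted"
    if request.region not in policy.allowed_regions:
        return False, f"region {request.region!r} not permitted"
    return True, "ok"


policies = {
    "patient-records": Policy(
        dataset="patient-records",
        allowed_purposes=frozenset({"inference"}),
        allowed_regions=frozenset({"eu-west"}),
        allowed_orgs=frozenset({"clinical-ml"}),
    )
}
print(check(Request("clinical-ml", "patient-records", "training", "eu-west"), policies))
# (False, "purpose 'training' not permitted")
```

Returning a reason alongside the decision matters for governance: denials can be logged and reviewed rather than just silently failing.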

Step 3: Use blockchain where it adds trust, not as a buzzword

There's a lot of hype around blockchain-based AI governance tools, and experts are blunt about it: a chain won't fix sloppy thinking. The real benefit appears when you need tamper-evident logs of who changed which model, what data was used, and what the approval pathway looked like. Smart contracts can encode rules such as, "No new training run on health data unless at least three independent stakeholders sign off." However, storing raw data or full models on-chain is a serious performance and privacy trap, because anything written to a chain is replicated to every node and is practically impossible to delete. The chain should track decisions and proofs, while encrypted data and models live in more conventional secure storage.
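The three-sign-off rule can be sketched as contract-style logic. This is plain Python standing in for a smart contract, with assumed names (`ApprovalRegistry`, `TrainingProposal`) and an assumed definition of "independent" (approvers from distinct organizations). Only a SHA-256 digest of the off-chain training config is recorded; no raw data or model weights.

```python
import hashlib
from dataclasses import dataclass, field


@dataclass
class TrainingProposal:
    config_digest: str   # hash of the off-chain training config; no raw data here
    data_category: str   # e.g. "health", "marketing"
    approvals: dict = field(default_factory=dict)  # stakeholder -> organization


class ApprovalRegistry:
    """Hypothetical contract-style rules: who may approve, and how many must agree."""

    THRESHOLDS = {"health": 3}  # assumed policy; other categories default to 1

    def __init__(self, stakeholders: dict):
        self.stakeholders = stakeholders  # stakeholder id -> organization
        self.proposals = {}

    def propose(self, config_bytes: bytes, data_category: str) -> str:
        digest = hashlib.sha256(config_bytes).hexdigest()
        self.proposals[digest] = TrainingProposal(digest, data_category)
        return digest

    def approve(self, digest: str, stakeholder: str) -> None:
        if stakeholder not in self.stakeholders:
            raise PermissionError(f"{stakeholder} is not a registered stakeholder")
        self.proposals[digest].approvals[stakeholder] = self.stakeholders[stakeholder]

    def may_start(self, digest: str) -> bool:
        proposal = self.proposals[digest]
        needed = self.THRESHOLDS.get(proposal.data_category, 1)
        # Count distinct organizations, so two approvers from one org count once.
        return len(set(proposal.approvals.values())) >= needed


registry = ApprovalRegistry({"ana": "hospital-a", "ben": "hospital-a",
                             "chen": "ethics-board", "dia": "patient-council"})
d = registry.propose(b'{"model": "triage-v3", "epochs": 5}', "health")
for person in ("ana", "ben", "chen"):
    registry.approve(d, person)
print(registry.may_start(d))  # False: only two independent organizations
registry.approve(d, "dia")
print(registry.may_start(d))  # True
```

Whether "independent" should mean distinct organizations, distinct roles, or something else is itself a governance decision; the sketch just makes that choice explicit and checkable.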

Step 4: Build on federated and privacy‑preserving learning

Instead of pulling all data into a single warehouse, privacy-preserving AI services built on federated learning send the model to the data. Devices or local servers train on their own slices, and only gradients or model updates flow back, often protected with techniques like secure aggregation or differential privacy. Experts insist this is not magic: metadata, model updates and side channels can still leak information if you're careless. Strong governance requires rules about how often clients can send updates, how outliers are handled, and when a client that behaves strangely is quarantined or excluded. Otherwise, attackers can poison the model or reconstruct sensitive patterns over time.
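The governance rules above (quarantine strange clients, limit any one client's influence) can be sketched as an aggregation step. This is a minimal illustration, not a production algorithm: the median-based outlier rule, the `outlier_factor` and `clip_norm` parameters and the optional Gaussian noise (in the style of DP federated averaging, without formal privacy accounting) are all assumptions. It also assumes the aggregator sees individual updates in the clear; with secure aggregation, per-client checks like this are harder to apply.

```python
import math
import random
import statistics


def l2_norm(vector):
    return math.sqrt(sum(x * x for x in vector))


def aggregate(updates, clip_norm=1.0, outlier_factor=3.0, noise_std=0.0, seed=None):
    """Average client updates after quarantining outliers and clipping the rest.

    updates: dict of client id -> list[float], all the same length.
    Returns (averaged update, sorted list of quarantined client ids).
    """
    norms = {cid: l2_norm(u) for cid, u in updates.items()}
    median_norm = statistics.median(norms.values())
    # Assumed governance rule: exclude clients whose update is far larger than typical.
    quarantined = sorted(cid for cid, n in norms.items() if n > outlier_factor * median_norm)
    kept = [cid for cid in updates if cid not in quarantined]
    if not kept:
        raise ValueError("every client was quarantined")

    dim = len(next(iter(updates.values())))
    total = [0.0] * dim
    for cid in kept:
        # Clip each update to clip_norm so no single client dominates the average.
        scale = min(1.0, clip_norm / norms[cid]) if norms[cid] > 0 else 1.0
        for i, x in enumerate(updates[cid]):
            total[i] += x * scale

    rng = random.Random(seed)
    # Optional Gaussian noise on the summed update; calibrating noise_std to a
    # formal privacy budget is out of scope for this sketch.
    averaged = [(t + rng.gauss(0.0, noise_std)) / len(kept) for t in total]
    return averaged, quarantined


updates = {"a": [0.1, 0.2], "b": [0.2, 0.1], "c": [0.15, 0.15], "evil": [50.0, -50.0]}
avg, bad = aggregate(updates, clip_norm=1.0)
print(bad)  # ['evil']
```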

Step 5: Design a secure decentralized AI infrastructure for data privacy

Once you understand the building blocks, you can think about architecture. A secure decentralized AI infrastructure for data privacy typically combines hardware security modules or trusted execution environments, encrypted communication between all nodes, access control based on roles and verifiable identities, and audit layers that log every sensitive action. Newcomers often underestimate the boring pieces: key management, backup strategies and revocation of compromised credentials. Yet this is where many breaches happen. The expert tip here is to treat every operational decision as a governance decision: if someone can silently bypass a control during an outage, that's a governance bug, not just a technical workaround.
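A minimal Python sketch of role-based access with revocation and an audit trail might look like the following. The roles, the `break_glass_reason` parameter and the HMAC-signed credential format are assumptions for illustration; a shared HMAC key stands in for the public-key certificates or signed tokens a real deployment would use, and that key would live in an HSM or key management service, not in code. The point of the design is that an emergency override is possible but never silent.

```python
import hashlib
import hmac
import time

SIGNING_KEY = b"demo-only-key"  # assumption: in practice held in an HSM/KMS, never in code

ROLE_PERMISSIONS = {
    "data-steward": {"read_dataset", "grant_access"},
    "ml-engineer": {"read_features", "start_training"},
    "auditor": {"read_audit_log"},
}


def _sign(payload: str) -> str:
    return hmac.new(SIGNING_KEY, payload.encode(), hashlib.sha256).hexdigest()


def issue_credential(subject: str, role: str) -> str:
    payload = f"{subject}|{role}"
    return f"{payload}|{_sign(payload)}"


class AccessController:
    def __init__(self):
        self.revoked = set()  # subjects whose credentials were compromised
        self.audit_log = []   # every decision is recorded, allowed or not

    def authorize(self, credential: str, action: str, break_glass_reason: str = "") -> bool:
        subject, role, sig = credential.rsplit("|", 2)
        if not hmac.compare_digest(sig, _sign(f"{subject}|{role}")):
            allowed, why = False, "bad signature"
        elif subject in self.revoked:
            allowed, why = False, "credential revoked"
        elif action in ROLE_PERMISSIONS.get(role, set()):
            allowed, why = True, "role permits action"
        elif break_glass_reason:
            # Emergency override is possible, but it is logged with a reason for later review.
            allowed, why = True, f"BREAK-GLASS: {break_glass_reason}"
        else:
            allowed, why = False, "role lacks permission"
        self.audit_log.append({"ts": time.time(), "subject": subject, "action": action,
                               "allowed": allowed, "why": why})
        return allowed


ac = AccessController()
eng = issue_credential("lee", "ml-engineer")
print(ac.authorize(eng, "start_training"))  # True
print(ac.authorize(eng, "read_dataset"))    # False
ac.revoked.add("lee")
print(ac.authorize(eng, "start_training"))  # False
print(len(ac.audit_log))                    # 3
```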

Step 6: Define on‑chain and off‑chain governance roles

Decentralization does not mean anarchy. You still need stewards, reviewers and incident responders, but their powers and incentives are made explicit. Many decentralized AI governance platforms separate "on-chain" roles (who can vote, propose updates, or change parameters) from "off-chain" responsibilities like red-team evaluations and legal compliance. Experienced practitioners recommend starting with a simple model: a small council with rotating seats, a clear conflict-of-interest policy, and mandatory publication of rationales for major decisions. As the system matures, voting weights can diversify, but the habit of explaining and documenting choices should be in place from day one.
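The "simple model" above (rotating seats, conflict-of-interest recusal, mandatory rationale) is small enough to express directly. The following Python sketch assumes round-robin rotation and a simple majority of non-conflicted seats; the function names and the majority rule are hypothetical choices, not a standard.

```python
from dataclasses import dataclass


def seated_council(members, seats, term):
    """Round-robin rotation: term 0 seats members[0:seats], term 1 shifts by `seats`, etc."""
    start = (term * seats) % len(members)
    return [members[(start + i) % len(members)] for i in range(seats)]


@dataclass
class Proposal:
    title: str
    rationale: str          # published with the decision; empty is rejected
    conflicted: frozenset   # council members who declared a conflict of interest


def decide(proposal, council, votes):
    """votes: member -> True/False. Returns 'approved', 'rejected' or an error string."""
    if not proposal.rationale.strip():
        return "invalid: a published rationale is mandatory"
    eligible = [m for m in council if m not in proposal.conflicted]
    yes = sum(1 for m in eligible if votes.get(m) is True)
    # Simple majority of eligible (non-conflicted) seats; abstentions count against.
    return "approved" if yes * 2 > len(eligible) else "rejected"


members = ["ana", "ben", "chen", "dia", "eli"]
council = seated_council(members, seats=3, term=1)
print(council)  # ['dia', 'eli', 'ana']
p = Proposal("Switch to model v3", "Validation loss plateaued for three runs.", frozenset({"eli"}))
print(decide(p, council, {"dia": True, "eli": True, "ana": False}))  # rejected
```

In the usage example, the conflicted member's "yes" vote is simply not counted, which leaves a 1-1 split among eligible seats and therefore no majority.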

Step 7: Avoid the classic governance and privacy pitfalls

Certain errors repeat in almost every early-stage project. Teams fall in love with cryptography and forget threat modeling, assuming attackers behave politely. Governance tokens get concentrated in the hands of a few insiders, making "decentralization" mostly cosmetic. Privacy policies are written once and never encoded into the technical stack, so engineers bypass them under deadline pressure. Experts warn especially against logging too much: verbose logs that include raw inputs or debugging dumps can quietly undermine even advanced privacy-preserving AI solutions for enterprises. The fix is disciplined minimization: log only what you need for accountability and incident response, and protect those logs like production data.
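Disciplined minimization is easiest to enforce with an allowlist rather than a denylist, so that any new field stays out of the logs by default. This Python sketch assumes a hypothetical field list and replaces raw user IDs with a keyed hash (HMAC) so responders can still correlate events for one user. Note that pseudonymized identifiers can still count as personal data under some regulations, so the resulting logs still need protection.

```python
import hashlib
import hmac

PSEUDONYM_KEY = b"rotate-me"  # assumption: a secret stored separately from the logs

# Allowlist: only fields needed for accountability and incident response.
ALLOWED_FIELDS = {"event", "model_version", "decision", "latency_ms"}


def pseudonymize(user_id: str) -> str:
    # Keyed hash lets responders link events for one user without storing the raw ID.
    return hmac.new(PSEUDONYM_KEY, user_id.encode(), hashlib.sha256).hexdigest()[:16]


def minimize(record: dict) -> dict:
    """Drop everything not on the allowlist; swap user_id for a pseudonym."""
    safe = {k: v for k, v in record.items() if k in ALLOWED_FIELDS}
    if "user_id" in record:
        safe["user_ref"] = pseudonymize(record["user_id"])
    return safe


raw = {"event": "inference", "user_id": "u-123", "prompt": "my diagnosis is...",
       "model_version": "v7", "decision": "allowed", "debug_dump": "<tensor bytes>"}
print(sorted(minimize(raw)))  # ['decision', 'event', 'model_version', 'user_ref']
```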

Step 8: Practical tips for newcomers building real systems

If you're just starting, don't aim for a perfect, global network. Begin with a small consortium (perhaps a few departments or partner organizations) and pilot a limited set of blockchain-based AI governance tools around a single use case, such as approving model updates for a recommendation system that uses customer data. Introduce privacy-preserving techniques incrementally: first strict access control, then encrypted storage, then optional differential privacy on specific metrics. Bring privacy engineers, security specialists and domain experts into governance discussions early; leaving it to "the AI team" alone is an invitation to blind spots. Treat every pilot as a learning lab, with explicit post-mortems and transparent reports.
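"Optional differential privacy on specific metrics" can start as small as the Laplace mechanism applied to a published count. The Python sketch below assumes a count query, where adding or removing one person changes the result by at most 1 (sensitivity 1). Noise is drawn from Laplace(0, sensitivity / epsilon) using the fact that the difference of two independent exponentials with the same mean is Laplace-distributed. The function names are hypothetical, and a real deployment would also track the total privacy budget spent across queries.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    # The difference of two i.i.d. exponentials with mean `scale` is Laplace(0, scale).
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(true_count: int, epsilon: float, rng: random.Random,
                  sensitivity: float = 1.0) -> float:
    """Laplace mechanism for a count: smaller epsilon means more noise and more privacy."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    return true_count + laplace_noise(sensitivity / epsilon, rng)


rng = random.Random(7)
# Near 1042; the exact value depends on the seed.
print(round(private_count(1042, epsilon=0.5, rng=rng)))
```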

Step 9: What experienced experts quietly prioritize

People who've shipped privacy-sensitive AI at scale often focus less on fancy algorithms and more on incentives and clarity. They ask: who has the power to say "no," and is that power real or symbolic? How are whistleblowers protected if they spot misuse? Are participants rewarded for reporting anomalies rather than hiding them? Seasoned architects of federated, privacy-preserving AI services emphasize human-understandable rules: if stakeholders can't explain in plain language when data will be used, by whom, and how it's protected, something is wrong. The technology should strengthen trust, not demand blind faith in cryptographic jargon.
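The plain-language test can even be made mechanical: if a policy record cannot fill in "what, when, by whom, how protected," treat it as a defect. This small Python sketch uses a hypothetical `DataUsePolicy` record with exactly those four fields; it checks only that each answer exists, not that it is honest or accurate, which still needs human review.

```python
from dataclasses import dataclass, fields


@dataclass
class DataUsePolicy:
    data: str        # what data
    used_when: str   # when it will be used
    used_by: str     # by whom
    protection: str  # how it is protected

    def explain(self) -> str:
        missing = [f.name for f in fields(self) if not getattr(self, f.name).strip()]
        if missing:
            # A policy nobody can explain is treated as a defect, not a formality.
            raise ValueError(f"policy cannot be explained; missing: {', '.join(missing)}")
        return (f"We use {self.data} {self.used_when}. It is accessed by {self.used_by} "
                f"and protected by {self.protection}.")


p = DataUsePolicy("purchase history", "only to retrain the recommender once a month",
                  "the recommendations team", "encryption at rest and a 3-of-5 approval rule")
print(p.explain())
```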

Step 10: Planning for regulation and long‑term resilience

Regulators worldwide are converging on the idea that AI needs clear accountability trails and strong privacy guarantees. Decentralized AI governance gives you a head start, because auditability and shared control are built in instead of patched on later. Still, systems age: keys get outdated, cryptographic assumptions change, new attacks appear. Robust governance frameworks include planned upgrade paths, sunset clauses for risky components, and clear criteria for when a model must be retrained, retired or segmented away from sensitive data. Treat your governance rules as living code and living policy, evolving alongside the models they’re meant to keep honest.
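Sunset clauses and retirement criteria become enforceable when they are recorded as data and reviewed on a schedule. The Python sketch below assumes a hypothetical `Component` record, an illustrative list of deprecated primitives, and an assumed accuracy-drop threshold as the retraining trigger; real deprecation schedules come from standards bodies and your own risk reviews.

```python
from dataclasses import dataclass
from datetime import date

# Illustrative only; maintain the real list from current standards guidance.
DEPRECATED_ALGORITHMS = {"sha1", "rsa-1024"}


@dataclass
class Component:
    name: str
    algorithm: str               # cryptographic primitive the component depends on
    sunset: date                 # sunset clause agreed when it was deployed
    touches_sensitive_data: bool


def review(component: Component, today: date,
           accuracy_drop: float = 0.0, max_drop: float = 0.05) -> str:
    """Return the action a periodic governance review would require (assumed rules)."""
    if component.algorithm in DEPRECATED_ALGORITHMS:
        return "upgrade-crypto"
    if today >= component.sunset:
        # Past its sunset clause: retire it, or segment it away from sensitive data.
        return "retire-or-segment" if component.touches_sensitive_data else "re-approve"
    if accuracy_drop > max_drop:
        return "retrain"
    return "ok"


c = Component("triage-model", "sha256", date(2025, 6, 30), True)
print(review(c, date(2025, 1, 1)))  # ok
print(review(c, date(2025, 7, 1)))  # retire-or-segment
```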