Why smart contracts suddenly matter for healthcare data in 2025

If you work anywhere near healthcare data, you’ve probably noticed: in 2025, everyone wants AI, everyone fears leaks, and everyone is tired of “pilot projects” that never scale.

Between aggressive AI rollouts, stricter regulators, and burned-out IT teams, the old “just sign another BAA and send us the data” model is collapsing. That’s where smart contracts for healthcare data sharing are quietly moving from conference slides to production systems.

Let’s walk through what’s actually happening right now — without the hype — and where smart contracts + AI really make sense (and where they don’t).

—

The core problem: AI needs data, hospitals need control

Hospitals, insurers, and research centers sit on petabytes of data that AI models crave: imaging, lab results, EHR timelines, claims histories, genomics.

But three stubborn blockers keep coming up:

1. Legal risk: Data sharing agreements are slow, ambiguous, and often outdated by the time lawyers sign them.

2. Operational chaos: Every partner has a different API, data format, and security posture. Integration teams are drowning.

3. Trust gap: Nobody really knows *who* used *which* data *for what* once it leaves their perimeter.

By 2025, simple encryption and access control lists aren’t enough. Regulators now expect traceability, explainability, and provable policy enforcement, especially around AI. That’s the niche where smart contracts for healthcare data sharing start to look like more than a buzzword.

—

What smart contracts actually do in this context (no fluff)

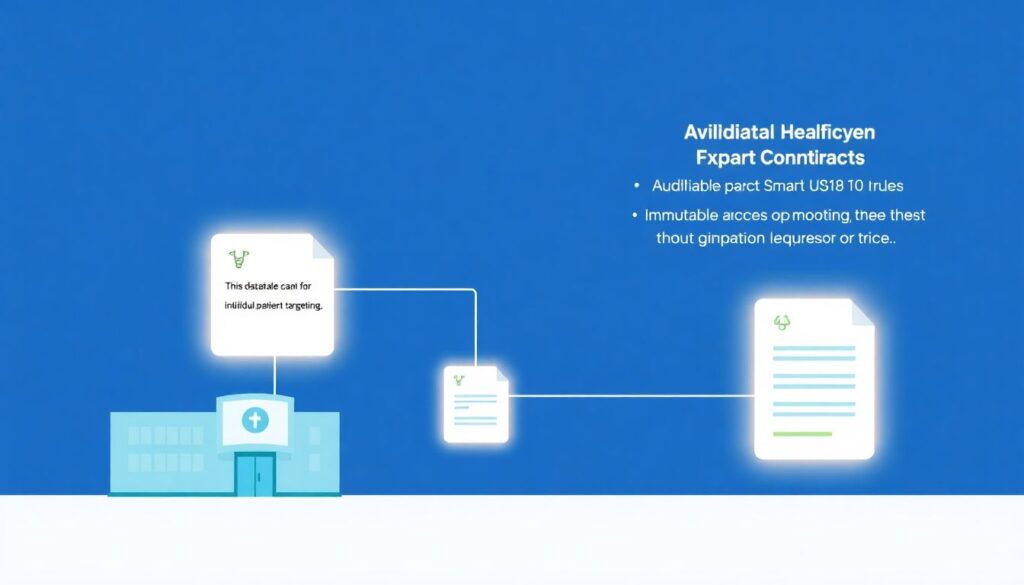

Forget the crypto noise for a second. In healthcare, smart contracts are mostly being used as:

– Automated policy engines: “This dataset can be used for model training, but not individual patient targeting.”

– Auditable access logs: Immutable records of which organization accessed which dataset and under what legal basis.

– Conditional consent managers: If a patient withdraws consent, the contract can automatically revoke access for future AI training runs.

In other words, a smart contract platform for healthcare data interoperability doesn’t store entire medical records on-chain (you really don’t want that). It stores:

– References / hashes of datasets

– Permissions and constraints

– Usage events

– Sometimes payment or incentive rules

The actual sensitive data stays in secure, off-chain environments, but behavior around that data is governed and tracked via blockchain logic.
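To make the split concrete, here is a minimal Python sketch of what such an on-chain entry might hold. Everything in it (`DatasetRecord`, `fingerprint`, the field names, the sample bytes) is a hypothetical illustration, not the schema of any particular platform.

```python
import hashlib
from dataclasses import dataclass, field


def fingerprint(data: bytes) -> str:
    """Return the SHA-256 hex digest that stands in for the dataset on-chain."""
    return hashlib.sha256(data).hexdigest()


@dataclass
class DatasetRecord:
    """Hypothetical on-chain entry: a reference to the data, never the data itself."""
    dataset_hash: str                 # fingerprint of the off-chain dataset
    owner: str                        # organization that registered it
    allowed_purposes: frozenset       # permissions and constraints
    usage_events: list = field(default_factory=list)  # append-only usage log

    def record_usage(self, requester: str, purpose: str) -> None:
        self.usage_events.append({"requester": requester, "purpose": purpose})


# The raw bytes stay off-chain; only the digest goes into the record.
raw_export = b"placeholder bytes for an off-chain imaging export"
record = DatasetRecord(
    dataset_hash=fingerprint(raw_export),
    owner="hospital-a",
    allowed_purposes=frozenset({"research"}),
)
record.record_usage("vendor-x", "research")
```

Anyone holding the off-chain file can recompute the digest and compare it with `record.dataset_hash`, which is what makes the reference verifiable without exposing the data.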

—

Real-world use cases that actually made it to production

1. AI radiology collaborations without the VPN nightmare

One European hospital network (we’ll skip names, but the model is real) wanted to train shared AI models for CT and MRI anomaly detection with three vendors and two universities. Their issues:

– Different countries, different privacy laws

– No one wanted to expose internal networks

– Manual logging of data access was a compliance disaster

They deployed a blockchain-based platform for sharing healthcare data with AI teams, where:

1. Each imaging dataset was registered via a smart contract with:

– Allowed purposes (research vs clinical vs commercial)

– Retention time

– De-identification status

2. AI training jobs had to request access through the contract.

3. The contract validated:

– Is the requester an approved participant?

– Is the declared purpose allowed for this dataset?

– Have retention and geographic rules been respected?

4. Every access was immutably logged. Auditors got near real-time dashboards instead of Excel exports six months later.

Short-term result: less time arguing with legal, more time actually training models. Long-term: their radiology department now has a provable history of how data contributed to each model, which is gold for AI accountability.
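The validation step in that workflow can be sketched roughly like this. It is an illustration in plain Python rather than real contract code, and the names (`Dataset`, `AccessRequest`, `APPROVED_PARTICIPANTS`, `check_access`) and sample values are assumptions made up for the example.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Dataset:
    dataset_id: str
    allowed_purposes: frozenset
    retain_until: date
    allowed_regions: frozenset


@dataclass(frozen=True)
class AccessRequest:
    requester: str
    dataset_id: str
    purpose: str
    compute_region: str
    on: date


APPROVED_PARTICIPANTS = {"vendor-a", "university-b"}  # hypothetical allow-list
audit_log = []  # stand-in for the immutable on-chain log


def check_access(req: AccessRequest, ds: Dataset) -> tuple[bool, str]:
    """Mirror the checks above: participant, purpose, retention, geography."""
    if req.requester not in APPROVED_PARTICIPANTS:
        return False, "requester is not an approved participant"
    if req.purpose not in ds.allowed_purposes:
        return False, "purpose not allowed for this dataset"
    if req.on > ds.retain_until:
        return False, "retention period has expired"
    if req.compute_region not in ds.allowed_regions:
        return False, "compute region not permitted"
    return True, "granted"


def request_access(req: AccessRequest, ds: Dataset) -> bool:
    """Log every decision, granted or not, then return it."""
    granted, reason = check_access(req, ds)
    audit_log.append((req.requester, req.dataset_id, req.purpose, granted, reason))
    return granted


ct_scans = Dataset("ct-2024", frozenset({"research"}), date(2026, 12, 31), frozenset({"eu"}))
ok = request_access(AccessRequest("vendor-a", "ct-2024", "research", "eu", date(2025, 3, 12)), ct_scans)
denied = request_access(AccessRequest("vendor-a", "ct-2024", "commercial", "eu", date(2025, 3, 12)), ct_scans)
```

Logging denials as well as grants is a deliberate choice here: an auditor usually wants to see what was attempted, not only what succeeded.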

—

2. Outcome-based payments for AI-assisted chronic care

Another live trend in 2025: value-based contracts for AI tools in chronic disease management.

An insurer, a primary care group, and an AI startup set up a shared registry for diabetic patients using an app. The twist: the AI vendor only gets full payment if A1c levels drop below a certain threshold for a defined patient cohort.

They used blockchain smart contracts to:

– Link *de-identified* patient outcome metrics to contract logic

– Automatically calculate whether performance targets were met

– Trigger partial or full payouts based on verifiable data, not PowerPoint promises

No claims data went on-chain, but hashed aggregates and signed proofs did. That was enough to automate payment and reduce the usual disputes about “your data vs our data.”
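As a rough sketch of that payout logic: the thresholds, the 50% partial tier, and the function names below are invented for illustration, since the actual contract terms aren’t described here.

```python
import hashlib
import json


def aggregate_commitment(aggregate: dict) -> str:
    """Hash a canonical JSON form of the aggregate so both sides can verify it."""
    canonical = json.dumps(aggregate, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def payout(aggregate: dict, contract_value: float,
           full_at: float = 0.60, partial_at: float = 0.40,
           partial_fraction: float = 0.5) -> float:
    """Pay in full, in part, or not at all based on the share of the cohort at target.

    All thresholds are hypothetical defaults, not terms from a real agreement.
    """
    share = aggregate["patients_at_target"] / aggregate["cohort_size"]
    if share >= full_at:
        return contract_value
    if share >= partial_at:
        return contract_value * partial_fraction
    return 0.0


# De-identified aggregate only: no patient-level rows are involved.
quarter = {"cohort_size": 200, "patients_at_target": 90}
commitment = aggregate_commitment(quarter)   # what would be anchored on-chain
amount = payout(quarter, 100_000.0)          # 45% at target, so the partial tier applies
```

Because the commitment is computed over a canonical serialization, the insurer and the vendor can each hash their own copy of the aggregate and confirm they are arguing about the same numbers.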

—

3. Patient-driven research consortia

Patients in rare-disease communities have become data-literate and impatient. They don’t want to “donate” their data once and lose control forever.

Some consortia are experimenting with smart contracts where:

– Patients opt in via a portal that connects to an off-chain consent registry.

– The smart contract:

  – Tracks which trials and AI studies can use that person’s de-identified data

  – Enforces revocation: new AI training jobs must check the current consent state

  – Optionally routes micro-incentives or access to trial results back to participants

The twist here is political, not just technical: sponsors can prove they honored withdrawal of consent, even when multiple CROs and AI vendors are involved.
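The revocation rule is easy to picture in code. This is a minimal sketch assuming an append-only event list where the latest event wins; `ConsentRegistry` and its methods are hypothetical names, not an existing API.

```python
from datetime import datetime, timezone


class ConsentRegistry:
    """Append-only consent events; the most recent event per patient and study wins."""

    def __init__(self):
        self._events = []  # (timestamp, patient_id, study_id, granted)

    def set_consent(self, patient_id, study_id, granted, at=None):
        at = at or datetime.now(timezone.utc)
        self._events.append((at, patient_id, study_id, granted))

    def is_allowed(self, patient_id, study_id):
        """No recorded event means no consent."""
        state = False
        for _, pid, sid, granted in self._events:
            if pid == patient_id and sid == study_id:
                state = granted
        return state

    def eligible(self, patient_ids, study_id):
        """What a new training job calls before it touches any data."""
        return [p for p in patient_ids if self.is_allowed(p, study_id)]

    def history(self, patient_id):
        """Evidence trail: every grant and withdrawal for one participant."""
        return [e for e in self._events if e[1] == patient_id]


registry = ConsentRegistry()
registry.set_consent("p1", "study-7", True)
registry.set_consent("p2", "study-7", True)
registry.set_consent("p1", "study-7", False)   # p1 withdraws
cohort = registry.eligible(["p1", "p2", "p3"], "study-7")
```

Keeping the withdrawal as a new event, rather than deleting the original grant, is what lets a sponsor later show when consent changed and that subsequent jobs respected it.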

—

Non-obvious design decisions that separate pilots from platforms

Many early projects failed not because of the tech, but because they modeled reality too simplistically. By 2025, a few “hard lessons” have crystallized.

1. Don’t encode law; encode *interpreted policy*

Regulations like HIPAA or GDPR are dense, evolving, and subject to interpretation. Trying to hard-code “HIPAA rules” directly into a smart contract is a recipe for rigidity.

Teams that succeed do something else:

– Governance committees define operational policies:

  – “De-identified data from US patients may be used for commercial AI training if BAA + consent type X are in place.”

– Smart contracts then enforce *those* policies, not the full legal text.

– When interpretations change, they upgrade the policy layer, not the entire blockchain.

If you hear someone promise “fully automated compliance on-chain,” that’s usually a red flag. In practice, we’re automating *controlled parts* of the compliance workflow.

—

2. Multi-layer identity is mandatory

Early on, some projects made the mistake of tying everything to a single organizational identity: “Hospital A,” “Vendor B.”

Modern architectures distinguish at least three layers:

1. Organization (the legal entity)

2. System (EHR instance, AI training cluster, data lake)

3. Process or job (specific ETL, training run, query)

Smart contracts then grant permissions not to “Hospital A” in general, but to a specific type of job run by Hospital A’s certified infrastructure. This is crucial once you start giving external AI vendors controlled access to run computations in your environment.
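Here is one way the three layers could be modeled, as a hedged sketch. The `Principal` type, the `GRANTS` set, and every identifier in it are made up for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Principal:
    organization: str   # the legal entity
    system: str         # e.g. a certified training cluster
    job_type: str       # e.g. "model-training"


# Hypothetical grant table: permissions attach to the full triple, not to the org alone.
GRANTS = {
    ("dataset-ct-2024", Principal("hospital-a", "training-cluster-1", "model-training")),
}


def is_permitted(dataset_id: str, principal: Principal) -> bool:
    return (dataset_id, principal) in GRANTS


training = Principal("hospital-a", "training-cluster-1", "model-training")
adhoc = Principal("hospital-a", "training-cluster-1", "ad-hoc-query")
```

With this shape, `is_permitted("dataset-ct-2024", training)` succeeds while the same organization running `adhoc` on the same cluster is refused, which is the whole point of not granting rights to "Hospital A" in general.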

—

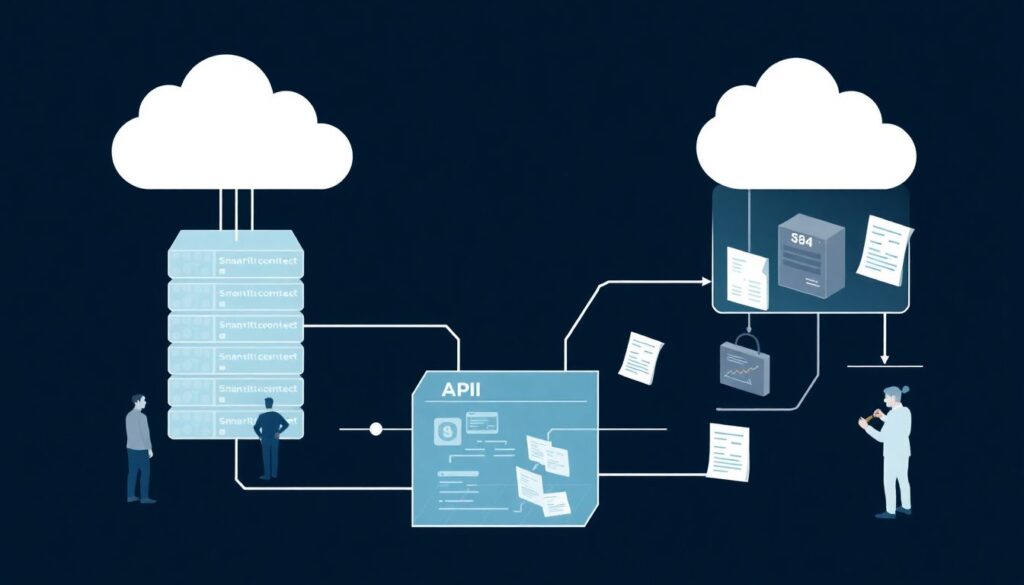

3. Off-chain computation with on-chain commitments

Running heavy AI or analytics directly on-chain is impractical and unnecessary. The more pragmatic pattern in 2025:

– Compute runs in a secure enclave / container / confidential VM.

– At the end of a job, it:

  – Produces a signed report of which datasets were accessed

  – Optionally produces a cryptographic proof (e.g., ZK-based for especially sensitive contexts)

  – Submits a summary and hash of logs to the smart contract

– The smart contract:

  – Verifies constraints (allowed purpose, time windows, cohorts)

  – Records a tamper-evident footprint of the job

This balances performance, cost, and auditability. You’re not uploading gigabytes of logs to a chain, but you do anchor evidence that logs haven’t been quietly altered.
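A stripped-down sketch of the commit-and-verify pattern might look like this. It only covers the hash commitment and a purpose check; signatures and ZK proofs are left out, and `JobLedger`, `digest_logs`, and the sample log lines are hypothetical.

```python
import hashlib


def digest_logs(log_lines):
    """SHA-256 over the log lines, each terminated by a newline."""
    h = hashlib.sha256()
    for line in log_lines:
        h.update(line.encode("utf-8"))
        h.update(b"\n")
    return h.hexdigest()


class JobLedger:
    """Stand-in for the contract: checks constraints, then stores a small footprint."""

    def __init__(self, allowed_purposes):
        self.allowed_purposes = allowed_purposes  # dataset_id -> set of purposes
        self.commitments = {}

    def submit(self, job_id, purpose, datasets, log_hash):
        for dataset_id in datasets:
            if purpose not in self.allowed_purposes.get(dataset_id, set()):
                raise PermissionError(f"{purpose!r} not allowed for {dataset_id}")
        self.commitments[job_id] = {
            "purpose": purpose,
            "datasets": tuple(datasets),
            "log_hash": log_hash,
        }

    def logs_match(self, job_id, log_lines):
        """Later audit: do the logs presented today match what was anchored?"""
        return self.commitments[job_id]["log_hash"] == digest_logs(log_lines)


# Off-chain: the job runs and keeps its full logs locally.
logs = ["open dataset-y", "train epoch 1", "close dataset-y"]
ledger = JobLedger({"dataset-y": {"model-retraining"}})
ledger.submit("job-5678", "model-retraining", ["dataset-y"], digest_logs(logs))
```

Only the 64-character digest and a few metadata fields reach the ledger; if anyone later edits or drops a log line, `logs_match` returns `False`.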

—

Where smart contracts are *not* a silver bullet

Before going all-in on a HIPAA-compliant smart contract initiative for healthcare, it’s worth being blunt about what these systems don’t fix:

– Bad input data: If your source systems are messy, duplicative, or inaccurate, smart contracts just make your bad data more traceable, not more useful.

– Broken consent capture: If front-line workflows aren’t capturing the right consents with the right granularity, no contract will magically invent them.

– Organizational politics: Territorial fights over “data ownership” don’t disappear; you just get better tooling to formalize agreements.

This is why many successful programs pair smart contract deployments with boring but vital projects: data governance councils, terminology harmonization, clinical documentation clean-up, and staff training.

—

Alternative approaches: do you really need blockchain here?

Smart contracts are one way to solve the policy + audit problem, but they’re not the only path. In 2025, you’ll usually see three competing patterns.

1. Centralized policy engines with audit trails

Many large hospital systems use centralized authorization servers or API gateways that enforce policies and log access. They’re:

– Easier to deploy initially

– Familiar to existing IT teams

– Often cheaper in the short term

Downside: they require trust in a single operator and are harder to extend across independent organizations that don’t fully trust each other.

—

2. Federated learning without explicit data sharing

Some organizations sidestep the whole “sharing raw data” conversation by moving the AI training to the data sources instead:

– Each hospital keeps data on-prem.

– A central coordinator sends models to train locally.

– Only gradients or model updates come back.

Federated learning can reduce legal and psychological friction, but it still needs robust access control, consent checks, and logging. Interestingly, smart contracts are now being combined with federated learning to manage *who* can trigger training rounds, *under what consent umbrella*, and *with which model versions*.
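For intuition, here is a toy sketch of a gated federated averaging round. The model is a single weight fitted to `y ≈ w * x`, the data is synthetic, and `AUTHORIZED_TRIGGERS` is a hypothetical stand-in for whatever the contract would actually check.

```python
AUTHORIZED_TRIGGERS = {"coordinator-1"}  # hypothetical: who may start a round


def local_update(w, data, lr=0.01):
    """One least-squares gradient step on local data; raw rows never leave the site."""
    grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
    return w - lr * grad, len(data)


def federated_round(requester, w, sites):
    """Collect one update per site and average them, weighted by sample count."""
    if requester not in AUTHORIZED_TRIGGERS:
        raise PermissionError("requester may not trigger training rounds")
    updates = [local_update(w, data) for data in sites.values()]
    total = sum(n for _, n in updates)
    return sum(w_i * n for w_i, n in updates) / total


# Synthetic data following y = 2x, split across two sites.
sites = {
    "hospital-a": [(1.0, 2.0), (2.0, 4.0)],
    "hospital-b": [(3.0, 6.0)],
}
w = 0.0
for _ in range(200):
    w = federated_round("coordinator-1", w, sites)
```

The coordinator only ever sees updated weights and sample counts, and the permission check runs before any site does work. A real deployment would also pin the model version and consent scope per round.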

—

3. Data clean rooms and privacy-preserving computation

Another alternative is the “clean room” model:

– Data from multiple parties is loaded into a neutral, controlled environment.

– Only approved aggregate outputs can exit.

– Technologies like differential privacy, secure multi-party computation, and homomorphic encryption are often involved.

Again, these tools answer a different part of the puzzle: *can we compute on sensitive data safely?* Smart contracts answer: *under what rules, and who is accountable?* The strongest platforms now combine both.
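The "only approved aggregates can exit" rule can be illustrated with something much simpler than the techniques listed above: small-cell suppression. To be clear, this sketch is not differential privacy or secure computation, and the `MIN_CELL_SIZE` value is an arbitrary assumption.

```python
from collections import Counter

MIN_CELL_SIZE = 10  # hypothetical threshold set by the clean room's governance


def release_counts(rows, key):
    """Return per-group counts, dropping any group too small to release safely."""
    counts = Counter(row[key] for row in rows)
    return {group: n for group, n in counts.items() if n >= MIN_CELL_SIZE}


# Synthetic rows: 12 patients in one group, 3 in another.
rows = [{"diagnosis": "A"}] * 12 + [{"diagnosis": "B"}] * 3
released = release_counts(rows, "diagnosis")
```

Group "B" never leaves the environment because three patients is too few to hide in. A smart contract’s job in this picture would be to record which output rule applied and who approved it.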

—

Modern trends in 2025: what’s changed in the last 2–3 years

If you looked at this topic back in 2021 and walked away unimpressed, that was fair. The landscape has matured a lot since then.

Trend 1: From crypto-blockchains to regulated, permissioned networks

Today’s healthcare deployments almost never rely on public, anonymous chains. Instead, you see:

– Consortium, permissioned blockchains: only verified participants (payers, providers, regulators, sometimes patient orgs) run nodes.

– No speculative tokens: incentives are contractual and fiat-based; the chain is just the coordination layer.

– Regulator visibility: In some regions, regulators or auditors operate observer nodes, gaining near real-time oversight.

This shift has made conversations with compliance and risk committees much easier.

—

Trend 2: AI model governance tied to data lineage

In 2025, AI regulation is catching up. Authorities increasingly ask:

– Which datasets trained this model?

– Under what consent and purpose restrictions?

– How do you handle “right to be forgotten” or consent withdrawal in model retraining?

Smart contracts now link data usage events to model versions. So when someone asks “who contributed to v3.4 of this sepsis prediction model?”, you don’t scramble for ad-hoc logs; you point to a verifiable trace anchored on-chain.
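A minimal sketch of that linkage, assuming usage events are indexed by model version. `LineageIndex` and the sample identifiers are hypothetical.

```python
from collections import defaultdict


class LineageIndex:
    """Maps each model version to the data usage events that fed it."""

    def __init__(self):
        self._events = defaultdict(list)

    def record(self, model_version, dataset_id, contributor, consent_policy):
        self._events[model_version].append(
            {"dataset": dataset_id, "contributor": contributor, "consent_policy": consent_policy}
        )

    def contributors(self, model_version):
        """Answer 'who contributed to this version?'"""
        return sorted({e["contributor"] for e in self._events.get(model_version, [])})

    def versions_using(self, dataset_id):
        """Answer 'which versions need review if this dataset's consent changes?'"""
        return sorted(
            version
            for version, events in self._events.items()
            if any(e["dataset"] == dataset_id for e in events)
        )


lineage = LineageIndex()
lineage.record("sepsis-v3.4", "icu-2023", "hospital-a", "consent-z")
lineage.record("sepsis-v3.4", "icu-2024", "hospital-b", "consent-z")
lineage.record("sepsis-v3.5", "icu-2024", "hospital-b", "consent-z")
```

The second query matters as much as the first: when consent covering `icu-2024` is withdrawn, `versions_using` tells you which model versions are affected.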

—

Trend 3: Interoperability-first, not blockchain-first

Earlier projects tried to build everything “on blockchain” and only later worried about integration with EHRs, FHIR servers, and HL7. That approach mostly failed.

The newer generation of platforms leads with:

– Strong FHIR / HL7 support

– Alignment with existing HIEs

– Clear APIs for integration into EHR workflows

The smart contract platform for healthcare data interoperability now usually sits *behind* the scenes: a compliance and coordination layer rather than the main interface clinicians see.

—

Practical tips for professionals

If you’re evaluating or designing a data-sharing initiative in 2025, a few hard-earned lessons can save months.

1. Start with one high-value, low-politics use case

Don’t begin with “all oncology data across all sites.” Too messy, too political. Instead:

1. Pick a narrow, high-impact domain (e.g., stroke imaging, ICU deterioration prediction, medication reconciliation).

2. Limit stakeholders to a 2–3 organization cluster.

3. Make sure success can be measured in under 12 months (improved model performance, fewer manual audits, or shorter contracting cycles).

Once you have a working pattern, extend horizontally.

---

2. Separate “what is legal” from “what we are comfortable with”

Legal teams often say, “In theory this is allowed under HIPAA, but we’re not comfortable with that risk profile.” Capture that nuance in policy layers:

– Legal baseline: what is strictly permitted by regulation and contracts.

– Org policy: what your risk committee actually authorizes.

– Smart contract rules: the codified subset of org policy that you want enforced automatically.

This layered model avoids deadlock and keeps you honest about trade-offs.
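One cheap sanity check on the three layers is that each should be a subset of the one above it. The sketch below treats rules as `(data class, purpose)` pairs, a simplification chosen purely for illustration.

```python
def check_layers(legal_baseline, org_policy, contract_rules):
    """Return a list of inconsistencies; an empty list means the layers nest correctly."""
    problems = []
    for rule in sorted(org_policy - legal_baseline):
        problems.append(f"org policy allows {rule!r} beyond the legal baseline")
    for rule in sorted(contract_rules - org_policy):
        problems.append(f"contract enforces {rule!r} that org policy never authorized")
    return problems


# Hypothetical rule sets, widest to narrowest.
legal_baseline = {
    ("de-identified", "research"),
    ("de-identified", "commercial-training"),
    ("identified", "treatment"),
}
org_policy = {("de-identified", "research"), ("identified", "treatment")}
contract_rules = {("de-identified", "research")}

issues = check_layers(legal_baseline, org_policy, contract_rules)
```

Here `issues` is empty. If someone codified `("de-identified", "commercial-training")` in the contract without the risk committee authorizing it, the check would flag it before deployment.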

—

3. Make your logs human-readable first, cryptographic second

Engineers love hashes, signatures, and Merkle trees. Auditors don’t.

A pragmatic pattern:

1. Design logs that a compliance officer can understand without a cryptography course:

– “AI Job #5678, run by Vendor X, accessed Dataset Y (de-identified oncology records) under Consent Policy Z on 2025-03-12 for ‘model retraining’.”

2. Then anchor a hashed representation of those logs via your smart contract.

Cryptographic guarantees are only useful if people can interpret what’s being guaranteed.
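In code, "readable first, cryptographic second" could look something like this. `AccessEvent` and its fields are assumptions modeled on the example log line above.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class AccessEvent:
    job_id: int
    actor: str
    dataset: str
    description: str
    consent_policy: str
    on: str          # ISO date
    purpose: str

    def sentence(self) -> str:
        """Step 1: the line a compliance officer actually reads."""
        return (
            f"AI Job #{self.job_id}, run by {self.actor}, accessed {self.dataset} "
            f"({self.description}) under {self.consent_policy} on {self.on} "
            f"for '{self.purpose}'."
        )

    def anchor(self) -> str:
        """Step 2: the digest of that exact sentence, to be anchored via the contract."""
        return hashlib.sha256(self.sentence().encode("utf-8")).hexdigest()


event = AccessEvent(
    5678, "Vendor X", "Dataset Y", "de-identified oncology records",
    "Consent Policy Z", "2025-03-12", "model retraining",
)
line = event.sentence()
digest = event.anchor()
```

Hashing the sentence itself, rather than some separate machine format, means the thing the auditor reads is exactly the thing the hash protects.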

—

4. Don’t oversell “trustless” systems

Healthcare doesn’t want “trustless”; it wants structured, accountable trust. When pitching or architecting:

– Emphasize shared, verifiable records, not the removal of trust.

– Highlight how disputes get easier to resolve because evidence is jointly managed.

– Clarify boundaries: some things still depend on off-chain controls and human processes.

Overselling blockchain magic is the fastest way to lose clinicians’ and regulators’ patience.

—

5. Build for partial adoption

You won’t get every partner onto your new infrastructure on day one. Design systems where:

– Some participants use the full smart contract stack.

– Others interact via more traditional APIs and legal agreements, but their interactions are still logged and partially governed.

This lets you show value early while slowly migrating more parties into deeper integration.

—

How to decide if smart contracts are worth it for your project

To keep things grounded, here’s a quick decision lens. If most of these are true, smart contracts + AI are probably worth serious consideration:

1. Multiple independent organizations need to share or co-use data long term.

2. You expect evolving policies, consents, and regulations over several years.

3. AI plays a central role, and you’ll need robust model lineage and accountability.

4. You’ve been burned by opaque or manual logging and auditing in the past.

5. No single party is trusted or neutral enough to run a purely centralized solution.

If instead you’re talking about a single hospital system, with purely internal use of AI models and straightforward data flows, a simpler centralized architecture might be faster and cheaper — at least for now.

—

Bottom line

By 2025, smart contracts for healthcare data sharing have moved past the proof-of-concept phase in a growing number of AI-heavy projects. They’re not replacing data governance, legal review, or security programs — they’re giving those functions better tools to express and enforce decisions in a multi-party world.

The most successful implementations:

– Treat blockchain as an infrastructure component, not the product.

– Focus on policy automation and traceability, not speculative tokens.

– Integrate deeply with existing interoperability standards and AI workflows.

If you frame them as a way to tame the chaos of cross-organizational AI data use — rather than as a shiny technology looking for a problem — you’ll have a much easier time getting clinical, legal, and operational stakeholders on board.