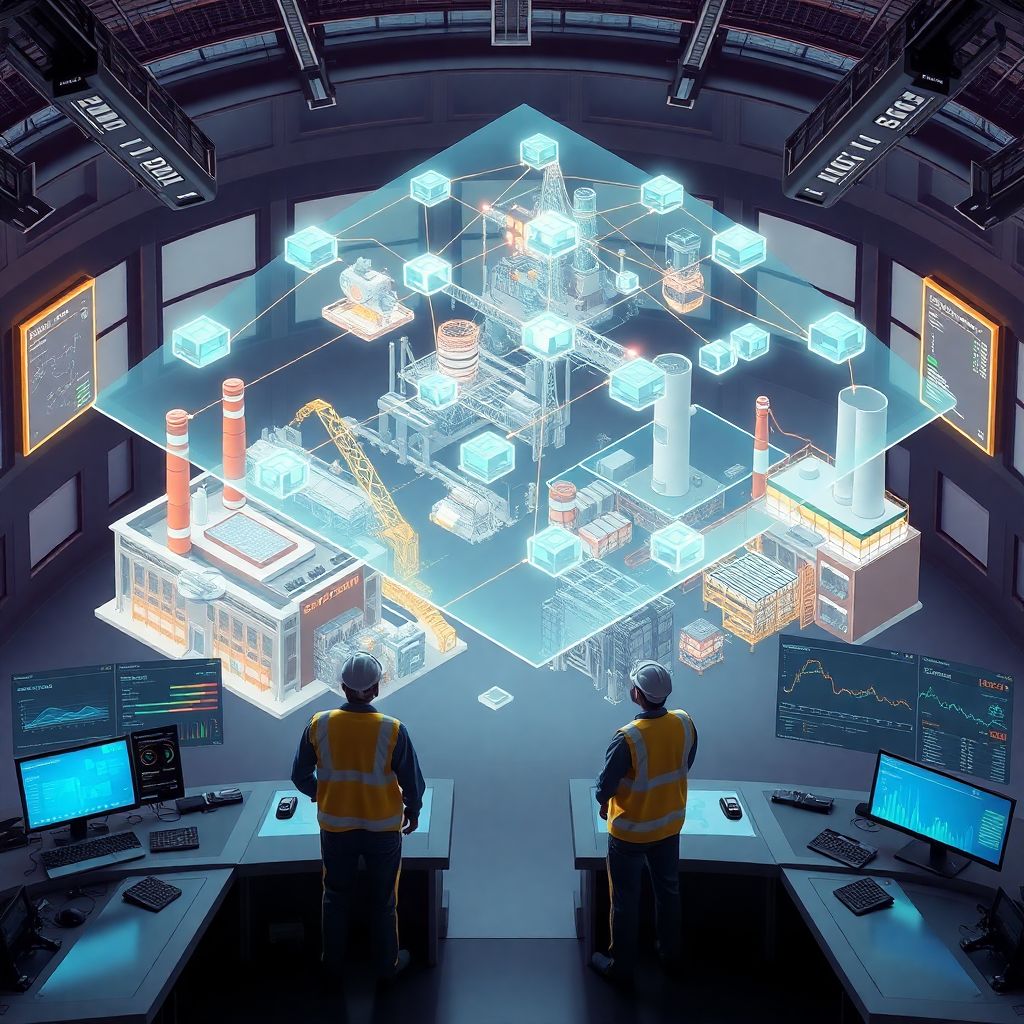

Why AI‑powered digital twins matter right now

Imagine your factory, power plant or logistics hub living a second life inside a computer, where you can speed up time, break things, roll back, and start again without risking a single real‑world bolt. That’s essentially what AI‑powered digital twins for industrial ecosystems offer. The real payoff comes when you combine this virtual mirror with data‑hungry models that learn patterns you’d never spot manually. Suddenly, questions like “When will this pump fail?” or “What happens if I switch suppliers?” stop being guessing games and become testable scenarios. And you can get there without rebuilding your entire tech stack from scratch, provided you start pragmatically and borrow a few unconventional tricks.

Necessary tools: go beyond the usual suspects

Most guides say you need an industrial digital twin platform powered by AI and leave it at that, but in practice you’ll want a more eclectic toolkit. Of course, you’ll need solid AI digital twin software for industrial automation that can ingest sensor data, PLC tags and historian streams. Add a cloud or hybrid environment where you can scale training jobs without begging IT for more servers every quarter. You’ll also need a decent time‑series database, a messaging backbone such as Kafka or an MQTT broker, and a graph database if you want to model relationships between machines, lines and suppliers. Don’t forget the unglamorous tools: a feature store for sharing signals across models, a simple experiment tracker so engineers can reproduce results, and a lightweight UI framework so operators see insights in the same console they already trust.
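To make the feature‑store idea a little more concrete, here is a minimal in‑memory sketch of point‑in‑time ("as‑of") signal lookup, assuming readings arrive from the event bus as `(asset_id, signal, timestamp, value)` records. `SignalStore` and its methods are hypothetical names, not the API of any real product; in production this role belongs to your time‑series database or feature store. The property worth copying is that a model asking for a value at time *t* only sees what was known at *t*, which keeps future data from leaking into training.

```python
from bisect import bisect_right, insort
from collections import defaultdict
from datetime import datetime, timezone


class SignalStore:
    """Hypothetical in-memory store keyed by (asset_id, signal name)."""

    def __init__(self):
        # Each key maps to a list of (timestamp, value) tuples kept sorted by time.
        self._series = defaultdict(list)

    def write(self, asset_id: str, signal: str, ts: datetime, value: float) -> None:
        # insort keeps the series ordered even when messages arrive out of order.
        insort(self._series[(asset_id, signal)], (ts, value))

    def as_of(self, asset_id: str, signal: str, ts: datetime):
        """Return the latest value recorded at or before ts, or None if nothing was known yet."""
        series = self._series.get((asset_id, signal), [])
        # Index just past all entries whose timestamp is <= ts.
        idx = bisect_right([t for t, _ in series], ts)
        return series[idx - 1][1] if idx else None


store = SignalStore()
t0 = datetime(2024, 1, 1, 8, 0, tzinfo=timezone.utc)
store.write("compressor-7", "discharge_temp_c", t0, 81.5)
store.write("compressor-7", "discharge_temp_c", t0.replace(hour=9), 84.0)
print(store.as_of("compressor-7", "discharge_temp_c", t0.replace(minute=30)))  # 81.5
```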

Non‑standard tools that give you an edge

Here’s where it gets interesting: bring in a game engine or 3D visualization framework, not just CAD viewers, if you care about human understanding and training. Tools like Unreal or Unity (or industrial‑grade equivalents) can host your twin’s visual layer, while the AI and physics models live elsewhere. Another unconventional move: use a “chaos lab” environment, basically a controlled sandbox where you intentionally feed noisy or partial data into your AI models to see how gracefully they degrade. That’s how you avoid brittle AI-based digital twin solutions for manufacturing plants that look great in demos but panic as soon as a sensor drops out. Finally, a low‑code automation tool can help operations teams wire insights from the twin back into workflows (work orders, purchase requests, shift planning) without waiting months for custom development.
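A chaos‑lab run can start very small. The sketch below assumes a model is any Python function that maps a window of readings (with `None` for a dropped sensor) to a one‑step forecast. It corrupts a clean series with seeded dropouts and Gaussian noise, then compares the error before and after. `inject_faults`, `last_value_model` and `degradation_report` are hypothetical helpers, and the naive last‑value forecaster is only a placeholder for your real model.

```python
import random
from statistics import mean


def inject_faults(readings, dropout_rate=0.0, noise_std=0.0, seed=0):
    """Return a corrupted copy: some readings become None (sensor dropout), the rest get Gaussian noise."""
    rng = random.Random(seed)  # seeded so every chaos run is reproducible
    corrupted = []
    for value in readings:
        if rng.random() < dropout_rate:
            corrupted.append(None)
        else:
            corrupted.append(value + rng.gauss(0.0, noise_std))
    return corrupted


def last_value_model(window):
    """Placeholder model: predict the next value as the last non-missing reading (None if all missing)."""
    observed = [v for v in window if v is not None]
    return observed[-1] if observed else None


def degradation_report(model, series, window=5, **fault_kwargs):
    """Mean absolute one-step error on clean vs. corrupted input, plus the share of steps with no prediction."""
    corrupted = inject_faults(series, **fault_kwargs)
    clean_err, faulty_err, abstained = [], [], 0
    for t in range(window, len(series)):
        target = series[t]
        clean_pred = model(series[t - window:t])
        faulty_pred = model(corrupted[t - window:t])
        clean_err.append(abs(clean_pred - target))
        if faulty_pred is None:
            abstained += 1  # the model declined to predict; count it instead of crashing
        else:
            faulty_err.append(abs(faulty_pred - target))
    steps = len(series) - window
    return {
        "clean_mae": mean(clean_err),
        "faulty_mae": mean(faulty_err) if faulty_err else float("nan"),
        "abstain_rate": abstained / steps,
    }
```

A model that degrades gracefully shows a modest rise in `faulty_mae` and a low `abstain_rate` as `dropout_rate` grows; a brittle one crashes or produces wild errors.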

Step‑by‑step process: build the twin like a living product

Skip the temptation to design a giant “master twin” from day one. Instead, treat your first enterprise AI digital twin for an industrial IoT ecosystem like a lean product: narrow scope, fast feedback, constant iteration. Start by picking one annoying, expensive problem: chronic unplanned downtime of a compressor, quality drift on one production line, or energy waste in a boiler system. Map only the assets and signals relevant to this problem: tags from PLCs, maintenance logs, operator comments, maybe external context like weather or supplier changeovers. Build a minimal virtual model that understands states (running, idle, startup, cleaning) and the transitions between them. Then gradually add physics, heuristics, and finally AI layers. At each step, ask: “Did this actually change a decision for someone on the shop floor or in planning?”
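The "states and transitions" step can begin as a plain state machine. This sketch assumes the four states named above and an illustrative transition table; `AssetStateModel` and `ALLOWED` are hypothetical, and the real allowed transitions would come from your PLC logic and operating procedures.

```python
from enum import Enum


class State(Enum):
    IDLE = "idle"
    STARTUP = "startup"
    RUNNING = "running"
    CLEANING = "cleaning"


# Illustrative transition table (an assumption, not a real process spec):
# the set of states each state may move to next.
ALLOWED = {
    State.IDLE: {State.STARTUP, State.CLEANING},
    State.STARTUP: {State.RUNNING, State.IDLE},
    State.RUNNING: {State.IDLE},
    State.CLEANING: {State.IDLE},
}


class AssetStateModel:
    def __init__(self, asset_id: str, initial: State = State.IDLE):
        self.asset_id = asset_id
        self.state = initial
        self.history = [initial]  # sequence of accepted states, useful later as model context

    def transition(self, new_state: State) -> bool:
        """Apply a transition if allowed; return False and keep the current state if not."""
        if new_state not in ALLOWED[self.state]:
            return False
        self.state = new_state
        self.history.append(new_state)
        return True
```

Rejected transitions are useful signals in their own right: an asset that appears to jump from running straight into cleaning usually points to a mis‑mapped tag or a missed event rather than a real process change.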

From raw data to an intelligent twin

Start ugly: extract data from existing historians, SCADA, MES, even spreadsheets. Normalize timestamps, align units, and tag data with stable asset IDs rather than only human‑readable names like “Press‑01”, so you can reuse components later. Next, create a baseline “dumb twin”: a rules‑driven model mirroring basic behaviour such as setpoints, limits and start/stop logic. Only then layer on ML models: anomaly detection on vibration or temperature, short‑horizon throughput forecasting, and eventually predictive maintenance with AI-powered industrial digital twins that estimate remaining useful life. Use feedback loops ruthlessly: every operator confirmation, maintenance outcome, or override is training data. Log it, label it, and feed it back to continuously retrain the AI. This is how a bland dashboard grows into a twin that feels like a colleague who actually knows your plant.
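The layering order above, rules first and statistics second, can be sketched for a single temperature signal. `LayeredTemperatureTwin` is a hypothetical class; the rolling z‑score stands in for whatever ML anomaly model you eventually plug in, and the limits, window and threshold are placeholder parameters.

```python
from collections import deque
from statistics import mean, stdev


class LayeredTemperatureTwin:
    """Hypothetical two-layer twin for one temperature signal."""

    def __init__(self, low: float, high: float, window: int = 30, z_threshold: float = 3.0):
        self.low, self.high = low, high
        self.z_threshold = z_threshold
        self.baseline = deque(maxlen=window)  # only the most recent in-limit readings are kept

    def observe(self, value: float) -> str:
        # Layer 1: deterministic limits, the "dumb twin".
        if not self.low <= value <= self.high:
            return "limit_violation"  # kept out of the baseline so one bad reading does not skew it
        # Layer 2: rolling z-score against recent in-limit readings (stand-in for an ML model).
        verdict = "normal"
        if len(self.baseline) >= 2:
            sigma = stdev(self.baseline)
            if sigma > 0 and abs(value - mean(self.baseline)) / sigma > self.z_threshold:
                verdict = "anomaly"
        self.baseline.append(value)
        return verdict
```

One limitation to be aware of: on a perfectly flat baseline the standard deviation is zero and the statistical layer stays silent, which is one reason the rule layer remains in place underneath.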

Closing the loop with real operations

A twin that only reports insights is just an expensive analytics project. To make it operational, wire it into decision points. For example, let the twin automatically propose changes to maintenance schedules, but require a human to approve them for the first three months. Measure the difference in downtime and spare part use between “twin‑guided” and “business‑as‑usual” assets. In logistics, let the twin suggest different loading patterns or routes based on live conditions. Over time, push decisions closer to autonomy: first recommendations, then auto‑execution with human veto, and only then fully automated loops for low‑risk actions like parameter fine‑tuning or reorder triggers. Always keep an easy “big red switch” — operators must be able to revert to standard rules in seconds if the twin misbehaves or context changes suddenly.
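The graduated path from recommendations to autonomy, plus the "big red switch", fits in one small decision function. The sketch assumes three autonomy levels and a per‑action `low_risk` flag; `Autonomy`, `Proposal` and `decide` are hypothetical names, and how approvals and vetoes are collected (UI, work‑order system) is out of scope.

```python
from dataclasses import dataclass
from enum import IntEnum


class Autonomy(IntEnum):
    RECOMMEND = 1       # twin proposes, a human must approve
    AUTO_WITH_VETO = 2  # twin executes unless a human vetoes
    FULL_AUTO = 3       # twin executes directly (honoured for low-risk actions only)


@dataclass
class Proposal:
    action: str
    low_risk: bool


def decide(proposal: Proposal, level: Autonomy, big_red_switch: bool,
           human_approved: bool = False, human_vetoed: bool = False) -> str:
    """Return 'execute', 'hold' or 'fallback_rules' for one twin proposal."""
    if big_red_switch:
        return "fallback_rules"  # operators reverted to standard rules; ignore the twin entirely
    # Full autonomy applies only to low-risk actions; anything else drops to veto mode.
    effective = level
    if level is Autonomy.FULL_AUTO and not proposal.low_risk:
        effective = Autonomy.AUTO_WITH_VETO
    if effective is Autonomy.RECOMMEND:
        return "execute" if human_approved else "hold"
    if effective is Autonomy.AUTO_WITH_VETO:
        return "hold" if human_vetoed else "execute"
    return "execute"
```

The design choice worth noting is that full autonomy downgrades to veto mode for anything not flagged low‑risk, so a misconfigured level cannot push a high‑impact change without a human in the loop.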

Unconventional design patterns for industrial twins

Most deployments focus on single machines or single plants, but AI-powered digital twins for industrial ecosystems get truly interesting when they span suppliers, warehouses and customers. One non‑standard pattern is the “supply‑aware twin”: the virtual plant knows delivery reliability of each supplier, typical lead times, and quality variability, and simulates production plans under disruptions. Another is the “energy‑trading twin” that couples your factory with real‑time grid prices and emissions intensity, shifting non‑critical loads to greener or cheaper windows. You can also think in terms of “learning twins”: deploy a twin at one plant, harvest patterns, then transfer those models to plants in other regions like a knowledge package, adjusting only the local constraints and sensor mappings, instead of rebuilding everything every time.
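For the energy‑trading twin, the core scheduling question is which contiguous window is cheapest for a deferrable load. This is a brute‑force sketch, assuming hourly prices (or, equally, hourly emissions intensity) and a constant load; `cheapest_window` and its parameters are hypothetical, and a real twin would also respect ramp rates, shift patterns and demand charges.

```python
def cheapest_window(hourly_values, duration_h, load_mw=1.0, earliest=0, latest_end=None):
    """Return (start_hour, cost) of the cheapest contiguous run of duration_h hours.

    hourly_values: price (or emissions intensity) per hour, index = hours from now.
    The job must start at or after `earliest` and finish by `latest_end` (exclusive index).
    """
    n = len(hourly_values)
    latest_end = n if latest_end is None else min(latest_end, n)
    best_start, best_cost = None, float("inf")
    for start in range(earliest, latest_end - duration_h + 1):
        # Constant load assumed, so cost is load times the sum of hourly values in the window.
        cost = load_mw * sum(hourly_values[start:start + duration_h])
        if cost < best_cost:
            best_start, best_cost = start, cost
    if best_start is None:
        raise ValueError("no feasible window before the deadline")
    return best_start, best_cost
```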

Composable micro‑twins instead of one giant model

A practical way to keep complexity under control is to build micro‑twins — self‑contained models for a specific asset or process step — and connect them like Lego bricks. One micro‑twin might represent a furnace’s thermal behaviour; another, the material handling system; a third, the workforce schedule. When reality changes, you swap or retrain a micro‑twin without tearing down the entire structure. This is especially useful when using AI digital twin software for industrial automation that supports modular APIs. It also helps with governance: different teams can own and maintain their part of the model, while a small core group defines the contracts and data interfaces. Over time, you end up with a library of reusable process building blocks rather than fragile one‑off projects that become impossible to maintain when a single expert leaves.
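One way to express the "Lego brick" contract in code is a structural interface that every micro‑twin implements. This sketch assumes each micro‑twin exposes a `step` method mapping a dict of named signals to new signals. `MicroTwin`, `FurnaceTwin`, `ConveyorTwin` and `run_chain` are hypothetical, and the first‑order furnace lag and the 800 °C conveyor rule are illustrative placeholders, not real process models.

```python
from typing import Protocol


class MicroTwin(Protocol):
    """Contract every micro-twin must satisfy (a hypothetical interface)."""
    name: str

    def step(self, inputs: dict[str, float]) -> dict[str, float]:
        ...


class FurnaceTwin:
    name = "furnace"

    def __init__(self, heat_rate: float = 0.1):
        self.temp_c = 20.0
        self.heat_rate = heat_rate  # fraction of the gap to setpoint closed per step (first-order lag)

    def step(self, inputs: dict[str, float]) -> dict[str, float]:
        self.temp_c += self.heat_rate * (inputs["setpoint_c"] - self.temp_c)
        return {"furnace_temp_c": self.temp_c}


class ConveyorTwin:
    name = "conveyor"

    def step(self, inputs: dict[str, float]) -> dict[str, float]:
        # Illustrative rule: parts only move once the furnace is hot enough.
        moving = inputs["furnace_temp_c"] >= 800.0
        return {"parts_per_min": 12.0 if moving else 0.0}


def run_chain(twins: list[MicroTwin], inputs: dict[str, float]) -> dict[str, float]:
    """Feed each micro-twin the accumulated signals; its outputs become inputs for the next one."""
    signals = dict(inputs)
    for twin in twins:
        signals.update(twin.step(signals))
    return signals
```

Because `run_chain` relies only on the `step` contract, a retrained furnace model can replace `FurnaceTwin` without touching the conveyor twin, which is the governance benefit described above.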

Let operators “hack” the twin

Instead of locking the digital twin behind IT or data science, give operators and maintenance engineers ways to tweak assumptions themselves — simple rule editors, what‑if sliders, or drag‑and‑drop scenario builders. The unusual idea here is to treat the twin like a shared notebook of tribal knowledge. When an operator notices that a motor usually fails after a certain sound pattern or cleaning sequence, they should be able to encode that as a hypothesis, which the AI then tries to validate against historical data. You don’t just get better predictions; you also create buy‑in because the twin becomes partly “theirs” instead of a black box from headquarters. This collaborative approach is what often turns AI-based digital twin solutions for manufacturing plants from passive monitors into active partners on the shop floor.
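Encoding an operator's hunch as a testable rule can be as simple as a predicate over logged events plus a comparison against the base failure rate. This sketch assumes a non‑empty history of event dicts with a boolean `failed_within_7d` label; `Hypothesis` and `validate` are hypothetical names. It is a plain lift calculation rather than the AI validation itself, but it makes a reasonable first filter before a hypothesis is promoted to a model feature.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Hypothesis:
    """An operator's rule of thumb, encoded as a predicate over one logged event."""
    author: str
    description: str
    predicate: Callable[[dict], bool]


def validate(hyp: Hypothesis, history: list[dict], label: str = "failed_within_7d") -> dict:
    """Compare the failure rate when the rule fires with the overall base rate."""
    fired = [e for e in history if hyp.predicate(e)]
    base_rate = sum(e[label] for e in history) / len(history)  # assumes history is non-empty
    hit_rate = sum(e[label] for e in fired) / len(fired) if fired else 0.0
    return {
        "support": len(fired),     # how often the pattern occurred
        "hit_rate": hit_rate,      # P(failure | pattern)
        "base_rate": base_rate,    # P(failure) overall
        "lift": hit_rate / base_rate if base_rate else float("nan"),
    }
```

A lift well above 1 with decent support is worth a closer look; a high lift computed from only a handful of events is a prompt to collect more data rather than proof.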

Troubleshooting: when your digital twin misbehaves

Things will go wrong. The twin will sometimes flag phantom issues, miss real failures, or suggest plans that look smart in simulation but fall apart in Monday morning reality. The good news is that most failures are traceable if you think in layers: data, model, and interaction. If alarms suddenly explode in volume, suspect a data schema change or sensor drift, not a magical overnight factory crisis. If predictions go from “spooky accurate” to “random noise” after a software upgrade, check which features changed or dropped out. If operators quietly stop using the recommendations, you likely have an interaction problem: wrong timing, unclear explanation, or a UI that clashes with their daily rhythm. Treat each of these as a bug in a product, not as a one‑off incident to patch and forget.
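Checking for a schema change is cheap enough to run on every batch before it reaches the model. This sketch assumes records arrive as dicts and that the training‑time feature contract is stored as a mapping from feature name to Python type name; `schema_diff` and the 5 % null‑rate threshold are hypothetical choices.

```python
def schema_diff(expected: dict[str, str], batch: list[dict], max_null_rate: float = 0.05) -> dict:
    """Compare an incoming batch of records against the feature contract the model was trained on.

    Returns missing features, unexpected new ones, type mismatches and features with too many nulls.
    """
    seen = set()
    for record in batch:
        seen.update(record)
    report = {
        "missing": sorted(set(expected) - seen),
        "unexpected": sorted(seen - set(expected)),
        "type_mismatch": [],
        "high_null_rate": [],
    }
    for name, type_name in expected.items():
        if name not in seen:
            continue  # already reported as missing
        values = [record.get(name) for record in batch]
        if sum(v is None for v in values) / len(batch) > max_null_rate:
            report["high_null_rate"].append(name)
        if any(v is not None and type(v).__name__ != type_name for v in values):
            report["type_mismatch"].append(name)
    return report
```

Renamed tags typically show up as a matching pair of `missing` and `unexpected` entries, which is exactly the kind of change that can make alarm volume explode overnight.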

Practical debugging tactics

When a specific prediction is wrong, run a “flight recorder” replay: feed the exact historical data snapshot into the model and log intermediate outputs. Check feature importance: if the model heavily relies on a sensor known to be flaky, you’ve found a risk. Maintain a small set of “golden scenarios” — carefully validated weeks of data with known outcomes — and run them after any model or pipeline change. For data quality, deploy watchdog models that look only for impossible patterns: negative flow rates, temperatures above material limits, assets running when main power is off. And always, always log counterfactuals: what the twin recommended vs. what humans actually did, and what happened next. These logs become your most valuable asset for safely evolving enterprise AI digital twins for industrial IoT ecosystems over time.
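Two of the tactics above, watchdog rules for impossible patterns and a golden‑scenario regression check, are sketched below. The rule names, record fields (`flow_m3h`, `temp_c`, `material_limit_c`, `running`, `main_power`) and the tolerance are hypothetical; a real watchdog would read limits from your asset master data, and a golden scenario would be a full validated week rather than a short list of numbers.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class WatchdogRule:
    name: str
    check: Callable[[dict], bool]  # returns True when the record is physically impossible


# Illustrative rules mirroring the examples in the text; field names are placeholders.
RULES = [
    WatchdogRule("negative_flow", lambda r: r.get("flow_m3h", 0.0) < 0.0),
    WatchdogRule("temp_above_material_limit",
                 lambda r: r.get("temp_c", 0.0) > r.get("material_limit_c", float("inf"))),
    WatchdogRule("running_without_power",
                 lambda r: r.get("running", False) and not r.get("main_power", True)),
]


def watchdog(records: list[dict], rules: list[WatchdogRule] = RULES) -> list[tuple[int, str]]:
    """Return (record index, rule name) for every impossible reading found."""
    return [(i, rule.name) for i, rec in enumerate(records) for rule in rules if rule.check(rec)]


def golden_regression(model: Callable[[list[float]], float],
                      scenarios: dict[str, tuple[list[float], float]], tol: float) -> list[str]:
    """Run validated golden scenarios through the model; return names whose output drifted beyond tol."""
    return [name for name, (inputs, expected) in scenarios.items()
            if abs(model(inputs) - expected) > tol]
```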

Knowing when to dial AI down

Sometimes the smartest move is to make the twin a bit dumber on purpose. If a process is governed by strict safety or regulatory rules, you may cap AI influence to suggestive alerts only, leaving final decisions to deterministic logic. In highly volatile environments, like plants with frequent product changeovers, simpler models with shorter prediction horizons may outperform heavy, long‑range AI forecasting. It’s fine to let traditional controls handle the boring, predictable parts while the AI focuses on edges where human intuition struggles: subtle degradation, cross‑line interactions, or non‑obvious energy trade‑offs. The mark of a mature implementation isn’t maximal AI everywhere, but a balanced system where automation, human judgment and the digital twin each play to their strengths without stepping on each other’s toes.
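Capping AI influence usually means deterministic logic gets the final word. This sketch, assuming the AI proposes a single numeric setpoint, shows both modes described above: advisory only, or applied after a per‑move rate limit and a hard safety envelope. `apply_with_guardrails` and its parameters are hypothetical.

```python
def apply_with_guardrails(ai_setpoint: float, current: float, lo: float, hi: float,
                          max_step: float, advisory_only: bool) -> tuple[float, str]:
    """Let deterministic logic have the final word over an AI-suggested setpoint.

    Returns (setpoint actually applied, note for the operator log).
    """
    if advisory_only:
        # Regulated or safety-critical loop: the AI value is shown as an alert, never applied.
        return current, f"advisory: AI suggests {ai_setpoint:.1f}"
    # Limit how far one AI move can go, then clamp to the hard safety envelope.
    stepped = max(current - max_step, min(current + max_step, ai_setpoint))
    applied = max(lo, min(hi, stepped))
    note = "applied" if applied == ai_setpoint else f"clipped from {ai_setpoint:.1f}"
    return applied, note
```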