Why autonomous anomaly detection on-chain suddenly matters

If you work anywhere near crypto compliance, you’ve probably felt it: human-driven monitoring is hitting a wall. Volumes are up, typologies are more complex, and on-chain activity is fragmenting across L1s, L2s, bridges, and mixers. Against that backdrop, autonomous anomaly detection in financial crime isn’t a “nice to have” anymore — it’s the only way to keep up.

Over the last three years, on-chain crime has changed shape rather than simply growing or shrinking. According to Chainalysis’ Crypto Crime reports, the share of illicit crypto activity as a percentage of all on-chain volume stayed under 1% from 2022 to 2024, hovering in the low tenths of a percent. Yet in absolute terms, we’re still talking about tens of billions of dollars worth of transfers annually linked to hacks, scams, ransomware, sanctions evasion and more. In parallel, global enforcement ramped up: U.S. agencies announced multi‑billion‑dollar seizures tied to mixers and darknet markets between 2022 and 2024, and the EU’s AML package broadened expectations around continuous monitoring of virtual asset activity. Put together, this means the bar for detection is rising while the signal gets noisier.

So the key question shifts from “Can we screen addresses?” to “Can we automatically surface abnormal behavior on-chain — in real time — before it becomes an enforcement or reputational nightmare?”

What “autonomous anomaly detection” actually means on-chain

Let’s unpack the buzzword a bit. In the on-chain world, anomaly detection is about flagging behaviors that deviate from learned norms of transaction flows, wallet behaviors, and protocol usage — without needing a human to predefine every specific bad pattern.

Instead of manually crafting hundreds of rules like “flag any withdrawal above X from a mixer,” you build systems that learn typical patterns for:

– Individual wallets (frequency, counterparties, preferred assets)

– Clusters of wallets (entities, services, exchanges, bridges)

– Protocols (DEXs, mixers, lending pools, cross‑chain bridges)

An “autonomous” system doesn’t mean “no humans,” it means the models can adapt, re‑learn baselines, and escalate suspicious anomalies with minimal manual rule-tweaking. Humans still review, label, and refine — but they’re spending time on the edge cases, not drowning in obvious alerts.

Types of anomalies you care about

Anomalies on-chain usually fall into a few buckets:

– Point anomalies — one transaction looks off: a new wallet suddenly sends $5M through a high‑risk mixer.

– Contextual anomalies — behavior is odd given context: a dormant wallet wakes up, moves funds through an obscure DEX at 3 a.m., then bridges to a sanctioned region.

– Collective anomalies — a set of transactions together looks strange: dozens of low‑value transfers that, in aggregate, form a smurfing pattern to an exchange.

Your models should aim to surface all three, across multiple chains and entities — not just look for “large numbers.”

Required toolkit for autonomous on-chain anomaly detection

You don’t need a moonshot AI lab to get started, but you do need a reasonably solid stack. Think of it as three layers: data, infrastructure, and modeling.

Data sources and enrichment

At the base layer, you need reliable, near‑real‑time blockchain data:

– Full node or node‑as‑a‑service access for each target chain (Ethereum, major L2s, BTC, Solana, etc.)

– Historical transaction and log archives (for model training windows, e.g., 12–24 months)

– Entity-resolution / clustering feeds: which addresses belong to exchanges, bridges, DeFi protocols, mixers, custodians

– Sanctions, watchlists and scam address feeds from regulators, analytics vendors and internal casework

Modern blockchain analytics platform for fraud detection products can give you a head start here by bundling raw chain data with labels (e.g., “CEX deposit address,” “mixer,” “stolen funds cluster”).

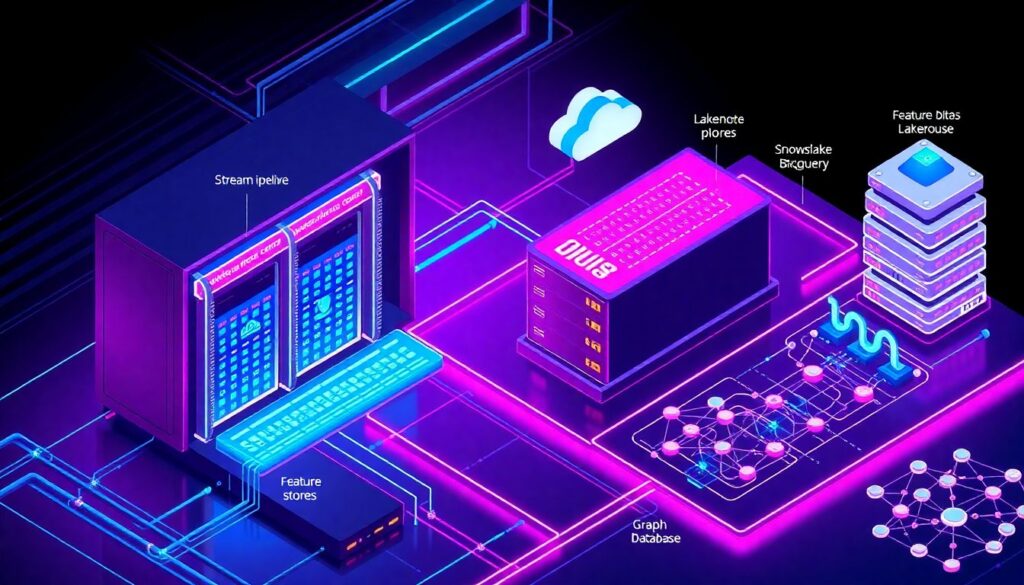

Analytics infrastructure

You’ll want an environment that can ingest, transform, and query that data efficiently:

– A streaming pipeline (Kafka, Kinesis, or equivalent) for new blocks and events

– Warehousing or lakehouse storage (e.g., Snowflake, BigQuery, S3+Spark) for historical analysis

– Feature stores and graph databases (Neo4j, TigerGraph, or managed alternatives) to model relationships between wallets and entities

– A basic CI/CD setup for your ML workflows (e.g., Airflow, Dagster, or a cloud-native orchestrator)

This infrastructure is what lets your anomaly detection models run continuously rather than as one‑off experiments.

Detection engines and AI models

At the top layer sit the actual detection engines:

– Unsupervised anomaly detection models (Isolation Forest, LOF, autoencoders, clustering) for discovering new patterns of abuse

– Graph-based models (random walks, graph neural networks, community detection) to spot suspicious subgraphs and flow patterns

– Semi-supervised models that use limited labeled fraud data plus large unlabeled datasets to refine boundaries

– Rule and heuristic layers to enforce hard regulatory constraints (e.g., direct contact with sanctioned addresses)

Many vendors now position their products as crypto anti money laundering tools with anomaly detection, but under the hood they still rely on some mix of the above techniques. Whether you build or buy, you’ll need the same conceptual pieces.

Step‑by‑step: building an autonomous on-chain anomaly detection pipeline

Let’s walk through a practical, implementation‑oriented workflow. The specifics will vary by stack, but the steps are broadly similar.

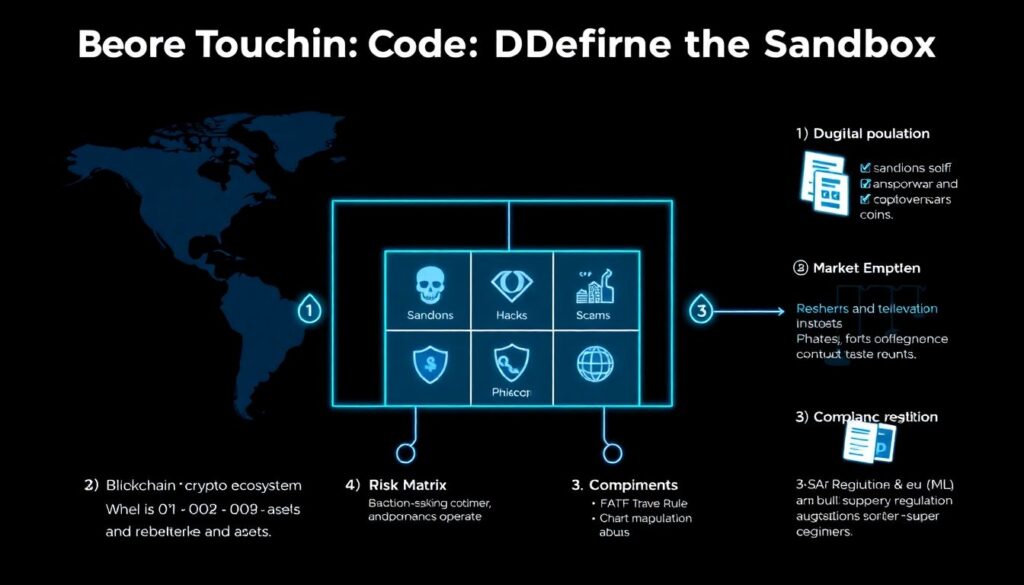

1. Clarify scope and regulatory expectations

Before touching code, define the sandbox:

1. Which chains and assets? Focus on where your customers and counterparties actually operate.

2. Which risks? Sanctions evasion, ransomware cash‑outs, hacks, scams, market abuse — or all of the above?

3. Which regulatory regimes? FATF Travel Rule, EU AML package, U.S. FinCEN guidance, local VASP regulations.

4. Latency requirements? Are you monitoring deposits in real time, or batch‑scanning historical flows daily?

This scoping drives what “good enough” looks like for your on-chain transaction monitoring solution for financial crime, both from a detection standpoint and an auditability perspective.

2. Stand up the data pipeline

Next, you wire the plumbing:

– Stream new blocks and transactions into a message bus.

– Normalize events (transfers, swaps, mints, burns, bridge events) into a consistent schema across chains.

– Periodically enrich addresses with entity labels, risk scores, and sanctions flags from your vendors and internal lists.

– Backfill at least 12–24 months of data to give your models enough context to learn.

Keep this part boring and robust. Most “autonomous” systems fail for extremely non‑glamorous reasons like missing events, partial backfills, or mis‑parsed logs.

3. Engineer features that reflect behavior, not just size

Anomaly detection is only as good as the features you feed it. Useful on-chain behavioral features often include:

– Rolling transaction counts and volumes (by asset, by counterparty type)

– Time‑of‑day and day‑of‑week activity patterns

– Diversity of interactions (how many unique contracts, how many chains)

– Graph metrics: in/out degree, clustering coefficient, PageRank, distance to known high‑risk clusters

– Path features: number of hops from sanctioned or hacked funds, typical route patterns (DEX → mixer → exchange, etc.)

Short version: you want the model to “see” a customer’s on-chain persona, not just a stream of raw numbers.

4. Train and calibrate anomaly detectors

Start with relatively simple, interpretable methods before jumping to deep learning:

– Fit unsupervised models on historical features to learn a baseline of “normal.”

– Use labeled cases (SARs, law enforcement referrals, confirmed fraud) as anchor points to see where they fall in the anomaly score distribution.

– Tune thresholds for different risk segments (retail vs institutional, VIP vs low‑activity accounts) instead of a single global cut‑off.

This is where you’ll start to notice patterns like: “ransomware cash‑outs tend to live in the top 0.5% of anomaly scores when we look at bursty behavior plus proximity to known ransomware clusters.”

5. Integrate with case management and human review

An autonomous system still needs a human‑review loop:

– Pipe high‑severity anomalies into your case management system, alongside traditional rule‑based alerts.

– Attach full context: transaction paths, entity labels, screenshots of graph views, prior alerts on the same wallets.

– Capture analyst feedback (“true positive,” “false positive,” “unclear”) and feed it back as labels for retraining and recalibration.

This closed‑loop is what slowly transforms your models from generic “oddity detectors” into institution‑specific financial crime sensors.

6. Operationalize and monitor in production

Finally, treat this like any production service:

– Track model health metrics: drift in feature distributions, alert volume, and severity mix.

– Watch business KPIs: false‑positive rates, time‑to‑decision, number of escalations to regulators.

– Version everything: data schemas, models, thresholds, and decision logic, so you can reconstruct why something was (or wasn’t) flagged six months later.

Over time, as the system stabilizes, you can expand scope to additional chains, new typologies, and emerging DeFi primitives.

How AI changes the game (and where it doesn’t)

Modern systems increasingly layer machine learning and generative components on top of traditional rules. For example, an ai powered on chain risk monitoring for exchanges platform might:

– Use graph neural networks to assign risk scores to addresses based on their neighborhood.

– Run unsupervised anomaly detection over higher‑level behavioral embeddings, not raw counts.

– Generate natural‑language summaries of suspicious patterns to help analysts understand what the model “saw.”

However, AI does not remove the need for explainability, governance, and basic operational sanity. Regulators still expect you to articulate why a customer was offboarded, why a SAR was filed, and how your system avoids discriminatory or arbitrary decisions. You should design models and features with interpretability in mind from day one.

Choosing tools: build vs buy vs hybrid

In practice, most organizations end up with a hybrid architecture. You might rely on commercial blockchain aml software for crypto compliance to cover:

– Basic sanctions screening and address reputation scoring

– Entity clustering and known illicit cluster labels

– Dashboards and case management

…while building your own anomaly layer on top: custom models that reflect your risk appetite, customer base, and jurisdiction mix. This gives you vendor‑grade coverage of known bads plus in‑house differentiation for the unknown unknowns.

If you’re choosing vendors, probe for:

– Access to raw features and APIs, not just canned risk scores

– Support for exporting labeled events for your own model training

– Transparent documentation of heuristics and model logic

This lets you plug their capabilities into your own detection pipeline rather than being boxed into a black‑box product.

Troubleshooting: why your anomaly detection might be underperforming

Even well‑funded teams regularly run into similar problems. Here’s how to recognize and fix them.

Problem 1: A flood of false positives

If your system is flagging half the chain as anomalous, it’s not useful. Common causes:

– Feature leakage from volume. High‑volume entities (major exchanges, custodians) will always look “weird” compared to small wallets. Segment your population (retail, institutional, infrastructure) and train separate baselines.

– Single‑view myopia. You’re only looking at amounts and frequency, not path context or entity types. Incorporate graph- and path‑based features to distinguish noise from genuinely suspicious routing patterns.

– No feedback loop. Analyst decisions aren’t being fed back; thresholds never get re‑calibrated.

Mitigation: introduce tiered alerting (critical, high, medium), throttle noisy segments with additional conditions, and regularly re‑fit models with up‑to‑date labels.

Problem 2: Missing obvious bad behavior

Sometimes the inverse happens: your models look smart but still miss attacks that later show up in the news.

Key causes:

– Data gaps. You’re not parsing certain DeFi protocols or bridges correctly, so funds seem to “disappear.” Verify coverage chain by chain, protocol by protocol.

– Over‑reliance on historic norms. New typologies (e.g., novel bridge exploits, fresh mixer designs) don’t look unusual yet. Combine behavior‑based models with watchlists and community threat intel.

– Too coarse a granularity. Looking only at daily aggregates can hide short, intense bursts of suspicious activity.

Mitigation: add real‑time pattern detectors for known high‑risk behaviors, tighten feature windows (e.g., 5‑minute and 1‑hour views), and run retroactive back‑tests after every major public incident to see where your system failed.

Problem 3: Model drift and silent degradation

On-chain ecosystems move quickly: new chains, new tokens, new protocols, new bridges. Your model trained on 2022 data may be badly miscalibrated by mid‑2024.

Watch for:

– A steady drop in average anomaly scores without any policy change

– Sudden changes in feature distributions after major events (e.g., airdrops, forks, regulatory news)

– Increased analyst complaints that alerts feel “off” or outdated

Mitigation: schedule regular retraining (e.g., quarterly), use rolling windows of data, and keep a small “canary” evaluation set of labeled cases to track performance over time.

Using statistics from 2022–2024 to set expectations

Let’s tie the last three years of data into practical targets.

– From 2022 to 2024, public reports consistently showed that illicit on‑chain volume stayed in the tens of billions annually, but as a fraction of all crypto activity it remained below 1%. This means your system is looking for a very small needle in a very large haystack.

– Enforcement action volumes and regulatory pressure increased over the same period, especially against mixers, unregistered VASPs, and lax KYC programs. That’s a signal that regulators expect far more continuous monitoring and proactive detection than they did even three years ago.

– Many large exchanges reported significant drops in fraud losses and compliance breaches after adopting autonomous, behavior‑based monitoring, often citing double‑digit percentage reductions in false positives and meaningful cuts in time‑to‑detect for hacks and account takeovers.

Use numbers like these internally to frame ambitions. You’re not going to catch 100% of illicit activity, but you should be able to:

– Reduce average detection time for major typologies (e.g., hacks, account takeovers) from days to minutes or hours.

– Cut false positives enough that analysts can focus on high‑severity cases.

– Demonstrate year‑over‑year improvement in coverage, precision, and response time, backed by quantitative metrics.

Practical tips for getting value quickly

In case all of this still sounds intimidating, here’s a pragmatic approach to extracting value fast:

1. Start with a narrow slice. Pick one high‑risk chain and one typology (e.g., exchange deposit monitoring for mixer‑linked flows).

2. Leverage an existing vendor. Use a vendor’s data and screening APIs as your baseline, then add a thin anomaly‑detection layer on top using your own infrastructure.

3. Prioritize explainable features. Focus first on features that map cleanly to human‑understandable concepts: distance to illicit clusters, burstiness, unusual counterparties.

4. Integrate with your analysts early. Let analysts see anomaly scores and contributing factors from day one; use their feedback as labeled data.

5. Iterate and expand. Once you’re comfortable with one use case, clone the pattern to other chains, customer segments, and risk types.

Over time, this approach turns your stack into more than just a set of crypto anti money laundering tools with anomaly detection; it becomes an evolving, institution‑specific defense system that learns from every case you touch.

—

Autonomous anomaly detection on-chain is not about replacing analysts with opaque models. It’s about giving human teams enough signal, context, and automation to stay ahead of financial crime that’s getting faster, more fragmented, and more creative every year. With the right data, tools, and feedback loops, your monitoring can move from “reactive and overloaded” to “proactive and adaptive” — which is exactly where regulators, customers, and your own risk teams increasingly expect you to be.